Intel Core i7-975 EE and Core i5-750 in Contemporary Games

When it comes to performance in contemporary games, the discussion is almost always about graphics accelerators. Today we are going to approach the matter in a somewhat unusual way and try to find out whether today’s gaming fans really need high-performance processors.

Once upon a time, when PC games used to be 2-dimensional, every kind of graphics processing was done on the CPU. This was also true for early 3D games. Neither Wolfenstein 3D nor Doom with its numerous clones listed a graphics accelerator among their system requirements. Well, they couldn’t since there were no graphics accelerators at that time and game developers did not rely on them. Moreover, talking about gaming applications of their products, Intel and AMD focused on enhancing the capabilities of their CPUs in the way of MMX and 3DNow! instruction sets. Intel promoted MMX as a means to boost the quality, level of detail and speed of gaming graphics whereas the name of AMD’s technology speaks for itself.

This situation went on for quite a while. Even rather late projects by id Software and Epic Games such as Quake and Unreal used the CPU as the main tool for processing graphics, notwithstanding the significantly expanded game worlds. Things changed in 1996 when the young and obscure firm 3dfx Interactive unveiled the world’s first 3D graphics accelerator for the PC that ordinary gamers could afford. The product looks ridiculous by today’s standards as it could only map and filter textures, but the quality of the filtering was unprecedented at that time. None of the then-existing CPUs, whatever multimedia instruction sets they supported, could deliver such performance even at a lower image quality. A game would look completely different running in Glide mode as opposed to software mode.

Software mode:

Glide mode:

That was the first revolution in the world of gaming 3D graphics. CPUs were losing their ground year by year, their influence on performance constantly diminishing. There were other turning points, the next one being the Nvidia GeForce 256, the world’s first graphics processor with a TCL unit (Transformation, Clipping, Lighting) that could transform 3D coordinates into 2D ones, clip polygons and light the scene, offloading the CPU. As is often the case, the new product did not take off immediately. Nvidia’s opponents still relied on the growing computing capacities of CPUs for those tasks, yet hardware TCL had become widespread by the end of 2001 anyway. The same year there was a third revolution that expanded the capabilities of GPUs even more by making them programmable. The Nvidia NV20 (GeForce 3) came out as the first chip to support DirectX 8.0. And the last notable innovation occurred in 2002 when ATI Technologies announced the R300, the first GPU to support DirectX 9.0.

From that moment onwards, GPUs developed in an evolutionary way. New versions of DirectX and OpenGL were implemented. The computing part, originally divided into vertex and pixel processors, became unified. New types of shaders were supported and there were lots of other innovations. GPUs quickly surpassed ordinary CPUs in sheer computing power, giving birth to the idea of using them not only to process graphics but also to accelerate complex computations unrelated or loosely related to 3D applications. Both leading developers, AMD and Nvidia, are working actively in this direction, but that’s not the point of this review. Looking at the computing capabilities of today’s GPUs, which are measured in teraflops (more than the huge supercomputers of earlier times could offer!), one might find the parameters of modern CPUs rather humble.

This raises the natural question of whether 3D games need powerful CPUs at all. The answer is not as simple as it seems. First, if some GPU resources are allotted to computing the game AI or physics model, fewer resources are left for graphics processing. And we know only too well that today’s games have very complex visuals that may take all 1600 stream processors of an RV870 chip to render at a decent frame rate. Second, it is not so easy to rewrite the game code to make maximum use of GPU resources. It looks like a number of computational tasks, including gaming ones, are still performed better on the CPU, which is why premium-class gaming computers from famous manufacturers like Alienware are equipped with extremely fast and expensive CPUs. In particular, the quad-core Intel Core i7-975 Extreme Edition cost $999 when announced, and the newest six-core Core i7-980X costs that much today. That’s quite a lot, but a top-end graphics card is expensive as well. For example, a Radeon HD 5850 costs about $300 whereas the topmost solutions that deliver maximum performance cost as much as $600-800 (a dual-processor Radeon HD 5970) or even $1000 and more (a pair of Nvidia GeForce GTX 480 cards working in SLI mode).

Core i7-975 EE vs. Core i5-750 in Games

We know that the frame rate of a modern video game depends much more on the graphics card than on the CPU, but does it make sense to try to save on the latter? Won’t a cheaper CPU be a bottleneck in a system with the newest graphics card? We are going to investigate this issue using all of our testing tools. The platform we use for benchmarking graphics cards is already equipped with one of the fastest CPUs available today. It is an Intel Core i7-975 Extreme Edition that sells in retail for $700-800. Let’s compare it with an affordable Intel Core i5-750:

The Core i5-750 is appealing from a financial point of view. Having a recommended price of $199, it can be found selling for $170-190. It is a full-featured quad-core CPU with the Nehalem architecture and 8 megabytes of L3 cache, but without Hyper-Threading. The latter technology is unimportant for gaming whereas the amount of cache matters. And the i5-750 is just as good as the i7-975 EE in terms of cache memory. It must also be noted that a good LGA1156 mainboard is going to be much cheaper than an LGA1366 one.

From every aspect the Core i5-750 looks a highly attractive option for a gamer who wants to save some money on the CPU and invest it into a faster graphics card. Having both platforms at our disposal, we want to check out how wise such economy is and if the affordable CPU will allow the graphics card to show its best.

Testbed and Methods

We are going to compare the performance of Intel processors with the Nehalem microarchitecture on two testbeds, which differ in their mainboards, memory configurations and, of course, CPUs.

Intel LGA1366 platform:

- Intel Core i7-975 Extreme Edition processor (3.33 GHz, 6.4 GT/s QPI);

- Gigabyte GA-EX58-Extreme mainboard (Intel X58 Express chipset);

- Corsair XMS3-12800C9 (3 x 2 GB, 1333 MHz, 9-9-9-24, 2T).

Intel LGA1156 platform:

- Intel Core i5-750 processor (2.66 GHz, 2.4 GT/s DMI);

- Intel DP55KG mainboard (Intel P55 Express chipset);

- Corsair XMS3-12800C9 (2 x 2 GB, 1333 MHz, 9-9-9-24, 2T).

Other system components were identical and included the following:

- Scythe SCKTN-3000 Katana 3 CPU cooler;

- Samsung Spinpoint F1 HDD (1 TB, 32 MB buffer, SATA II);

- Ultra X4 850 W Modular power supply;

- Dell 3007WFP monitor (30″, 2560×1600 @ 60 Hz max display resolution);

- Microsoft Windows 7 Ultimate 64-bit;

- Nvidia GeForce 197.41 WHQL for Nvidia GeForce;

- ATI Catalyst 10.4 for ATI Radeon HD.

The ATI Catalyst and Nvidia GeForce graphics card drivers were configured in the following way:

ATI Catalyst:

- Smoothvision HD: Anti-Aliasing: Use application settings/Box Filter

- Catalyst A.I.: Standard

- Mipmap Detail Level: High Quality

- Wait for vertical refresh: Always Off

- AAMode: Quality

- Other settings: default

Nvidia GeForce:

- Texture filtering – Quality: High quality

- Texture filtering – Trilinear optimization: Off

- Texture filtering – Anisotropic sample optimization: Off

- Vertical sync: Force off

- Antialiasing – Gamma correction: On

- Antialiasing – Transparency: Multisampling

- Set PhysX GPU acceleration: Enabled

- Ambient Occlusion: Off

- Other settings: default

Below is the list of games and test applications we used during this test session:

First-Person 3D Shooters

- Battlefield: Bad Company 2

- Call of Duty: Modern Warfare 2

- Crysis Warhead

- Far Cry 2

- Metro 2033

- S.T.A.L.K.E.R.: Call of Pripyat

- Wolfenstein

Third-Person 3D Shooters

- Just Cause 2

- Resident Evil 5

- Street Fighter IV

RPG

- Mass Effect 2

Simulators

- Colin McRae: Dirt 2

- Tom Clancy’s H.A.W.X.

Strategies

- BattleForge

- World in Conflict: Soviet Assault

Semi-synthetic and synthetic Benchmarks

- Futuremark 3DMark Vantage

We selected the highest possible level of detail in each game using the standard tools provided in the game’s own menu. The games’ configuration files weren’t modified in any way, because the ordinary user doesn’t have to know how to do it. We ran the tests in the following resolutions: 1600×900, 1920×1080 and 2560×1600. Unless stated otherwise, we added 4x MSAA antialiasing to the standard 16x anisotropic filtering wherever possible. We enabled antialiasing from the game’s menu. If this was not possible, we forced it using the appropriate settings of the ATI Catalyst and Nvidia GeForce drivers.

Since there is an enormous number of graphics card models available these days, we tried to make sure that each of the two major players in the discrete gaming graphics market is represented by super-powerful flagship solutions as well as comparatively affordable mainstream models, which are popular among users with a limited budget. As a result we ended up with three graphics accelerators each from ATI and Nvidia:

- ATI Radeon HD 5970

- ATI Radeon HD 5850

- ATI Radeon HD 5770

- Nvidia GeForce GTX 480

- Nvidia GeForce GTX 470

- Nvidia GeForce GTX 275
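The resulting test matrix for a single game can be sketched in a few lines of Python. This is only an illustration of how the two platforms, six cards and three resolutions listed above combine; the variable and function names are our own and do not correspond to any actual test harness.

```python
from itertools import product

# Platform, card and resolution names are taken from the testbed description.
PLATFORMS = ["LGA1366 / Core i7-975 EE", "LGA1156 / Core i5-750"]
CARDS = [
    "Radeon HD 5970", "Radeon HD 5850", "Radeon HD 5770",
    "GeForce GTX 480", "GeForce GTX 470", "GeForce GTX 275",
]
RESOLUTIONS = ["1600x900", "1920x1080", "2560x1600"]


def test_matrix():
    """Return every (platform, card, resolution) combination for one game."""
    return list(product(PLATFORMS, CARDS, RESOLUTIONS))


if __name__ == "__main__":
    runs = test_matrix()
    # 2 platforms x 6 cards x 3 resolutions
    print(len(runs))
```

Each game therefore yields 36 individual measurements, which is why the charts are grouped by GPU developer.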

Performance was measured with the games’ own tools and the original demos were recorded if possible. We measured not only the average speed, but also the minimum speed of the cards where possible. Otherwise, the performance was measured manually with Fraps utility version 3.1.2. In the latter case we ran the test three times and took the average of the three for the performance charts.
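For the manually measured games, the averaging step might look like the minimal sketch below. It assumes each pass has already been reduced to an (average fps, minimum fps) pair and that both values are averaged in the same way; the sample numbers are hypothetical and are not taken from our charts.

```python
from statistics import mean


def average_runs(runs):
    """Average (avg_fps, min_fps) pairs from repeated passes of one test.

    Assumption: the minimum is averaged across passes just like the average.
    """
    avg_fps = mean(r[0] for r in runs)
    min_fps = mean(r[1] for r in runs)
    return round(avg_fps, 1), round(min_fps, 1)


if __name__ == "__main__":
    # Hypothetical results of three manual passes: (average fps, minimum fps)
    sample = [(61.2, 44.0), (59.8, 43.0), (60.4, 45.0)]
    print(average_runs(sample))
```

Averaging several passes smooths out the run-to-run variation that is unavoidable when a test sequence is played by hand.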

Performance in First-Person 3D Shooters

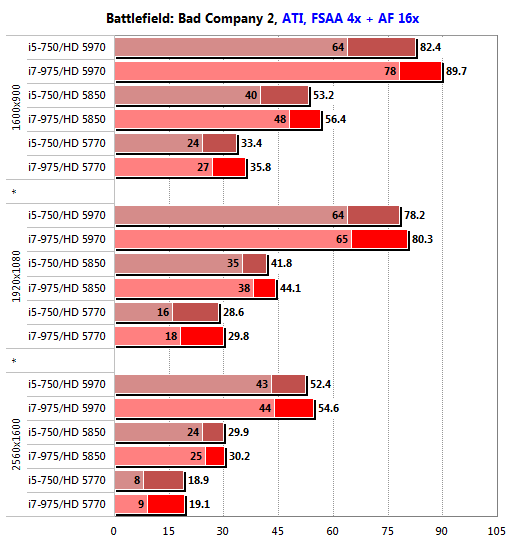

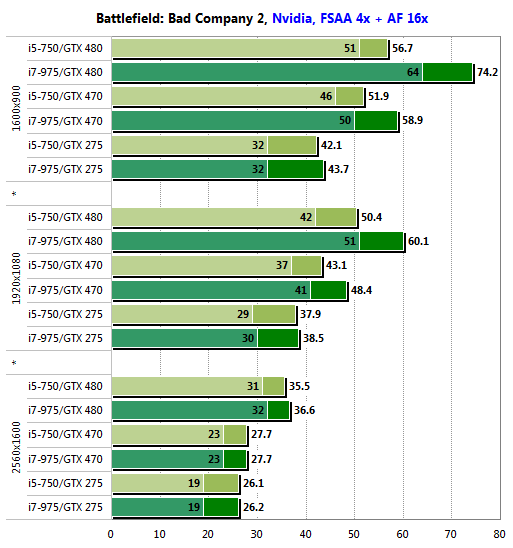

Battlefield: Bad Company 2

The CPU does not affect the frame rate much in this game and its influence lowers at higher resolutions. The Core i7-975 EE is 6-9% ahead of the Core i5-750 in terms of average speed at 1600×900, but at 2560×1600 the gap is only 4% with the dual-processor card and within 1% with the single-GPU Radeon HD 5000 series models. It is at the lowest resolutions that the CPU affects the bottom speed the most. For the Radeon HD 5770, replacing the CPU with a faster one can already make sense then, but we guess that purchasing a faster graphics card, e.g. a Radeon HD 5850, will be an even better solution.

It’s different with the Nvidia products. For example, the senior GeForce series model will perform better with a top-end CPU: the difference between the two CPU models we use in this test session can be as large as 30% (in favor of the Core i7-975 EE, of course). The GeForce GTX 470 depends less on the CPU whereas the previous-generation architecture does not benefit much from the faster CPU because the frame rate seems to be limited by the graphics card itself. The graphics card’s role naturally grows as the display resolution gets higher, but the new GeForce GTX 400 series models perform better when coupled with a top-speed CPU. The only exception is the extreme resolution of 2560×1600 pixels where this difference is reduced to naught. Anyway, the GeForce GTX 400 will benefit from a top-end CPU whereas the Radeon HD 5850, for example, can do just fine without one. By the way, this may be an indication of higher efficiency of the AMD Catalyst driver over the Nvidia GeForce one.

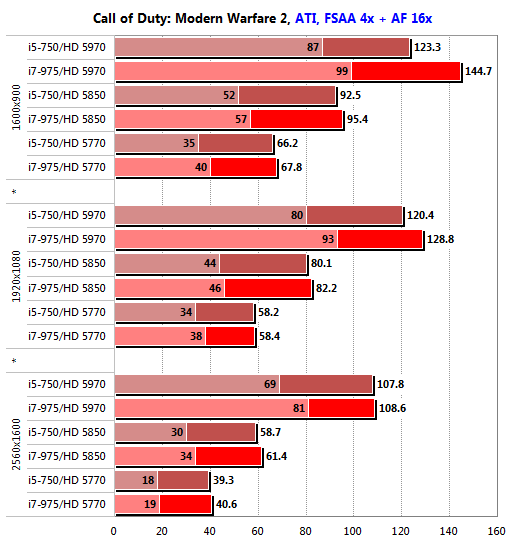

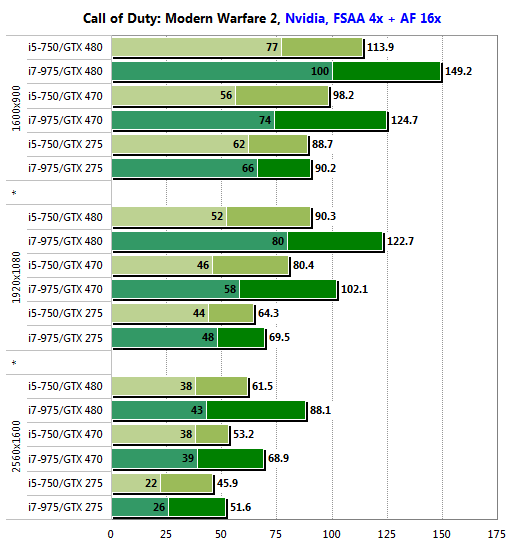

Call of Duty: Modern Warfare 2

The AMD products are somewhat more CPU-dependent in this game, the maximum effect from the faster CPU amounting to 15% and higher – with the Radeon HD 5970 at 1600×900. This effect is no higher than 7% at the higher resolutions, though, and is really hard to see at all at 2560×1600. Even the Radeon HD 5770 speeds up by a mere 3% with the faster CPU then, the bottom speed remaining roughly the same. Thus, the wise solution is to save on the CPU and invest the money into a better graphics card.

It’s different with the Nvidia cards. They become increasingly CPU-dependent as the display resolution grows, which is especially conspicuous with the GeForce GTX 400 series: the senior and junior models enjoy a performance boost of 43% and 30%, respectively, in terms of average frame rate. The Core i7-975 EE can even make the game comfortable to play on the GeForce GTX 275 at 2560×1600! Perhaps this is some future potential which Nvidia can tap by releasing new and optimized drivers.

Crysis Warhead

We don’t have a clear picture here. The tested cards perform predictably at 1600×900 but then we see a queer thing at 1920×1080: the performance of the top-end model gets somewhat lower when we switch from the LGA1156/Core i5-750 to the LGA1366/Core i7-975 EE platform. This may be due to a negative effect of Hyper-Threading technology which is not supported by the Core i5-750, but we can also write it off as a measurement inaccuracy. The Core i5-750 platform suddenly slows down at 2560×1600 but this was due to the smaller amount of system memory: 4 gigabytes as opposed to 6 gigabytes on the LGA1366 platform.

The Nvidia cards show a similar effect, in this case with the GeForce GTX 275 at 1600×900, and a difference of 5% can hardly be due to measurement inaccuracy alone. This effect does not show up at the higher resolutions where the GeForce series products perform in different ways. For example, the GeForce GTX 470 is 6% faster with the better CPU at 1920×1080 but does not benefit from the latter at 2560×1600 whereas the GeForce GTX 480 speeds up by 8% with the top-end CPU at the highest resolution. As opposed to the previous tests, the Nvidia cards are not very CPU-dependent, but they only deliver high speed at 1600×900 (and the bottom speed of the GeForce GTX 275 is too low to be comfortable even then). Oddly enough, the LGA1156 platform is quite satisfied with 4 gigabytes of system memory here. We don’t see any performance slump at the highest resolution as with the AMD cards.

Far Cry 2

The junior graphics card in this review is obviously a bottleneck and its performance doesn’t depend much on the CPU and amount of system memory. The more advanced cards offer more headroom in choosing your CPU, especially at 1600×900 (but this resolution is unlikely to be used with top-end graphics cards). At the higher resolutions the Core i7-975 EE does not boost the frame rate by more than 5%, so there is no sense in preferring it to the cheaper Core i7-920 if you’ve got an LGA1366 mainboard. And if you are building your computer from scratch, you may as well go with the LGA1156 or even AMD AM3 platform, especially as both platforms offer more advanced processors than junior Core i5 or AMD Phenom II models.

The same is true for the Nvidia cards. We can only note that the GeForce GTX 470 proves to be the least CPU-dependent model in this test. That card is going to perform nicely in Far Cry 2 together with an affordable CPU, but you must be aware that it may only show its best with some more advanced CPUs than the Core i5-750.

Metro 2033

This game runs without multisampling antialiasing because it worsens the quality of textures and lowers the frame rate. Contrary to our expectations, the AMD products prove to depend more on the CPU at 2560×1600. At the lower resolutions the difference between the two CPUs from Intel is negligible.

The same is true for the Nvidia GeForce series, the GeForce GTX 400 cards being somewhat slower with the top-end CPU at high resolutions. This may be a negative effect of Hyper-Threading technology which is enabled in the Intel Core i7-975 EE by default.

S.T.A.L.K.E.R.: Call of Pripyat

We use the game’s DirectX 10.1 and DirectX 11 modes for graphics cards that support them.

The Radeon HD 5850 is the least CPU-dependent product from AMD here. When installed into the LGA1156 platform, it slowed down by 0.5 to 21% whereas the other Radeons could be up to 30-35% slower with the Core i5-750, especially at the lowest resolution.

The Nvidia cards are good in this test. The GeForce GTX 480 slows down by 17% only when moving to the slower platform. The difference is even smaller than 10% at high resolutions. The less advanced graphics cards are even more indifferent to the CPU. There is one important nuance with graphics cards from both developers: they deliver a higher bottom speed on the LGA1366 platform with Core i7-975 processor and you should take this into account if you want to play at high resolutions.

Wolfenstein

This game is tested in multiplayer mode that uses the OpenGL API. The integrated benchmark does not report the bottom frame rate.

This game is rather simple in terms of visuals and 3D technologies. The CPU doesn’t influence the frame rate much as we benchmark the multiplayer mode. The difference between the two Intel platforms is about 10%, reaching 12-15% in a few individual cases like when the top-end cards from AMD and Nvidia perform at low resolutions. At 2560×1600 the difference between the expensive and affordable CPUs is negligible.

Performance in Third-Person 3D Shooters

Just Cause 2

This game’s integrated benchmark does not report the bottom frame rate.

The AMD cards do not lose much speed when switching from the LGA1366/Core i7-975 EE platform to the LGA1156/Core i5-750 one. It is only in one case that the gap is more than 15%, with the Radeon HD 5770 at 1920×1080 (this resolution is too high for that card anyway). Like in most other tests, the smallest effect is observed at 2560×1600 where the capabilities of the graphics subsystem come to the fore.

The Nvidia products do not show clear trends but the GeForce GTX 275 is overall more CPU-dependent in this game than its newer GF100-based cousins. The cheaper CPU and mainboard do not provoke a serious performance hit. If the frame rate is high on the LGA1366/Core i7-975 EE, it is also playable on the LGA1156/Core i5-750. The opposite is true, too. If you buy a top-end CPU, you still won’t be able to increase the frame rate to a comfortable level with a GeForce GTX 275 despite the formal performance increase of 25%.

Resident Evil 5

This test is unique as the difference between the two platforms having the same graphics card inside may be as high as 45 and even 60%! On the other hand, the frame rates are so high that this performance boost has no practical value. And the difference becomes small at 2560×1600, just when the extra speed would be most called for. The only exception is the Radeon HD 5970 which is 16% slower with the Core i5-750 at the highest resolution, but the difference between 110 and 95 fps cannot be felt without Fraps.
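A percentage gap depends on which result is taken as the baseline, which the short sketch below illustrates with the rounded 110 and 95 fps figures quoted above. The function names are our own; the 16% mentioned in the text corresponds to the gap measured relative to the slower result.

```python
def percent_faster(fast_fps, slow_fps):
    """Gap expressed relative to the slower result."""
    return (fast_fps - slow_fps) / slow_fps * 100.0


def percent_slower(fast_fps, slow_fps):
    """The same gap expressed relative to the faster result."""
    return (fast_fps - slow_fps) / fast_fps * 100.0


if __name__ == "__main__":
    # Rounded Radeon HD 5970 frame rates at 2560x1600 quoted in the text
    print(round(percent_faster(110, 95), 1))  # 15.8
    print(round(percent_slower(110, 95), 1))  # 13.6
```

Either way, a gap of 15 fps at frame rates around 100 fps is far below what a player can perceive.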

Street Fighter IV

The integrated benchmark does not report the bottom frame rate.

The Nvidia solutions are less CPU-dependent than their opponents in this game. The Radeon HD 5000 series is up to 30-35% slower on the LGA1156/Core i5-750 platform than on the LGA1366/Core i7-975 EE. With the Nvidia cards, the maximum difference is 15%, which is observed with the GeForce GTX 480 at 1600×900. In the other cases, the difference is even smaller than 10%, the GeForce GTX 275 being even faster on the slower platform (this must be due to the Core i5-750 not supporting Hyper-Threading). The frame rate is playable in every case, so this game does not really call for a top-end CPU.

Performance in RPG

Mass Effect 2

We enforced full-screen antialiasing using the method described in our special Mass Effect 2 review.

The game is rather indifferent to the CPU and mainboard, at least when it comes to the CPUs we use in this test. After all, the affordable Core i5-750 is quite an advanced CPU with four cores, and we did not have the opportunity to perform the test with a dual-core model. We can note that the Nvidia products are more CPU-dependent. Moreover, the bottom speed does not change much with the Radeon HD 5000 series whereas the Nvidia cards have a lower bottom speed on the LGA1156/Core i5-750 platform at resolutions up to 1920×1080. We can see no situation where the cheaper CPU does not allow playing comfortably. Like in the other cases, the wisest decision is to save on the CPU and invest the money into a faster graphics card.

Performance in Simulators

Colin McRae: Dirt 2

We enable the DirectX 11 mode for graphics cards that support it.

The performance of the Radeon HD 5000 series cards does not depend much on the platform: the Core i7-975 EE is only 11% ahead at best, which is too small to make any practical difference. Replacing a Radeon HD 5770 with a Radeon HD 5850 is going to be a better decision since the latter card delivers comfortable performance at 2560×1600 irrespective of the CPU and platform.

The Nvidia cards are more sensitive to the platform, especially the newer GF100-based products which need a top-end CPU to show their best. However, it is still better to buy a faster graphics card rather than a faster CPU especially as the G200-based models from Nvidia do not support the DirectX 11 capabilities which improve the visuals of this particular game greatly.

Tom Clancy’s H.A.W.X.

The game’s integrated benchmark cannot report the bottom frame rate. We use DirectX 10 and 10.1 modes here.

Here, all of the graphics cards, except for the flagship models, deliver the same results irrespective of what platform they work on. The Radeon HD 5970 and the GeForce GTX 480 are really limited by the Core i5-750. The former performs 2-5% faster and the latter, 25-30% faster when the CPU is replaced with the Core i7-975 EE. On the other hand, these performance benefits are not crucial enough to justify the investment into the expensive CPU. Purchasing a faster graphics card will be the wiser decision.

Performance in Strategies

BattleForge

We use DirectX 11 mode for graphics cards that support it.

The results are indicative of the near identical reaction of the AMD cards to the platform and CPU. Among the Nvidia cards, the GeForce GTX 480 is the only card to depend on the CPU. This beast seems to be only satiated by a most powerful CPU indeed as the increased bottom speed indicates. On the other hand, the frame rate is never lower than 25 fps even at 2560×1600, so a faster CPU does not make much point even here.

World in Conflict: Soviet Assault

The last gaming test in this review produces unique results. We’ve finally found a game where the faster CPU is justifiable in a gaming platform as it determines whether the game is playable or not. Just take a look at the results of the Radeon HD 5850. You can also see that the Core i7-975 EE/Radeon HD 5770 configuration is preferable to the Core i5-750/Radeon HD 5850 one at 1600×900 due to the higher bottom speed.

Nvidia cards behave in the same manner. Not only the GeForce GTX 275 but also the advanced GeForce GTX 470 can benefit from working together with a faster CPU. The difference in bottom speed is considerable even with the GeForce GTX 480, although this card runs the game fast enough even with the weaker CPU. Overall, we can note that Nvidia solutions are less CPU-dependent than the Radeon HD 5800 series.

Performance in Semi-Synthetic Benchmarks

Futuremark 3DMark Vantage

We minimize the CPU influence by using the Extreme profile (1920×1200, 4x FSAA and anisotropic filtering). We also publish the results of the individual tests across all resolutions.

This is a rather resource-consuming benchmark, especially in the Extreme mode, so the CPU does not influence the result much. When it comes to the AMD solutions, replacing the Core i5-750 with the Core i7-975 EE leads to a performance growth of 7-8%, but may also provoke a small performance hit with some graphics card models. With the Nvidia cards, the frame rate does not grow by more than 2%.

We can see a similar picture in the individual tests. There is no notable effect even at low resolutions, let alone 2560×1600. In other words, a platform with a rather weak CPU can score quite well in 3DMark if you equip it with a top-end graphics card or a multi-GPU tandem.

Conclusion

The results of our comparative test of two Intel platforms are easy to understand and explain. Yes, it is best to equip your gaming platform with both a top-performance graphics card and a premium-class CPU if you’ve got the money, but what if you haven’t? According to our tests, the graphics card being the same, the performance of the platform with an Intel Core i7-975 EE processor can be 10 to 30% higher than that of the platform with an Intel Core i5-750.

But as our tests have also shown, this difference is far from crucial and can be easily made up for by purchasing a better graphics card. It is only in individual cases such as Call of Duty: Modern Warfare 2 or WiC: Soviet Assault that using a top-end CPU is indeed justifiable and necessary to enjoy a comfortable frame rate, but such games are rather rare. With the current prices, buying a Core i5-750 for $200 instead of a Core i7-975 EE for $650-800 will save you about $400 or more, which you can spend on a Radeon HD 5850/5870 or even a GeForce GTX 480.
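The budget arithmetic is simple enough to sketch. The prices below are the approximate figures quoted in this article ($200 for the Core i5-750, $650-800 for the Core i7-975 EE, about $300 for a Radeon HD 5850); the function names are our own, and mainboard and memory price differences are deliberately left out.

```python
def cpu_savings(cheap_cpu, expensive_cpu_range):
    """Return the (low, high) savings from choosing the cheaper CPU."""
    low, high = expensive_cpu_range
    return low - cheap_cpu, high - cheap_cpu


def covers_card(savings_range, card_price):
    """True if even the smallest saving pays for the given graphics card."""
    return savings_range[0] >= card_price


if __name__ == "__main__":
    savings = cpu_savings(200, (650, 800))
    print(savings)                     # (450, 600)
    print(covers_card(savings, 300))   # True for a ~$300 Radeon HD 5850
```

With these figures the saving comes to $450-600, so even the lower bound more than covers a Radeon HD 5850.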

As for specific recommendations concerning the choice of a graphics card, the current Radeon HD 5000 series from AMD is overall less CPU-dependent than the GeForce GTX 400 and 200 series from Nvidia. This trend does not hold true in every game, however, and you can see the opposite situation in certain games. But whatever games you are going to play, we recommend choosing a top-class graphics card over an expensive top-end CPU if you can’t afford both. This will ensure high performance in most modern as well as upcoming games. Besides, the Intel LGA1156 platform is at its peak right now and you can buy an inexpensive Core i5-750 today to replace it with a more advanced model, e.g. a Core i7-870, in the future.