“All Inclusive”: Nvidia GeForce GTX 690 2×2 GB Super Graphics Card Review

Today we are introducing the fastest graphics accelerator of our time, and most likely of the near future as well.

Nvidia warmed the public up for the official announcement of the GeForce GTX 690 in many ways. They started a countdown to a secret event and also sent prybars to hardware reviewers. Yes, prybars rather than graphics cards! This PR move was quite successful: dozens of uses for the tool were proposed in forum discussions around the Web, although the correct answer was obvious enough. Of course, samples of the new product were sent out as well, just a few days before the market release. We managed to finish our own preparations just in time, and here is our review of the fastest graphics card available today!

It’s no secret that the GeForce GTX 690 is a dual-GPU card with two GK104 Kepler processors, so one would expect it to share the typical traits of earlier products of this kind, such as the Nvidia GeForce GTX 590 or AMD Radeon HD 6990: large size, high power consumption and PSU requirements, high temperatures and a noisy cooler (compared to single-GPU products). A dual-GPU card is also expected to depend on software optimizations to deliver its best performance. Jumping ahead a little, we can tell you that with the GeForce GTX 690 Nvidia has managed to overturn, or at least shake, nearly all of these stereotypes. So, let’s take a look at it.

Closer Look at Nvidia GeForce GTX 690 2x2GB Graphics Card

Technical Specifications and Recommended Pricing

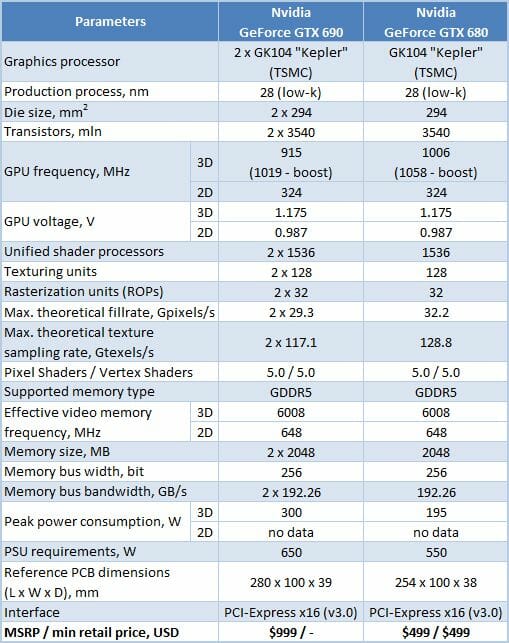

The technical specs of the new Nvidia GeForce GTX 690 are summed up in the following table side by side with the specifications of the GeForce GTX 680 for comparison purposes:

Wooden Box and Prybar

We’ve never seen packaging like that in our many years of testing graphics cards. It is a large and sturdy wooden box nailed up all over.

It’s impressive, to say the least, especially when you realize that this rough packaging contains a high-tech $999 product. That’s what the prybar is for, by the way. The binary code on the side of the box, 0b1010110010, stands for the card’s model number (690), and there’s a warning on the top: “Caution. Weapons Grade Gaming Power”.
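The crate’s puzzle is easy to verify. The minimal Python sketch below decodes the printed digits by positional expansion (each step doubles the running value and adds the next bit); the function name `decode_binary` is our own, and the built-in `int(..., 2)` is used only as a cross-check.

```python
# Decode the binary number printed on the GTX 690 shipping crate.
def decode_binary(digits: str) -> int:
    """Interpret a string of 0/1 digits as an unsigned base-2 integer."""
    value = 0
    for d in digits:
        if d not in "01":
            raise ValueError(f"not a binary digit: {d!r}")
        value = value * 2 + int(d)  # shift left one place, then add the new bit
    return value

code = "0b1010110010"  # as printed on the box
digits = code[2:] if code.startswith("0b") else code
print(decode_binary(digits))  # 690
assert decode_binary(digits) == int(digits, 2)  # agrees with Python's built-in parser
```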

Although the prybar doesn’t contain 1500 shader processors and will never reach an operating frequency of 1 GHz, it proved perfect for its job. We opened the box in just a few seconds.

Two thick sheets of foam rubber securely hold the masterpiece of engineering thought, the object of desire for millions of ordinary gamers, the No.1 item on the shopping list of a few thousand wealthy enthusiasts – the GeForce GTX 690 graphics card.

There are no accessories, not even power cables, but this is just a pre-release sample for testing purposes. The GeForce GTX 690 will be available from many brands such as ASUS, EVGA, Gainward, Galaxy, Gigabyte, Inno3D, MSI, Palit and Zotac, and these makers are going to put everything necessary into the box (we’re not sure about the prybar, though). Once again, the recommended price of the new card is $999.

Design and Features

Nvidia touts its GeForce GTX 690 as the acme of computing technologies, comparing it to the Ferrari F12 Berlinetta supercar and the B&W Nautilus audiophile speaker system. Indeed, this graphics card shows the developer’s attention to every detail.

The face side of the PCB, which is 280 millimeters long, is covered by the cooler’s casing. Instead of plain plastic or ordinary metal, the casing is made of aluminum coated with trivalent chromium and has a black magnesium-alloy insert around the fan and two polycarbonate windows through which you can see the GPU heatsinks. The whole thing looks very stylish. Still, a photo can’t convey the pleasure you feel while holding this card in your hands.

The reverse side of the PCB is exposed.

That’s okay, because an exposed PCB is cooled better than one sealed completely inside a casing. One edge of the card carries illuminated “GEFORCE GTX” lettering. You’ll see it again later in this review.

There’s nothing on the other edge, and we can note the lack of vent holes there. There is a vent grid in the card’s mounting bracket, though, which also carries three dual-link DVI-I connectors and one mini-DisplayPort.

Thanks to this configuration of video outputs, you can connect as many as four HD monitors to a single GeForce GTX 690.

There’s an opening in the opposite end of the casing through which you can see the fins of one of the GPU heatsinks. Some of the hot air is exhausted through that opening into the system case.

The GeForce GTX 690 is equipped with one SLI connector, so you can build a multi-GPU configuration with two such cards and a total of four top-end GPUs!

At the back of the card we can see two 8-pin power connectors. The GeForce GTX 690 is specified to have a peak power draw of 300 watts and a typical power draw of 263 watts. A 650-watt or higher PSU is recommended for a computer with a GeForce GTX 690 inside.

The 10-layer PCB carries two GK104 Kepler chips, two 2 GB banks of GDDR5 memory, a PCI Express switch chip and the power circuitry. The power system has 10 phases. The two GPUs communicate via a PLX Technology PEX8747 chip that supports up to 48 PCI Express lanes.

Both GK104 Kepler chips are revision A2.

If you’ve examined the specs, you must have noticed that the GeForce GTX 690 comes with full-featured Kepler GPUs that are not cut down in any way. The card has a total of 3072 unified shader processors, 256 texture-mapping units and 64 raster operators. However, the base GPU clock rate is lower than on the GeForce GTX 680: 915 MHz in 3D applications, or 91 MHz below the GTX 680. The boost clock rate is 1019 MHz, which is only 3.9% lower than the boost clock rate of the GeForce GTX 680. In 2D mode, the GPU frequency drops to 324 MHz.
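The figures above reduce to simple arithmetic. This Python sketch, which is only a cross-check of the quoted numbers, splits the card-wide totals evenly between the two GPUs (giving 1536 shaders, 128 TMUs and 32 ROPs per chip, the same as the full GK104 on the GTX 680) and computes the base-clock gap against the GTX 680’s 1006 MHz from our testbed list. The even split is an assumption that holds because both GPUs are identical, uncut chips.

```python
# Per-GPU unit counts and base-clock gap for the dual-GK104 GeForce GTX 690.
GTX680_BASE_MHZ = 1006  # GTX 680 base clock, from the testbed list
GTX690_BASE_MHZ = 915   # GTX 690 base clock in 3D mode
GPUS = 2

# Card-wide totals quoted in the text.
TOTALS = {"shader processors": 3072, "texture units": 256, "ROPs": 64}

def per_gpu(totals: dict, gpus: int) -> dict:
    """Split card-wide unit counts evenly across identical GPUs."""
    return {name: count // gpus for name, count in totals.items()}

delta = GTX680_BASE_MHZ - GTX690_BASE_MHZ
print(f"base clock deficit: {delta} MHz ({100 * delta / GTX680_BASE_MHZ:.1f}%)")
print(per_gpu(TOTALS, GPUS))
```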

We couldn’t read the markings on the GDDR5 memory chips of our card, but we suspect the GeForce GTX 690 uses the same memory as the GeForce GTX 680, i.e. FCBGA-packaged chips from Hynix Semiconductor labeled H5GQ2H24MFR R0C. There are 2 gigabytes of memory per GPU. The card’s effective memory clock rate is 6008 MHz in 3D mode (the same as on the GTX 680) and 648 MHz in 2D mode.

GPU-Z 0.6.1, the latest version available as of our writing this, knows the GeForce GTX 690 well enough.

Cooling System: Efficiency and Noisiness

Here’s how the cooling system of the GeForce GTX 690 is supposed to work. The GPUs have nickel-plated aluminum heatsinks with copper bases designed as vapor chambers. The power system components and memory chips are cooled by a metal plate that also carries a 9-blade 83 mm impeller with PWM-based speed regulation.

The air from that fan passes through the thin heatsink fins and is exhausted through the vent grid in the mounting bracket as well as through the opening at the other end of the casing. Some of the hot air remains inside the system case, which seems to be the only downside of this cooler design. On the other hand, because the fan blows air in both directions, the two GPUs should have about the same temperature, which may be beneficial for overclocking.

We checked out the card’s temperature while running Aliens vs. Predator (2010) in five cycles at the highest settings (2560×1600, with 16x anisotropic filtering and 4x antialiasing). We used MSI Afterburner 2.2.0 and GPU-Z 0.6.0 as monitoring tools. This test was carried out with a closed system case at an ambient temperature of 23°C. We didn’t change the card’s default thermal interface.

Let’s see what temperature the card has when its fan is regulated automatically.

We’ve got interesting results here. The GPUs reached 79 and 82°C during the test, while the top speed of the fan was 2160 RPM. But the most interesting thing is that the GPU clock rate was boosted from 915 MHz not to the specified 1019 MHz but to 1071 MHz. GPU-Z and MSI Afterburner both report the same number, so it’s not an inaccuracy of our monitoring tools.

At the maximum fan speed of 2970 RPM, the GPUs had peak temperatures of 71 and 73°C. These numbers are quite normal for a dual-GPU card, and the two GPUs do have similar temperatures.

We should note that the speed of 2160 RPM in automatic mode and the maximum speed of 2970 RPM set manually are rather low even for single-GPU products, let alone dual-GPU ones. Nvidia puts an emphasis on the fact that the GeForce GTX 690 is actually 4 dBA quieter than the reference GeForce GTX 680.

Let’s check this out, though, using our own method.

We measured the level of noise using a CENTER-321 electronic noise-level meter in a closed, quiet room of about 20 square meters. The noise-level meter was set on a tripod at a distance of 15 centimeters from the graphics card, which was installed on an open testbed. The mainboard with the graphics card was placed at the edge of a desk on a foam-rubber tray.

The bottom limit of our noise-level meter is 29.8 dBA whereas the subjectively comfortable (not low, but comfortable) level of noise when measured from that distance is about 36 dBA. The speed of the graphics card’s fan was being adjusted by means of a controller that changed the supply voltage in steps of 0.5 V.

We’ve included the results of reference GeForce GTX 680 and Radeon HD 7970 cards in the next diagram. Here are the results (the vertical dotted lines mark the top fan speed in automatic regulation mode).

Well, the GeForce GTX 690 doesn’t meet our expectations as its noise graph goes higher than the GeForce GTX 680’s and merges with the Radeon HD 7970’s graph after 1650 RPM. However, thanks to the lower speed of the fan, the GTX 690 is quieter than the HD 7970 and comparable to (yet not quieter than) the GTX 680. The GeForce GTX 690’s fan worked smoothly, without vibrations or rattling or anything. The card was silent in 2D mode.

Overclocking Potential

Although the new card has two GPUs on board, Nvidia implies that it has some overclocking potential. Indeed, we overclocked its memory from 6008 to 7588 MHz (+26.3%) and added 170 MHz (+18.6%) to the base GPU clock rate.
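The overclocking percentages follow from the usual relative-gain formula, (overclocked − default) / default. The short Python sketch below, with a helper name of our own choosing, reproduces them from the clock rates given in the text.

```python
# Relative overclocking gains from default and overclocked clock rates (MHz).
def gain_pct(default: float, overclocked: float) -> float:
    """Percentage increase of the overclocked value over the default one."""
    return 100.0 * (overclocked - default) / default

memory_gain = gain_pct(6008, 7588)     # effective memory clock
core_gain = gain_pct(915, 915 + 170)   # base GPU clock, 915 -> 1085 MHz
print(f"memory +{memory_gain:.1f}%, base GPU +{core_gain:.1f}%")
# memory +26.3%, base GPU +18.6%
```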

The boost GPU frequency reached as high as 1241 MHz, as shown by the monitoring graph. The peak temperature of the GPUs in automatic fan regulation mode remained almost the same (80 and 82°C), while the maximum fan speed only increased by 90 RPM to 2250 RPM. We guess that more efficient cooling (a liquid cooling system, for example) and volt-modding may help reach even higher GPU clock rates, but our result is quite satisfying, too.

And, we’ve almost forgotten to tell you – it glows!

Power Consumption

We measured the power consumption of computer systems with different graphics cards using a multifunctional panel Zalman ZM-MFC3 which can report how much power a computer (the monitor not included) draws from a wall socket. There were two test modes: 2D (editing documents in Microsoft Word and web surfing) and 3D (the benchmark from Metro 2033: The Last Refuge at 2560×1600 with maximum settings). The GeForce GTX 690 is compared with a single GeForce GTX 680, a single slightly overclocked Radeon HD 7970 and a couple of HD 7970s in CrossFireX mode. Here are the results.

So, the system with one GeForce GTX 690 card needs less power than the system with two Radeon HD 7970s. The difference is quite large and amounts to 112 watts when the GeForce GTX 690 is not overclocked. We can also note that the GeForce GTX 690 configuration consumes about 150 watts more than the GeForce GTX 680 one. The power consumption of the GTX 690 doesn’t grow much when it is overclocked (+28 watts).

Testbed Configuration and Testing Methodology

All graphics cards were tested in a system with the following configuration:

- Mainboard: Intel Siler DX79SI (Intel X79 Express, LGA 2011, BIOS 0460 from 03/27/2012);

- CPU: Intel Core i7-3960X Extreme Edition, 3.3 GHz, 1.2 V, 6 x 256 KB L2, 15 MB L3 (Sandy Bridge-E, C1, 32 nm);

- CPU cooler: Phanteks PH-TC14PE (2 x 135 mm fans at 900 RPM);

- Thermal interface: ARCTIC MX-4;

- System memory: DDR3 4 x 4GB Mushkin Redline (Spec: 2133 MHz / 9-11-10-28 / 1.65 V);

- Graphics cards:

- NVIDIA GeForce GTX 690 2×2 GB/2×256 bit GDDR5, 915/6008 MHz and 1085/7588 MHz;

- NVIDIA GeForce GTX 680 2 GB/256 bit GDDR5, 1006/6008 MHz;

- Gigabyte Radeon HD 7970 Ultra Durable 3 GB/384 bit GDDR5, 1000/5800 MHz;

- Sapphire Radeon HD 7970 OC Dual-X 3 GB/384 bit GDDR5, 1000/5800 MHz;

- System drive: Crucial m4 256 GB SSD (SATA-III, CT256M4SSD2, BIOS v0009);

- Drive for programs and games: Western Digital VelociRaptor (300GB, SATA-II, 10000 RPM, 16MB cache, NCQ) inside Scythe Quiet Drive 3.5” HDD silencer and cooler;

- Backup drive: Samsung Ecogreen F4 HD204UI (SATA-II, 2 TB, 5400 RPM, 32 MB, NCQ);

- System case: Antec Twelve Hundred (front panel: three Noiseblocker NB-Multiframe S-Series MF12-S2 fans at 1020 RPM; back panel: two Noiseblocker NB-BlackSilentPRO PL-1 fans at 1020 RPM; top panel: standard 200 mm fan at 400 RPM);

- Control and monitoring panel: Zalman ZM-MFC3;

- Power supply: Xigmatek “No Rules Power” NRP-HC1501 1500 W (with a default 140 mm fan);

- Monitor: 30” Samsung 305T Plus.

The unique graphics cards from Gigabyte and Sapphire, which will be discussed in detail in our next review, were tested in CrossFireX configuration at identical frequencies of 1000/5800 MHz. On the one hand, this is not quite fair to the GeForce GTX 690, because AMD’s dual-GPU graphics card, the Radeon HD 7990, will most likely run at lower frequencies than the reference Radeon HD 7970 (925/5500 MHz). On the other hand, most Radeon HD 7970 and HD 7950 graphics cards currently being manufactured already ship with higher frequencies. Moreover, if today’s hero outperforms two Radeons running at higher frequencies, the victory will be even sweeter.

To reduce the dependence of graphics card performance on the overall platform speed, I overclocked our 32 nm six-core CPU to 4.625 GHz by setting the multiplier to 37x and the BCLK frequency to 125 MHz, with “Load-Line Calibration” enabled. The processor Vcore was increased to 1.46 V in the mainboard BIOS.
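The CPU core clock is simply the multiplier times the base clock (BCLK). This Python sketch reproduces the 4.625 GHz figure from the settings above and compares it with the 3.3 GHz nominal clock listed in the testbed specification; the helper name is our own.

```python
# Core clock = CPU multiplier x base clock (BCLK).
def core_clock_mhz(multiplier: int, bclk_mhz: float) -> float:
    """Return the resulting CPU core frequency in MHz."""
    return multiplier * bclk_mhz

NOMINAL_MHZ = 3300.0  # Core i7-3960X nominal clock from the testbed list

oc = core_clock_mhz(37, 125.0)
print(f"{oc / 1000:.3f} GHz, +{100 * (oc - NOMINAL_MHZ) / NOMINAL_MHZ:.1f}% over nominal")
# 4.625 GHz, +40.2% over nominal
```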

Hyper-Threading technology was enabled. The 16 GB of DDR3 system memory ran at 2 GHz with 9-11-10-28 timings and 1.65 V voltage.

The test session started on May 1, 2012. All tests were performed in Microsoft Windows 7 Ultimate x64 SP1 with all critical updates as of that date and the following drivers:

- Intel Chipset Drivers 9.3.0.1020 WHQL from 01/26/2011 for the mainboard chipset;

- DirectX End-User Runtimes libraries from November 30, 2010;

- AMD Catalyst 12.3 driver from 03/28/2012 + Catalyst Application Profiles 12.3 (CAP1) from 03/29/2012 for AMD based graphics cards;

- Nvidia GeForce 301.33 beta driver for Nvidia graphics cards.

The graphics cards were tested at two resolutions, 1920×1080 and 2560×1600, and in two image quality modes: “Quality+AF16x”, which is the default texturing quality in the drivers with 16x anisotropic filtering enabled, and “Quality+AF16x+MSAA 4(8)x”, with 16x anisotropic filtering and 4x or 8x full-screen antialiasing enabled if the average frame rate was high enough for a comfortable gaming experience. We enabled anisotropic filtering and full-screen antialiasing from the game settings. If the corresponding options were missing, we changed these settings in the Catalyst and GeForce driver control panels. We also disabled Vsync there. There were no other changes in the driver settings.

The list of games and applications used in this test session includes two popular semi-synthetic benchmarking suites, one technical demo and 15 games of various genres:

- 3DMark Vantage (DirectX 10) – version 1.0.2.1, Performance and Extreme profiles (only basic tests);

- 3DMark 2011 (DirectX 11) – version 1.0.3.0, Performance and Extreme profiles;

- Unigine Heaven Demo (DirectX 11) – version 3.0, maximum graphics quality settings, tessellation at “extreme”, AF16x, 1280×1024 resolution with MSAA and 1920×1080 with MSAA 8x;

- S.T.A.L.K.E.R.: Call of Pripyat (DirectX 11) – version 1.6.02, Enhanced Dynamic DX11 Lighting profile with all parameters manually set at their maximums, we used our custom cop03 demo on the Backwater map;

- Left 4 Dead 2 (DirectX 9) – version 2.1.0.0, maximum graphics quality settings, proprietary d98 demo (two runs) on “Death Toll” map of the “Church” level;

- Metro 2033: The Last Refuge (DirectX 10/11) – version 1.2, maximum graphics quality settings, official benchmark, “High” image quality settings; tessellation, DOF and MSAA4x disabled; AAA antialiasing enabled, two consecutive runs of the “Frontline” scene;

- Just Cause 2 (DirectX 11) – version 1.0.0.2, maximum quality settings, Background Blur and GPU Water Simulation disabled, two consecutive runs of the “Dark Tower” demo;

- Aliens vs. Predator (2010) (DirectX 11) – Texture Quality “Very High”, Shadow Quality “High”, SSAO On, two test runs in each resolution;

- Lost Planet 2 (DirectX 11) – version 1.0, maximum graphics quality settings, motion blur enabled, performance test “B” (average in all three scenes);

- StarCraft 2: Wings of Liberty (DirectX 9) – version 1.4.3, all image quality settings at “Extreme”, Physics at “Ultra”, reflections On, two 2-minute runs of our own “bench2” demo;

- Sid Meier’s Civilization V (DirectX 11) – version 1.0.1.348, maximum graphics quality settings, two runs of the “diplomatic” benchmark including the five heaviest scenes;

- Tom Clancy’s H.A.W.X. 2 (DirectX 11) – version 1.04, maximum graphics quality settings, shadows On, tessellation Off (not available on Radeon), two runs of the test scene;

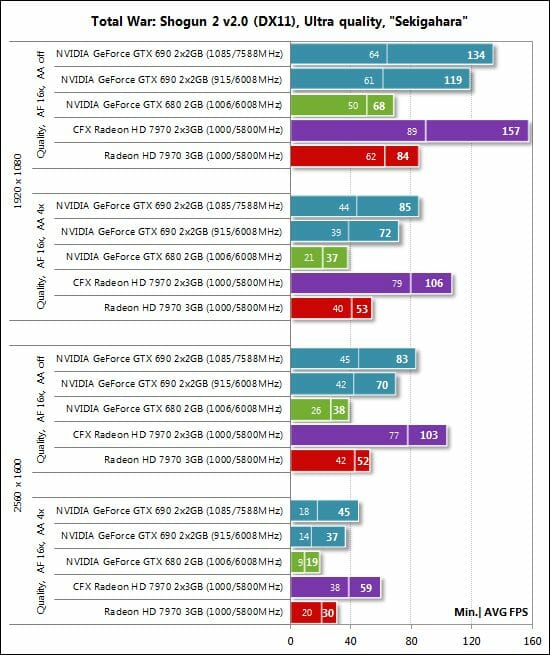

- Total War: Shogun 2 (DirectX 11) – version 2.0, built in benchmark (Sekigahara battle) at maximum graphics quality settings;

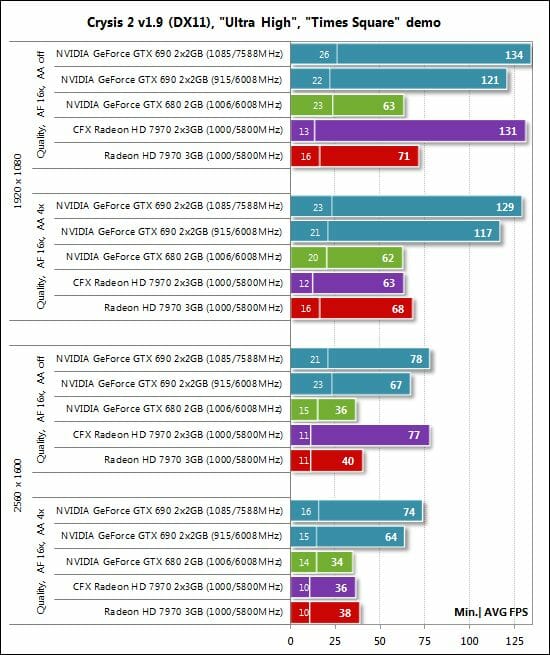

- Crysis 2 (DirectX 11) – version 1.9, we used Adrenaline Crysis 2 Benchmark Tool v.1.0.1.13. BETA with “Ultra High” graphics quality profile and activated HD textures, two runs of a demo recorded on “Times Square” level;

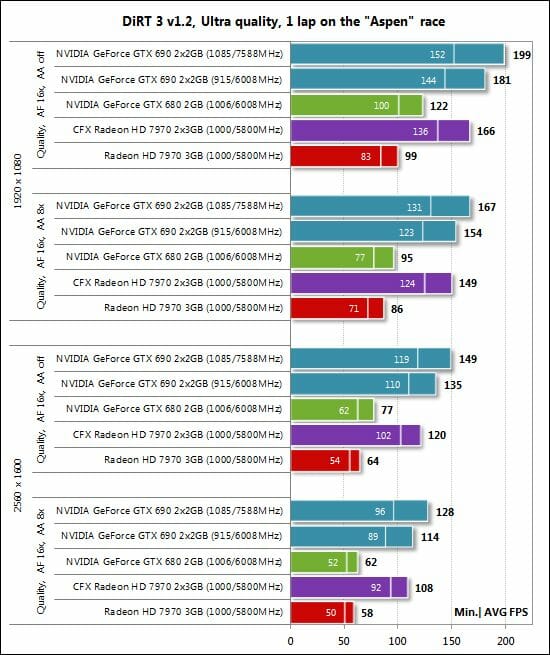

- DiRT 3 (DirectX 11) – version 1.2, built-in benchmark at maximum graphics quality settings on the “Aspen” track;

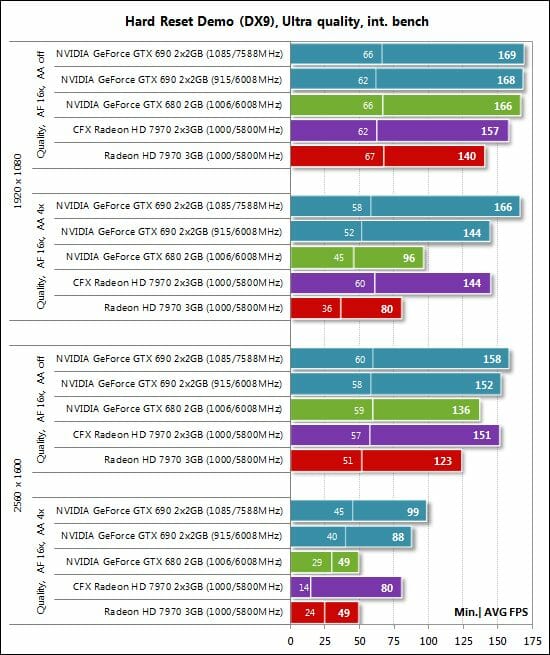

- Hard Reset Demo (DirectX 9) – benchmark built into the demo version with Ultra image quality settings, one test run;

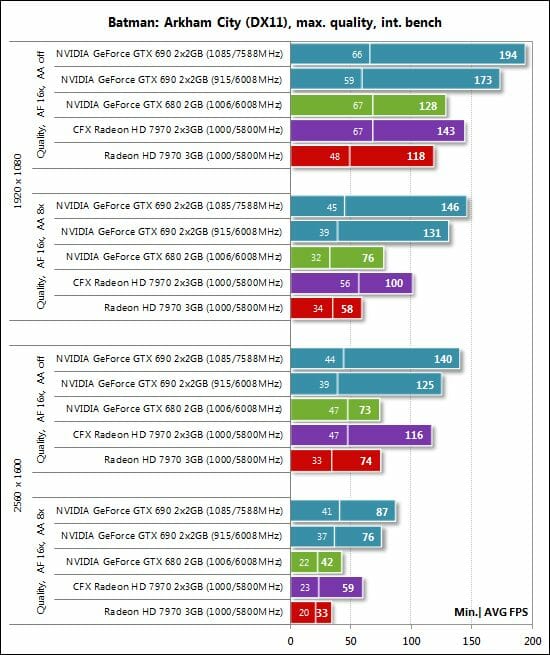

- Batman: Arkham City (DirectX 11) – version 1.2, maximum graphics quality settings, physics disabled, two sequential runs of the benchmark built into the game.

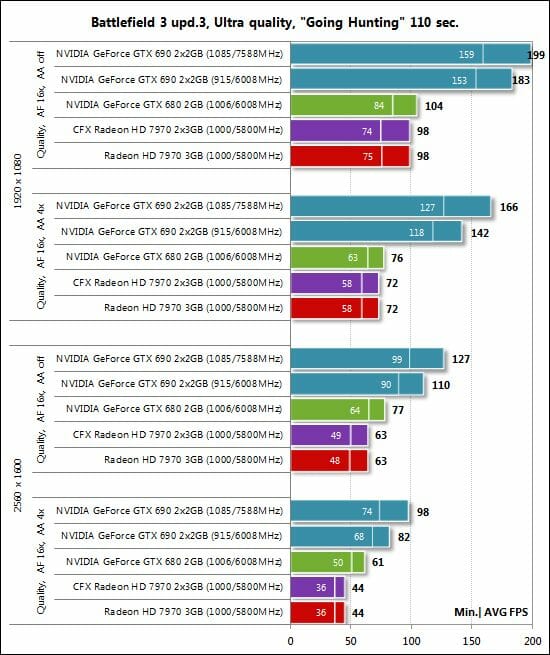

- Battlefield 3 (DirectX 11) – version 1.3, all image quality settings set to “Ultra”, two successive runs of a 110-second scripted scene from the beginning of the “Going Hunting” mission.

If the game allowed recording minimum fps readings, they were also added to the charts. We ran each game test or benchmark twice and took the best result for the diagrams, but only if the difference between the runs didn’t exceed 1%. If it did exceed 1%, we ran the test at least one more time to achieve repeatable results.
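The repeatability rule can be expressed as a small loop. The Python sketch below is our own illustration, not the script used for this review, and rests on two assumptions: the 1% difference is measured relative to the higher of two runs, and a new run may be matched against any earlier run. The `max_runs` cap is a hypothetical safeguard that the procedure above does not mention.

```python
from typing import Callable, List

def repeatable_best(run: Callable[[], float], tolerance: float = 0.01,
                    max_runs: int = 5) -> float:
    """Repeat a benchmark until two runs agree within `tolerance`,
    then return the better (higher fps) result of that pair."""
    results: List[float] = [run()]
    while len(results) < max_runs:
        latest = run()
        for earlier in results:
            # Relative difference, measured against the faster of the two runs.
            if abs(latest - earlier) / max(latest, earlier) <= tolerance:
                return max(latest, earlier)
        results.append(latest)
    raise RuntimeError(f"no two runs within {tolerance:.0%}: {results}")
```

For example, with runs of 60.0, 58.0 and 59.8 fps, the first two differ by more than 1%, so a third run is needed; it agrees with the first, and 60.0 fps goes into the diagram.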

Performance

Unigine Heaven Demo

The GeForce GTX 690 sets new performance records in the semi-synthetic 3DMarks and Unigine Heaven, outpacing the pair of slightly overclocked Radeon HD 7970s.

S.T.A.L.K.E.R.: Call of Pripyat

The new card is good in S.T.A.L.K.E.R.: Call of Pripyat although the CrossFireX tandem of two Radeon HD 7970s is somewhat faster in the heaviest graphics mode.

Left 4 Dead 2

Such top-end graphics solutions can only be compared in Left 4 Dead 2 at 2560×1600 with 8x MSAA turned on. The Radeon HD 7970s are ahead, but the game runs smoothly even on the single GeForce GTX 680.

Metro 2033: The Last Refuge

The GeForce GTX 690 is over 80% faster than the GeForce GTX 680 in this resource-consuming game. Nvidia’s dual-GPU flagship is as fast as the two overclocked Radeon HD 7970s.

Just Cause 2

Just Cause 2 isn’t a heavy application, yet it allows us to compare the new card with the GeForce GTX 680 and the AMD solutions. The GeForce GTX 690 comes out the winner, beating the GeForce GTX 680 by about 80%.

Aliens vs. Predator (2010)

Aliens vs. Predator (2010) is one of the three games on our list where the CrossFireX tandem of Radeon HD 7970 cards overclocked to 1000/5800 MHz beats the default GeForce GTX 690. The latter is fast anyway, enjoying a 90% advantage over the GeForce GTX 680.

Lost Planet 2

The GeForce GTX 690 is equal to the CrossFireX tandem of Radeon HD 7970s in Lost Planet 2 and is even 6 fps ahead in average frame rate in the heaviest graphics mode.

StarCraft II: Wings of Liberty

Everything’s clear in StarCraft II: Wings of Liberty. When not limited by the platform, the GeForce GTX 690 is superior whereas AMD’s solutions have problems with antialiasing in this game.

Sid Meier’s Civilization V

The Radeons look pretty confident in Sid Meier’s Civilization V, though:

The GeForce GTX 690 is 85% ahead of the GeForce GTX 680.

Tom Clancy’s H.A.W.X. 2

Total War: Shogun 2

It is in Total War: Shogun 2 that the Radeon HD 7970 CrossFireX configuration scores its third win over the GeForce GTX 690 which in its turn is much faster than the GeForce GTX 680.

Crysis 2

The Radeon HD 7970 CrossFireX tandem can only compete with the GeForce GTX 690 in Crysis 2 until we turn antialiasing on. When antialiasing is enabled, CrossFireX stops working, so the tandem becomes no faster than a single Radeon HD 7970. Meanwhile, the GeForce GTX 690 beats the GeForce GTX 680 by 85% and more!

DiRT 3

The GeForce GTX 690 wins DiRT 3.

Hard Reset Demo

The CPU-dependent Hard Reset can only help us compare the cards when antialiasing is turned on. The GeForce GTX 690 wins then.

Batman: Arkham City

The GeForce GTX 690 is unrivalled in Batman: Arkham City, too.

Battlefield 3

The CrossFireX tandem has some problems in Battlefield 3, so Nvidia’s new card meets no competition:

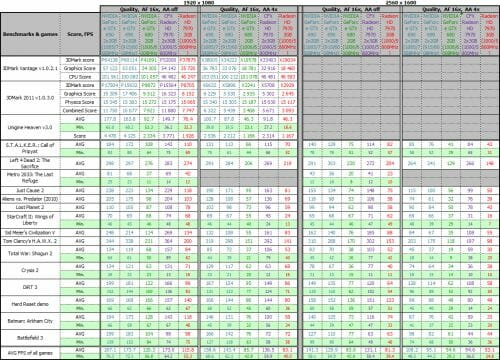

Finally, here is a table with detailed test results for your reference:

Performance Summary

First, let’s measure the difference between the GeForce GTX 680 and the dual-GPU GeForce GTX 690 at their default clock rates.

We can see the same trend throughout all the tests: the gap between the two cards grows larger at higher graphics loads. The yellow columns are almost always higher than the violet ones, which means that the GeForce GTX 690 shows its best at the highest resolutions with antialiasing enabled. The average advantage of the GeForce GTX 690 over its junior cousin amounts to 54-72% at 1920×1080 and to 62-78% at 2560×1600. But if we take the most resource-consuming applications, the gap reaches 90-95%!
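For readers who want to reproduce such summary figures from the detailed results table, the advantage of one card over another is the ratio of their frame rates minus one, expressed in percent, and the summary is an average of the per-test values. The Python sketch below assumes that definition and a plain arithmetic mean; the frame rates in it are made-up placeholders, not results from this review.

```python
from statistics import mean

def advantage_pct(fps_candidate: float, fps_baseline: float) -> float:
    """How much faster the candidate card is than the baseline, in percent."""
    return 100.0 * (fps_candidate / fps_baseline - 1.0)

# Placeholder (fps_gtx690, fps_gtx680) pairs for illustration only.
pairs = [(95.0, 50.0), (81.0, 50.0), (60.0, 40.0)]
per_test = [advantage_pct(a, b) for a, b in pairs]
print([round(p, 1) for p in per_test])  # [90.0, 62.0, 50.0]
print(round(mean(per_test), 1))         # 67.3
```

Note that a 95% advantage means the dual-GPU card delivers almost twice the frame rate of the baseline.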

The next pair of summary diagrams compare the default GeForce GTX 690 with the CrossFireX tandem of Radeon HD 7970s clocked at 1000/5800 MHz.

We’ve got two multi-GPU technologies competing here. We’ll discuss the efficiency of CrossFireX in more detail in our upcoming report, but we can note here that the GeForce GTX 690 easily wins where CrossFireX doesn’t work or works inefficiently (and there are quite a lot of such applications on our list, including Battlefield 3, Crysis 2 at the high-quality settings and Batman: Arkham City). The slightly overclocked CrossFireX tandem is ahead in three games: Aliens vs. Predator (2010), Sid Meier’s Civilization V and Total War: Shogun 2 (the GeForce series has become slower in the latter game with the recent drivers). In the rest of the games and tests the two solutions deliver similar performance. If we calculate the average across all the tests (which, we must admit, is not an entirely correct way of comparison), the default GeForce GTX 690 is ahead of the two Radeon HD 7970s overclocked to 1000/5800 MHz by 5-12% at 1920×1080 and by 1-15% at 2560×1600.

The AMD Radeon HD 79xx series features high overclocking potential and a linear correlation between performance and clock rates. Today we’ve overclocked our GeForce GTX 690 from 915/6008 to 1085/7588 MHz (+18.6/26.3% without frequency boost), so let’s see what performance benefits this overclocking provides.

Comparing the results at 1920×1080 and 2560×1600, we can see a higher performance growth at the higher resolution. The overclocked GeForce GTX 690 is faster by 8-12% at 1920×1080 and by 11-15% at 2560×1600. The heavier the test, the bigger the performance growth is. The Radeon HD 79xx series has a stronger correlation between performance and frequency at overclocking, but the GeForce GTX 690 is a multi-GPU solution as opposed to the single-GPU Radeon HD 7970.

Conclusion

The Nvidia GeForce GTX 690 2×2 GB is the world’s fastest graphics accelerator, being as much as 95% faster than today’s flagship single-GPU Nvidia product, the GeForce GTX 680 2 GB. The new Nvidia creation boasts mind-blowing performance, so high that it simply makes no sense to invest in this card unless your monitor supports resolutions above 1920×1080. At 2560×1600, which is currently the highest resolution possible on single-monitor configurations, the GeForce GTX 690 will deliver a comfortable gaming experience in any contemporary game, even with antialiasing enabled. And this performance won’t be excessive in such games as Metro 2033: The Last Refuge or Total War: Shogun 2.

I would like to specifically stress the superb energy efficiency of the new GeForce GTX 690. As we have just seen, a system equipped with one dual-GPU Nvidia graphics accelerator consumes about 112 W less under peak load than a system with a pair of slightly overclocked AMD Radeon HD 7970 graphics cards. I doubt that the owners of graphics cards like these ever pay attention to their power bills, but Nvidia’s advantage here is beyond obvious. The moderate noise level will also be a pleasant bonus for owners of these graphics cards. Of course, we were truly impressed with the unique packaging and the enclosed prybar: Nvidia’s out-of-the-box PR move has definitely been extremely successful. Keep up such creativity, guys!

Now we are looking forward to the dual-GPU response from AMD. And from now on the ultimate dream-machine of every dedicated and wealthy gamer out there will look something like that.