HDMI, DisplayPort, VGA, and DVI: What You Need to Know

Display connectors can be a headache even when you know which one you need. Standards have changed considerably since the early 2000s, but many people still own older TVs, monitors, or laptops, so connector and cable mismatches remain common.

A laptop might have a VGA output while the monitor has only a DVI input. That alone is a problem, because the two do not connect without an adapter. The most common connectors on devices with display inputs or outputs are VGA, DVI, HDMI, and DisplayPort.

What follows is a brief history of these four connectors and standards, along with the essentials you should know about each.

VGA – The First of Many

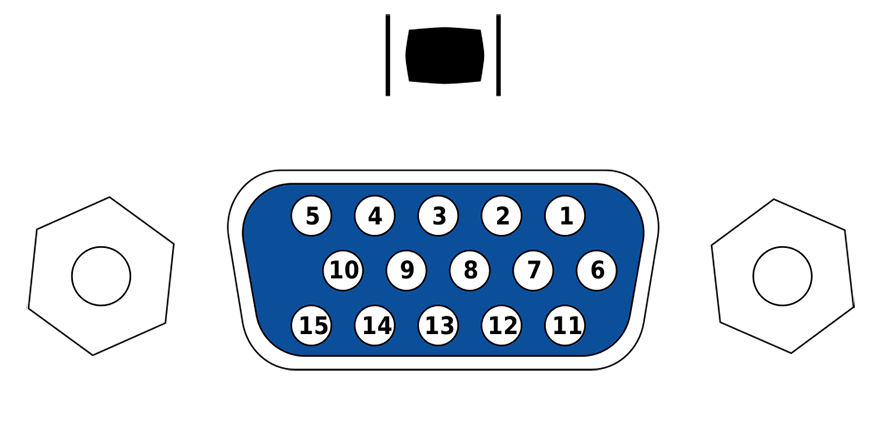

The VGA plug with its iconic screws on both sides.

VGA stands for Video Graphics Array and was a game changer in 1987. It was first implemented in the IBM Personal System/2, or PS/2 for short, where it superseded the Enhanced Graphics Adapter (EGA).

It used a D-subminiature (D-sub) connector, so named because these connectors were among the smallest available when they were introduced. The most widely used resolution from VGA's inception through the mid-1990s was 640×480, often dubbed the VGA resolution.

The D-sub connector typically had 15 pins and was used throughout VGA's lifespan, from its inception into the 2010s and beyond. Some motherboards still offer a VGA output.

The VGA connector was often blue and had thumbscrews on both sides to hold it in place.

VGA is Analog

One of the first things to note about VGA is that it carries an analog signal, originally meant for CRT monitors. LCD monitors later supported VGA as well, despite its age. Because the image is generated digitally, graphics cards with a VGA socket include a digital-to-analog converter (DAC).

Connecting VGA to any of the three more modern interfaces requires an adapter. Depending on the interface, the adapter may need to be active, while in other cases a passive one suffices.

SVGA

Super VGA (SVGA) emerged in 1988, when NEC Home Electronics announced the creation of a standards organization for computer displays, now known as VESA. Its main initial purpose was the development and standardization of SVGA.

SVGA would become the most widely adopted display standard throughout the 1990s, despite IBM pushing VGA and XGA. IBM’s standards were proprietary and thus required licensing, while SVGA was more accessible.

Feature-wise, it raised the resolution to the familiar 800×600, a solid increase over 640×480. In 1989, VESA added a display driver standard, the VESA BIOS Extensions (VBE), which gave graphics cards a common ground.

Digital Visual Interface (DVI)

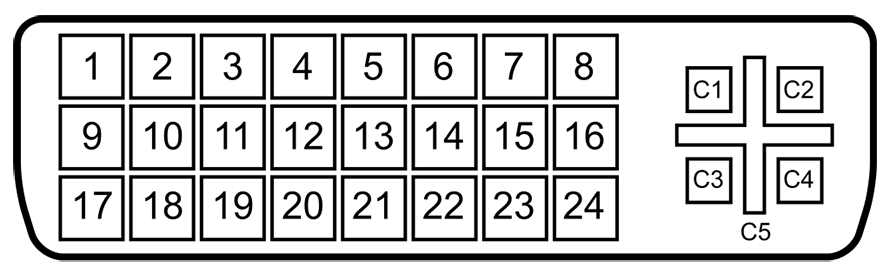

The full dual link DVI-I connector with its 24 pins and 5 analog pins.

DVI is a video interface developed primarily to standardize the transmission of uncompressed digital video between displays, graphics cards, and other video devices. Created by the Digital Display Working Group in 1999, it was by far the most popular digital connector and interface of the 2000s.

Besides keeping pace with the growth of digital TVs, monitors, and graphics cards, it also accommodated analog devices. There are three types of DVI connector:

- DVI-A – where A stands for analog. It sends only analog signals.

- DVI-I – where I stands for integrated. It is able to send both analog and digital signals.

- DVI-D – where D stands for digital. It sends only digital signals.

DVI-I was the preferred connector because of its versatility. The others were fine for specific devices and use cases. With DVI-A or DVI-D, an active converter was necessary to cross between analog and digital, for example to drive an analog display from a DVI-D output.

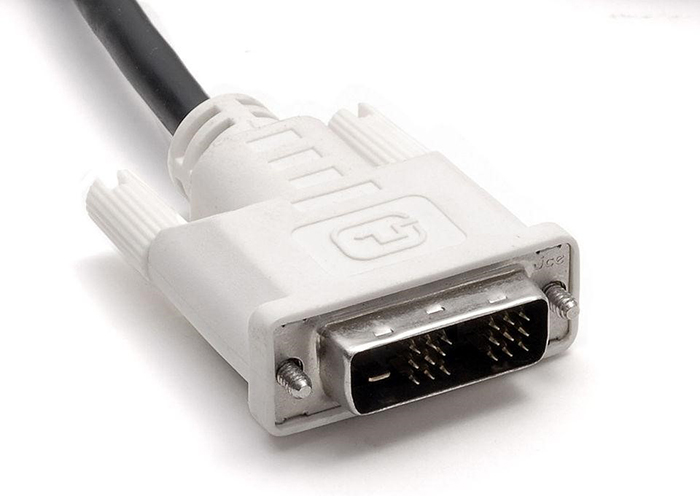

A DVI single link connector.

Breakthroughs

Beyond compatibility, DVI brought higher resolutions and refresh rates. DVI uses transition-minimized differential signaling (TMDS), and a single link supports resolutions up to 1920×1200 at 60 Hz.

Dual-link DVI uses the full 24 pins and supports up to 2560×1600 at 60 Hz. It also handles modes in between, such as Full HD at 144 Hz, which made early esports titles much more interesting. Despite that, many affordable monitors of the time still shipped with VGA connectors.
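The link limits above come down to pixel-clock arithmetic. As a rough sketch, the snippet below multiplies the total pixels per frame (visible plus blanking) by the refresh rate and compares the result with the 165 MHz single-link TMDS clock limit. The timing totals are commonly quoted reduced-blanking values and are assumptions here, as is treating 2 × 165 MHz as a nominal dual-link ceiling. The function names are hypothetical.

```python
SINGLE_LINK_MAX_MHZ = 165.0                      # single-link DVI TMDS clock limit
DUAL_LINK_NOMINAL_MHZ = 2 * SINGLE_LINK_MAX_MHZ  # assumption: nominal ceiling only


def pixel_clock_mhz(h_total: int, v_total: int, refresh_hz: float) -> float:
    """Pixel clock in MHz for a mode, given totals that include blanking."""
    return h_total * v_total * refresh_hz / 1e6


def dvi_link_needed(clock_mhz: float) -> str:
    """Classify a pixel clock as fitting single link, dual link, or neither."""
    if clock_mhz <= SINGLE_LINK_MAX_MHZ:
        return "single"
    if clock_mhz <= DUAL_LINK_NOMINAL_MHZ:
        return "dual"
    return "exceeds nominal dual link"


# Assumed reduced-blanking totals (h_total, v_total, refresh) for the two
# modes mentioned in the text; real monitors may use different timings.
MODES = {
    "1920x1200@60": (2080, 1235, 60),
    "2560x1600@60": (2720, 1646, 60),
}

for name, (h_total, v_total, hz) in MODES.items():
    clock = pixel_clock_mhz(h_total, v_total, hz)
    print(f"{name}: {clock:.1f} MHz -> {dvi_link_needed(clock)} link")
```

Under these assumed timings, 1920×1200 at 60 Hz lands just under the single-link limit, while 2560×1600 at 60 Hz needs the second link. Blanking matters: with wider blanking intervals the same visible resolution needs a higher pixel clock.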

Cable length was still an issue, though it was easier to mitigate because digital signals are less susceptible to electrical noise than analog ones. For longer cable runs, signal repeaters are recommended to regenerate the TMDS signal.

High-Definition Multimedia Interface (HDMI)

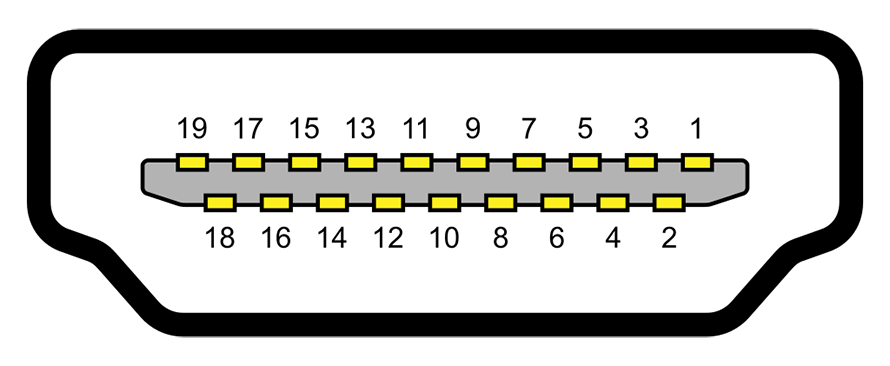

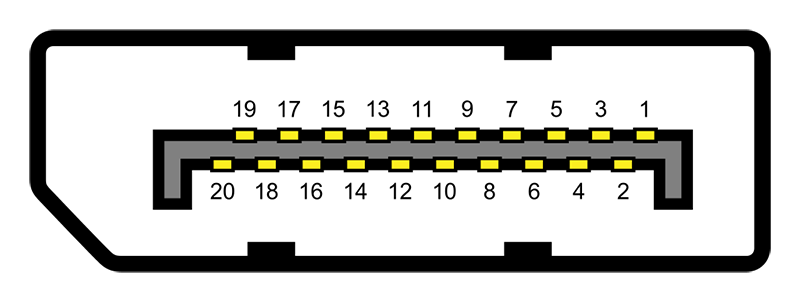

An HDMI type A connector pinout, with its symmetrical layout.

HDMI was created in 2002 and first appeared on commercial devices in 2004, with devices selling by the tens of millions by 2007. Part of its appeal was simplicity, along with support for High-bandwidth Digital Content Protection (HDCP).

Higher resolutions, bandwidth, and refresh rates made HDMI a desirable interface. DVI-D and HDMI are interchangeable, needing nothing more than a passive adapter between the connectors.

HDMI still relies on TMDS to carry its interleaved data, but it also carries other information, such as Ethernet data and Consumer Electronics Control (CEC), which lets a single remote control operate multiple HDMI-connected devices.

HDMI cables have connectors of varying sizes, typically type A, C, D, or E. Type B was a proposed dual-link HDMI connector, larger than type A, but it has never seen the light of day on consumer devices.

A type A, type C (mini), and type D (micro) HDMI connector.

DisplayPort

The DisplayPort connector is different from HDMI and has notable asymmetry.

After the failed Plug & Display and Digital Flat Panel standards, VESA designed DisplayPort in 2006, and devices were on shelves in 2008. It is the first video interface to transmit data in micro packets, as Ethernet, USB, and PCIe do.

This makes it incompatible with earlier standards, though dual-mode operation has been supported since the first iteration, allowing a DisplayPort output to send TMDS signals instead. An adapter is still needed to match the connectors.

Dual-link DVI and VGA require active adapters, which are powered by the DisplayPort connector. Single-link DVI and HDMI require only passive connector adapters.
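The adapter rules described in this article can be collected into a small lookup table. The sketch below is illustrative only: it covers just the pairings mentioned in the text, assumes the DisplayPort source supports dual-mode, and uses hypothetical names (`Adapter`, `ADAPTERS`, `adapter_for`).

```python
from enum import Enum
from typing import Optional


class Adapter(Enum):
    PASSIVE = "passive"  # only rewires the connector
    ACTIVE = "active"    # contains conversion electronics


# (source, sink) -> adapter type, limited to pairings described in the article.
# DisplayPort entries assume a dual-mode source.
ADAPTERS = {
    ("DisplayPort", "HDMI"): Adapter.PASSIVE,
    ("DisplayPort", "DVI single link"): Adapter.PASSIVE,
    ("DisplayPort", "DVI dual link"): Adapter.ACTIVE,
    ("DisplayPort", "VGA"): Adapter.ACTIVE,
    ("DVI-D", "HDMI"): Adapter.PASSIVE,
    ("HDMI", "DVI-D"): Adapter.PASSIVE,
}


def adapter_for(source: str, sink: str) -> Optional[Adapter]:
    """Return the adapter type for a pairing, or None if it is not in the table."""
    return ADAPTERS.get((source, sink))


print(adapter_for("DisplayPort", "VGA"))   # Adapter.ACTIVE
print(adapter_for("DVI-D", "HDMI"))        # Adapter.PASSIVE
print(adapter_for("VGA", "DisplayPort"))   # None (not covered here)
```

A result of `None` means only that the pairing is not listed, not that no adapter exists for it.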

Today, DisplayPort is most widely adopted on computer monitors, especially high refresh rate monitors, while its competitor, HDMI, is mostly found on TVs.

A standard DisplayPort cable with its locking mechanism, designed to keep the connector secure.

Conclusion

Computer display standards have changed and evolved over the years. From VGA through DVI to today's HDMI and DisplayPort, we have seen the technology change for the better.

Understanding the interfaces, their flaws and advantages, makes it easy to find the right one for your next monitor, TV, or even graphics card. A better understanding of the standards also helps us avoid mistakes when purchasing adapters.

There are subtle differences between the standards, not to mention glaring ones. These differences and details, however, are a story for another time.
