GeForce GTX 680 2 GB on “Kepler” Graphics Architecture

At last, the patience of graphics enthusiasts has been rewarded: Nvidia has finally rolled out its response to AMD’s “Tahiti”. Today we are proud to present the new GK104 graphics processor based on the “Kepler” architecture, along with the first graphics card built around it.

One year and four months ago Nvidia released the GeForce GTX 580, its top-of-the-line single-GPU graphics card based on the Fermi architecture. That is a very long time in the GPU market, so users have been eagerly awaiting Nvidia’s next GPU, built on the new Kepler architecture and a 28nm manufacturing process, analyzing every rumor and every piece of leaked data they could find. Adding to the tension, Nvidia’s competitor AMD successfully launched its own top-end Radeon HD 7970 in late 2011, and it turned out to be the fastest single-GPU graphics card, with enormous overclocking potential.

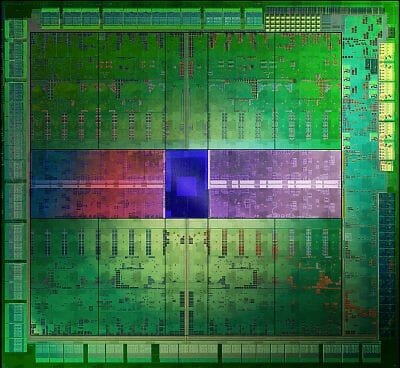

Well, the wait is over now. The GK104 processor and the Nvidia GeForce GTX 680 graphics card have finally arrived:

Interestingly, the GK104 is not positioned as a high-end solution, even though Nvidia claims the GeForce GTX 680 to be the fastest and most efficient graphics card available right now. Nvidia is going to roll out faster solutions later this year; for now, the 28nm GK104 and the GeForce GTX 680 are promoted as products that deliver the highest performance per watt thanks to the thinner manufacturing process and the Kepler architecture. Let’s check out the Kepler’s capabilities right now.

GPU Architecture and Positioning

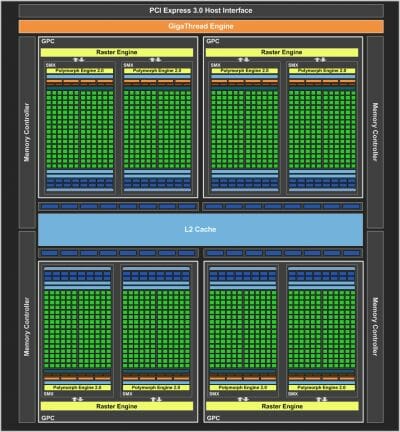

Faster and more efficient – that’s how Nvidia promotes its Kepler architecture. But how did it intend to increase the efficiency and performance of its new GPUs? With DirectX 11 still the newest API for 3D applications, both GPU developers have focused on polishing their existing DirectX 11 implementations. As you already know, AMD replaced its VLIW architecture with GCN, which is more GPGPU-oriented. Nvidia’s previous architecture, codenamed Fermi, was originally developed for DirectX 11 and GPGPU and had no obvious weak spots. How could it be optimized further? Towards higher performance and energy efficiency, of course. So, here is the Kepler architecture:

The Kepler is similar to the Fermi architecture in its general topology and structure, so we will just cover the changes here.

Like the previous generation of Nvidia’s GPUs, the GK104 contains a GigaThread Engine, memory controllers, an L2 cache for data and instructions, raster back-ends, and Graphics Processing Clusters (GPCs) responsible for computing and texture-mapping operations. The GeForce GTX 680 has four GPCs, each comprising a Raster Engine and several stream multiprocessors, which are now called SMXs rather than SMs as in the Tesla and Fermi architectures. In the GK104, each GPC contains two SMXs, as opposed to four SMs per GPC in the Fermi.

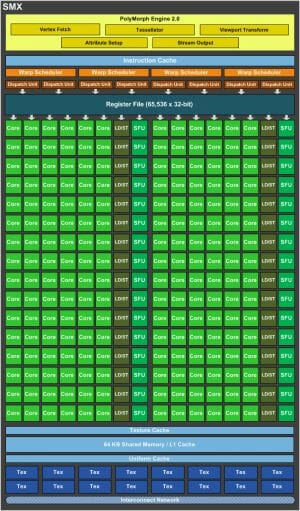

Being the basic building block of the Kepler architecture, an SMX contains:

- One geometry processing unit called PolyMorph Engine 2.0 which is supposed to be twice as fast as its Fermi counterpart;

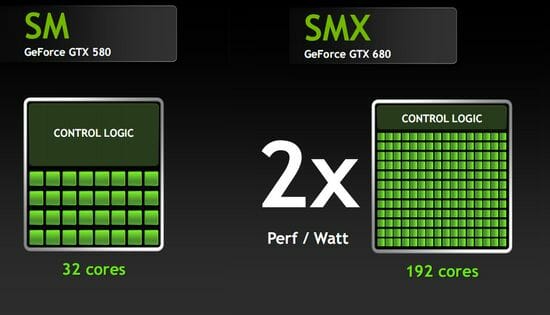

- 192 CUDA cores, 32 special function units and 32 load & store units working at the base GPU frequency (rather than at a double frequency as in the Fermi);

- 16 texture-mapping units (twice as many as in the Fermi);

- 64KB L1 cache;

- A register file for 65536 x 32-bit entries, which is twice as large as in the Fermi;

- 8 dispatch units and 4 warp schedulers (twice as many as in the Fermi).
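The per-GPC and per-SMX figures above multiply out to the chip-level totals. The sketch below assumes the 4-GPC by 2-SMX layout described above and the usual convention that one fused multiply-add counts as two floating-point operations; it derives the GTX 680's unit counts and peak single-precision rate at the 1006 MHz base clock. The GTX 580 figures (512 CUDA cores at a 1544 MHz shader clock) are included only for comparison.

```python
# Chip-level totals for the GK104 as configured in the GeForce GTX 680,
# derived from the per-GPC and per-SMX figures listed above.
GPCS = 4
SMX_PER_GPC = 2
CORES_PER_SMX = 192
TMUS_PER_SMX = 16
POLYMORPH_PER_SMX = 1


def peak_fp32_gflops(cuda_cores: int, shader_clock_mhz: float) -> float:
    """Peak FP32 rate, counting one fused multiply-add as 2 FLOPs per core per clock."""
    return cuda_cores * 2 * shader_clock_mhz / 1000.0


smx_count = GPCS * SMX_PER_GPC                     # SMX units on the chip
cuda_cores = smx_count * CORES_PER_SMX             # total CUDA cores
tmus = smx_count * TMUS_PER_SMX                    # total texture-mapping units
polymorph_engines = smx_count * POLYMORPH_PER_SMX  # one PolyMorph Engine 2.0 per SMX

gtx680_gflops = peak_fp32_gflops(cuda_cores, 1006)  # Kepler: cores run at the base clock
gtx580_gflops = peak_fp32_gflops(512, 1544)         # Fermi: cores run at the doubled "hot" clock

print(f"SMX: {smx_count}, cores: {cuda_cores}, TMUs: {tmus}, PolyMorph: {polymorph_engines}")
print(f"GTX 680: {gtx680_gflops:.0f} GFLOPS, GTX 580: {gtx580_gflops:.0f} GFLOPS")
```

Because the Kepler cores run at the base clock rather than a doubled shader clock, three times as many cores yield roughly twice the GTX 580's theoretical peak, not three times.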

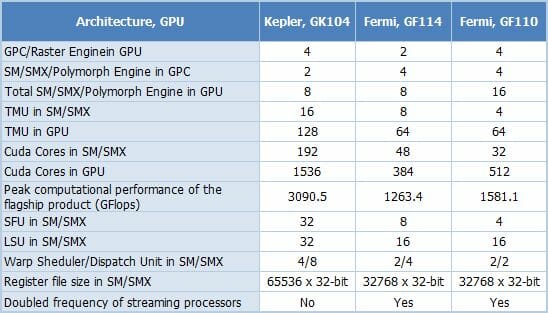

For easier reading, the next table summarizes the architectural differences between the GK104, GF114 and GF110:

Note that the GK104 is structurally closer to the GF114 than to the GF110. This seems to be another indication that the GK104 is only a temporary flagship and will eventually be replaced by a GK110 featuring additional GPGPU optimizations.

Thanks to the new SMX design, the GK104 has many more execution units and surpasses the GeForce GTX 580 in nearly every parameter. It has half as many geometry-processing units as the latter, but each of those units is twice as fast. And the transistor count has increased by only 18%: from 3 billion in the GeForce GTX 580 to 3.54 billion in the GeForce GTX 680.

Another goal Nvidia pursued with the Kepler was to make the new architecture more energy efficient, and most of the architectural changes were made with this purpose in mind. The power consumption of both the stream processors and the control logic has been reduced. The double clock rate of stream processors in previous GPUs required various buffers and queues, but now that the whole GPU runs at a single frequency, these auxiliary components are no longer needed and their transistors can be used to implement more execution units. Since the clock generators and stream processors need less power at the lower frequency, total power consumption goes down while peak theoretical performance stays the same. Nvidia claims that the new stream processor design is twice as energy efficient as the Fermi’s.
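The trade-off described above can be illustrated with the textbook approximation for dynamic CMOS power, P ≈ C·V²·f, with the switched capacitance C taken as proportional to the number of active units. The sketch below is an illustrative model with normalized, hypothetical numbers, not Nvidia's data: it compares N units at a doubled shader clock with 2N units at the base clock. Throughput is identical; in this simple model power only drops once the lower clock allows a lower voltage (the removed buffers and queues mentioned above would save more on top of that, but are not modeled).

```python
# Illustrative model (normalized, hypothetical numbers): dynamic power ~ units * V^2 * f.


def throughput(units: int, clock: float) -> float:
    """Operations per unit time, assuming one operation per unit per clock."""
    return units * clock


def dynamic_power(units: int, voltage: float, clock: float) -> float:
    """Textbook dynamic-power approximation, capacitance proportional to unit count."""
    return units * voltage ** 2 * clock


# Fermi-style: N units at a doubled shader clock.
fermi_units, fermi_clock, fermi_v = 1, 2.0, 1.0
# Kepler-style: 2N units at the base clock; the voltage is a free (hypothetical) parameter.
kepler_units, kepler_clock = 2, 1.0

for kepler_v in (1.0, 0.9):
    ratio = dynamic_power(kepler_units, kepler_v, kepler_clock) / dynamic_power(
        fermi_units, fermi_v, fermi_clock
    )
    same_perf = throughput(kepler_units, kepler_clock) == throughput(fermi_units, fermi_clock)
    print(f"V={kepler_v}: same throughput={same_perf}, power ratio={ratio:.2f}")
```

Because voltage enters squared, even a modest voltage reduction enabled by the lower clock has a noticeable effect on power at equal throughput.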

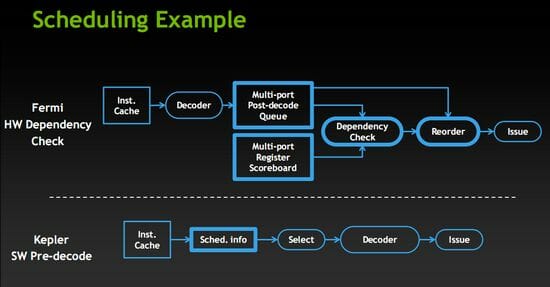

The control logic has been modified as well. Much of the Fermi’s hardware logic for instruction scheduling and dependency checking has been replaced with a software approach in which the compiler supplies scheduling information in advance, which consumes less power than doing the same work in hardware at run time. This has helped Nvidia boost performance and reduce power consumption even further. The amount of control logic per stream processor has decreased, contributing to power saving. Considering that most of a GPU’s transistors go to execution units and control logic, these changes make the Kepler architecture far more energy efficient than its predecessor.

GPU Boost

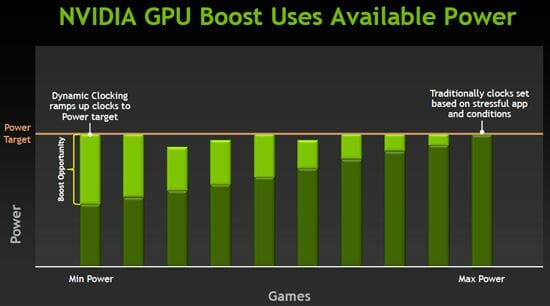

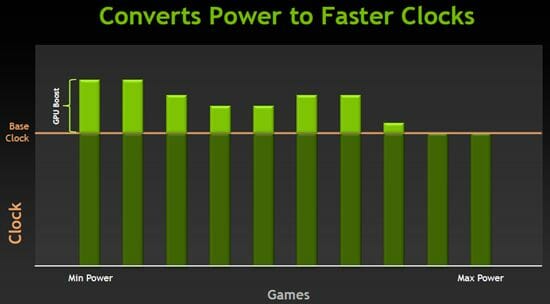

GPU frequency management is an important innovation in the Kepler architecture. GPUs normally run at a fixed clock rate that is supposed to be safe under any usage scenario. This clock rate is usually chosen with a large safety margin, so that the graphics card stays within permissible power consumption limits even under the heaviest load. But this means that in ordinary applications the graphics card has a large reserve in terms of power consumption and heat dissipation.

Nvidia suggests converting this reserve into a higher GPU clock rate and, consequently, higher performance in such applications. So, the Kepler has a special subunit that monitors key GPU parameters such as clock rate, power consumption, temperature and computing load, and adjusts the graphics card’s frequencies and voltages based on that data. The technology is called GPU Boost.

For example, the base clock rate of the GeForce GTX 680 is 1006 MHz, and the card is guaranteed to run at least at that frequency under any 3D load. However, in most 3D applications the clock rate is automatically increased to 1058-1100 MHz, i.e. by about 5-10%, and in some cases even higher. This technology is thus an efficient way of extracting all the performance the graphics card can deliver without exceeding the permissible power consumption limits.
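The review does not describe GPU Boost's actual control algorithm. The sketch below only illustrates the general idea: a feedback loop that raises the clock in small steps while measured power and temperature stay under their limits, and backs off (never below the guaranteed 1006 MHz base clock) when they do not. The step size, ceiling, power target and temperature limit are all hypothetical parameters chosen for illustration.

```python
from dataclasses import dataclass

BASE_CLOCK_MHZ = 1006   # guaranteed base clock of the GeForce GTX 680 (from the review)
STEP_MHZ = 13           # hypothetical adjustment step
MAX_CLOCK_MHZ = 1110    # hypothetical ceiling for this sketch


@dataclass
class Telemetry:
    power_w: float   # measured board power
    temp_c: float    # measured GPU temperature


def next_clock(current_mhz: int, t: Telemetry,
               power_target_w: float = 170.0, temp_limit_c: float = 90.0) -> int:
    """One iteration of a simplified, hypothetical boost controller."""
    if t.power_w > power_target_w or t.temp_c > temp_limit_c:
        # Over budget: back off, but never drop below the guaranteed base clock.
        return max(BASE_CLOCK_MHZ, current_mhz - STEP_MHZ)
    # Headroom available: step up until the ceiling is reached.
    return min(MAX_CLOCK_MHZ, current_mhz + STEP_MHZ)


clock = BASE_CLOCK_MHZ
for sample in [Telemetry(150, 70), Telemetry(160, 72), Telemetry(175, 74), Telemetry(165, 74)]:
    clock = next_clock(clock, sample)
    print(clock, f"(+{(clock / BASE_CLOCK_MHZ - 1) * 100:.1f}% over base)")
```

The key property the sketch shares with the behavior described above is the asymmetry: the clock may float above the base value whenever there is headroom, but the base clock is a floor that load alone never pushes the card below.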

Adaptive V-Sync

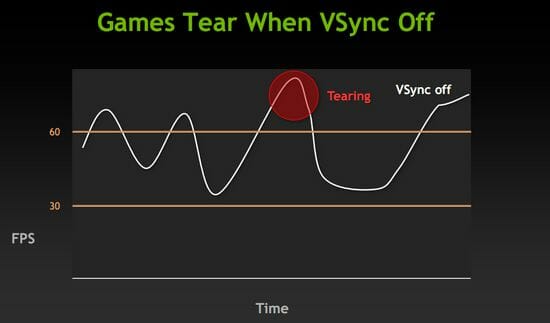

If you play modern video games, you know that the difference between the lowest and highest frame rate can be very large. If the frame rate is much higher than the monitor’s refresh rate, which is usually 60 Hz and corresponds to 60 fps, you can see image tearing: the upper and lower parts of the image appear to be torn apart because they come from different frames.

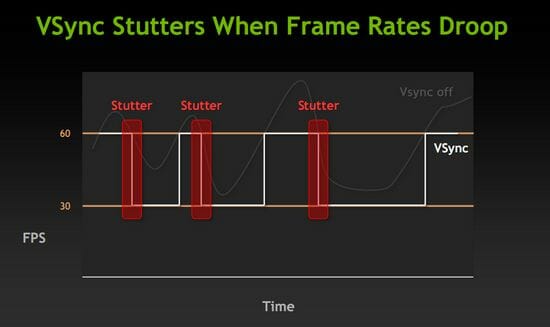

This problem can be eliminated by means of vertical synchronization (V-Sync). However, that solution causes another problem. If the frame rate is lower than the monitor’s refresh rate, enabling V-Sync drops the effective frame rate to an integer fraction of 60 Hz, i.e. 30, 20 or even 15 fps. That makes for uncomfortable gameplay.

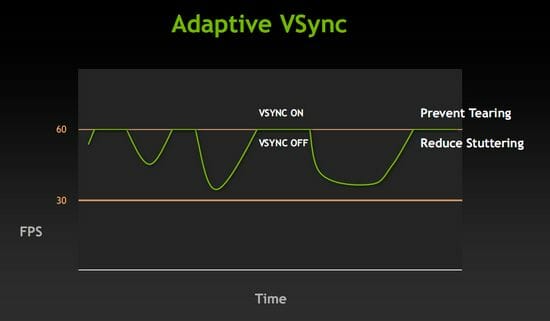

So, the point of adaptive V-Sync is in turning on vertical synchronization when it’s necessary (i.e. when the game’s frame rate is higher than the monitor’s refresh rate) and turning it off when it’s not necessary (for example, if the game’s frame rate is 50 fps, i.e. below the monitor’s refresh rate, vertical synchronization is turned off to prevent the frame rate from plummeting to 30 fps).
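The behavior described in the last few paragraphs can be modeled in a few lines. The sketch below is a simplified model that assumes double buffering and a constant frame rendering time: with classic V-Sync, a frame that misses a refresh waits for the next one, so the displayed rate snaps to the refresh rate divided by a whole number, whereas the adaptive policy simply switches synchronization off below the refresh rate. The function names are hypothetical.

```python
import math
from typing import Tuple


def vsync_fps(render_fps: float, refresh_hz: int = 60) -> float:
    """Displayed frame rate with classic double-buffered V-Sync (simplified model)."""
    if render_fps >= refresh_hz:
        return float(refresh_hz)
    # A frame that is not ready at a refresh waits for the next one, so each
    # frame occupies a whole number of refresh intervals: 60/2, 60/3, 60/4, ...
    return refresh_hz / math.ceil(refresh_hz / render_fps)


def adaptive_vsync(render_fps: float, refresh_hz: int = 60) -> Tuple[bool, float]:
    """Adaptive V-Sync policy: synchronize only when the game outruns the display."""
    if render_fps >= refresh_hz:
        return True, float(refresh_hz)   # V-Sync on: no tearing, capped at the refresh rate
    return False, float(render_fps)      # V-Sync off: no drop to 30/20/15 fps


for fps in (90, 50, 25):
    print(fps, vsync_fps(fps), adaptive_vsync(fps))
```

With a game running at 50 fps, the classic model shows 30 fps while the adaptive policy keeps all 50, which is exactly the case described above.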

New Antialiasing Algorithms – FXAA and TXAA

Fast Approximate Anti-Aliasing, or FXAA, is a post-processing shader that implements an antialiasing algorithm which works faster than classic MSAA and consumes fewer resources while generally delivering good antialiasing quality. The only problem was that it had to be integrated into the game engine. With the new generation of Nvidia cards, however, this algorithm is part of the graphics driver and can be enabled in most games, even if they do not explicitly support it.
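FXAA's first step is to find pixels that lie on a visible edge by comparing the luminance of a pixel with that of its neighbors; only those pixels are then blended along the edge direction. The sketch below implements just that detection step on a small RGB image stored as nested lists, using Rec. 601 luma weights. The default thresholds mirror the values commonly quoted for FXAA 3.11's quality preset, but treat them, the simplified four-neighbor sampling and the function names as assumptions rather than Nvidia's driver implementation.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]   # RGB components in [0, 1]


def luma(p: Pixel) -> float:
    """Rec. 601 luma weights."""
    r, g, b = p
    return 0.299 * r + 0.587 * g + 0.114 * b


def is_edge_pixel(img: List[List[Pixel]], x: int, y: int,
                  edge_threshold: float = 0.166,
                  edge_threshold_min: float = 0.0833) -> bool:
    """Local-contrast test in the spirit of FXAA's early exit (simplified sketch)."""
    h, w = len(img), len(img[0])

    def l(xx: int, yy: int) -> float:
        # Clamp coordinates so border pixels reuse their nearest neighbor.
        xx = min(max(xx, 0), w - 1)
        yy = min(max(yy, 0), h - 1)
        return luma(img[yy][xx])

    # Center plus north, south, west and east neighbors.
    samples = [l(x, y), l(x, y - 1), l(x, y + 1), l(x - 1, y), l(x + 1, y)]
    luma_max, luma_min = max(samples), min(samples)
    contrast = luma_max - luma_min
    # Skip low-contrast pixels; the threshold scales with local brightness.
    return contrast >= max(edge_threshold_min, luma_max * edge_threshold)


BLACK, WHITE = (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)
image = [[BLACK, BLACK, WHITE, WHITE] for _ in range(3)]
print([is_edge_pixel(image, x, 1) for x in range(4)])
```

Only the two pixels on either side of the black-to-white boundary pass the test, so the later (not shown) blending pass would leave flat areas untouched, which is a large part of why this approach is cheap.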

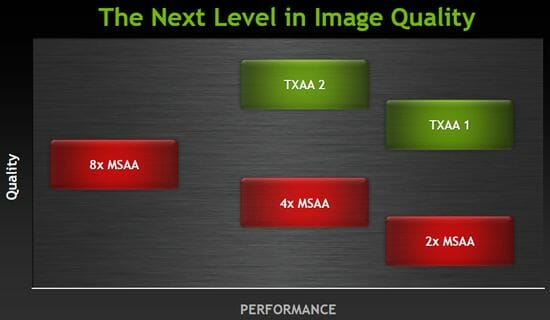

TXAA is a more sophisticated algorithm. Based on hardware MSAA, it uses a software filter to deliver high temporal antialiasing quality at a small performance hit.

Two TXAA modes have been announced so far. TXAA1 is somewhat better in quality than 8x MSAA but comparable to 2x MSAA in terms of consumed resources. TXAA2 is superior to MSAA algorithms in quality and comparable to 4x MSAA in terms of resources.

These antialiasing methods will be available not only on the new Kepler-based graphics cards but also on older Fermi-based solutions (the GeForce GTX 4xx and GTX 5xx series).

Multi-Monitor Configurations

Nvidia’s GeForce2 MX series was the first to support two displays, over a decade ago. Setting it up was complicated, though, and the second display could normally only be a TV set with an S-Video interface. Starting with the GeForce 4 series, support for dual-monitor configurations has been a standard feature. Unfortunately, Nvidia was slow to progress further despite the growing popularity of multi-monitor setups; for example, TV sets with HDMI interfaces are often connected to computers as monitors. So now Nvidia wants to catch up with current trends by supporting up to four displays in the Kepler architecture. The GeForce GTX 680 comes with four display outputs by default: two dual-link DVIs, one HDMI and one DisplayPort.

A gamer may want to connect up to three monitors to get a panoramic picture in games and use a fourth monitor for something else.

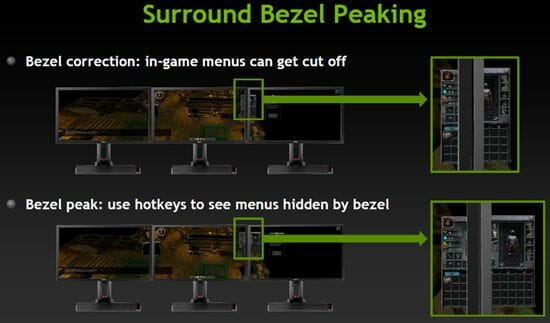

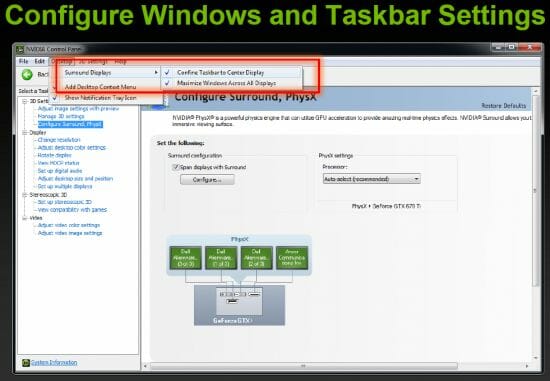

Nvidia has also done some software optimizations for multi-monitor configurations targeted at both gamers and Windows application users.

There are changes in terms of power saving, too. Older graphics cards, when connected to two monitors, could not switch to the power-saving mode and worked at their Low 3D frequencies instead, which led to higher power consumption and temperature in idle mode.

The new graphics card has fewer limitations in this respect. It can now switch to power-saving mode even with four monitors attached if all of them use the same display resolution. But if there is at least one monitor whose resolution differs from the others’, the graphics card will use the Low 3D frequencies instead.
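The idle-clock rule above amounts to a simple decision. The sketch below encodes it as described in this review; the state labels and function name are hypothetical, and the real driver logic may take other factors into account.

```python
from typing import List, Tuple

Resolution = Tuple[int, int]   # (width, height)
MAX_KEPLER_DISPLAYS = 4


def idle_clock_state(displays: List[Resolution], kepler: bool = True) -> str:
    """Idle clock state for a set of attached displays, following the rule described above.

    The returned labels are hypothetical names, not driver identifiers.
    """
    if kepler and len(displays) > MAX_KEPLER_DISPLAYS:
        raise ValueError("the GeForce GTX 680 drives at most four displays")
    if len(displays) <= 1:
        return "power-saving"
    if kepler and len(set(displays)) == 1:
        # Kepler: several displays can still idle if all resolutions match.
        return "power-saving"
    # Older cards with two or more displays, or Kepler with mixed resolutions.
    return "low-3d"


print(idle_clock_state([(2560, 1600)] * 3))                 # identical panels
print(idle_clock_state([(2560, 1600), (1920, 1080)]))       # mixed resolutions
print(idle_clock_state([(1920, 1080)] * 2, kepler=False))   # pre-Kepler card
```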

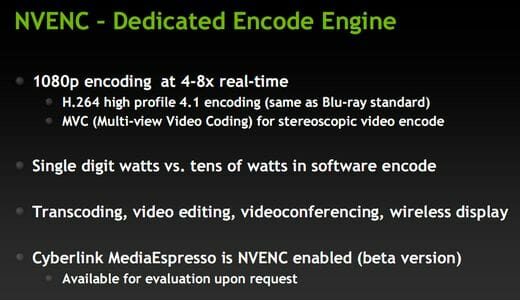

NVENC Encoder

Yet another interesting feature of the GK104/Kepler chip is an integrated hardware video encoder known as NVENC. It can encode 1080p video in the H.264 Baseline, Main and High profiles (up to level 4.1) in hardware, provided that the software supports it. Nvidia claims that NVENC is faster than software solutions that use the GPU’s CUDA cores for video encoding. This technology seems to be targeted at notebook users who need to edit or broadcast HD video captured from a camera, but since applications must support it explicitly, its usefulness depends heavily on software.

Winding up the theoretical part of our review, we have to admit that the Kepler architecture looks truly impressive even on cursory inspection. It brings a lot of diverse improvements over the previous architecture, so Nvidia can make its new product interesting to different categories of users. The new antialiasing and adaptive vertical sync algorithms help deliver smoother and better-looking visuals in video games. Support for up to four monitors can be interesting for gamers as well as professionals. The hardware video encoder and low power consumption are winning points in a notebook user’s eyes. And, finally, its highest-ever performance is just what computer enthusiasts want. Let’s now check these claims in practice.

Closer Look at Nvidia GeForce GTX 680 2GB Graphics Card

Technical Specifications

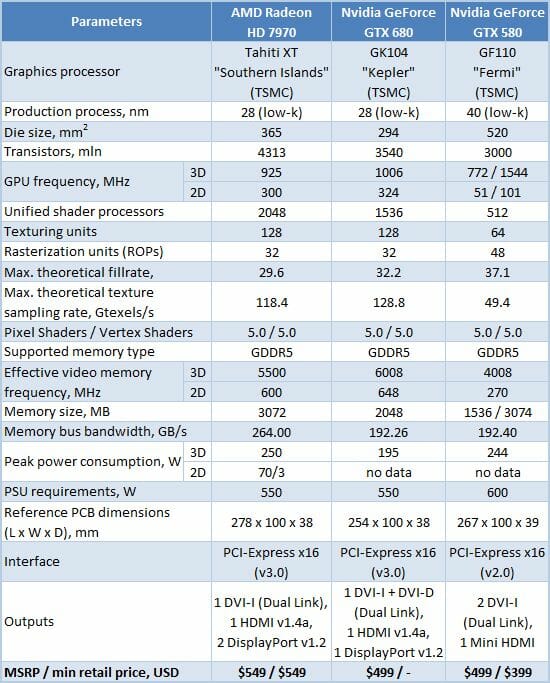

The technical specifications of the new Nvidia GeForce GTX 680 are summed up in the following table and compared side by side with its predecessor, the GeForce GTX 580, and its competitor, the AMD Radeon HD 7970:

Design and Functionality

The reference Nvidia GeForce GTX 680 doesn’t look like much. It has a dual-slot design with a plastic casing and a centrifugal fan at its rear end. The reverse side of the card is exposed:

The PCB is 254 millimeters long, and the cooler casing is no longer than that. Large letters reading “GEFORCE GTX” run along the edge of the card, and an Nvidia logo can be found in the bottom right of the face side. For the time being, the GTX 680 will mostly ship in this form, perhaps with different labels and decorations. Non-reference versions are unlikely to appear for another couple of months.

According to the official specs, the Nvidia GeForce GTX 680 is equipped with two dual-link DVI ports, one HDMI version 1.4a, and one DisplayPort version 1.2:

If you take a closer look, you can see that the top DVI connector is DVI-D whereas the bottom one is DVI-I. Besides the numerous connectors, there is also a vent grid in the card’s mounting bracket. The air exhausted out of it was very hot during our lengthy tests, by the way.

Quite expectedly, the card has two MIO connectors for SLI configurations in the top part of the PCB.

The two 6-pin power connectors are stacked one above the other, arranged so that both cable latches are on the inner side. But since the connectors are rather far apart, it is quite easy to plug and unplug the power cables. The card is specified for a peak power draw of 195 watts, which is 55 and 49 watts less than that of the AMD Radeon HD 7970 and the GeForce GTX 580, respectively.
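The PCI Express specifications cap the power a graphics card may draw from the x16 slot at 75 W and from each 6-pin auxiliary connector at 75 W (150 W for an 8-pin connector). The sketch below uses those limits to show how much in-spec headroom the two 6-pin connectors leave above the GTX 680's 195 W rating, and recovers the competitors' ratings implied by the differences quoted above. The function name is illustrative.

```python
SLOT_W = 75         # PCI Express x16 slot
SIX_PIN_W = 75      # each 6-pin auxiliary connector
EIGHT_PIN_W = 150   # each 8-pin auxiliary connector


def board_power_budget(six_pin: int = 0, eight_pin: int = 0) -> int:
    """Maximum in-spec board power for a given set of auxiliary connectors."""
    return SLOT_W + six_pin * SIX_PIN_W + eight_pin * EIGHT_PIN_W


GTX680_PEAK_W = 195
budget = board_power_budget(six_pin=2)
headroom = budget - GTX680_PEAK_W

# Ratings implied by the "55 and 49 watts less" comparison in the text.
hd7970_w = GTX680_PEAK_W + 55
gtx580_w = GTX680_PEAK_W + 49

print(f"Budget {budget} W, headroom {headroom} W")
print(f"HD 7970: {hd7970_w} W, GTX 580: {gtx580_w} W")
```

Adding a third 6-pin connector would raise the in-spec budget to 300 W.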

The plastic casing is secured with screws along the graphics card’s perimeter. Below it we can see a GPU heatsink and a metallic plate with centrifugal fan that covers almost the entire face side of the PCB.

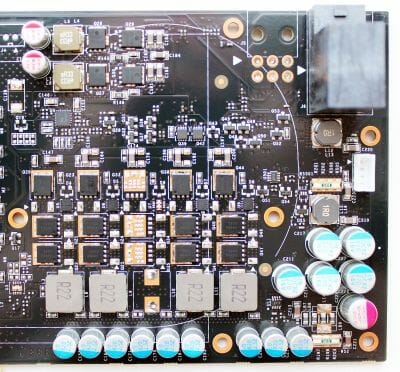

Here is the PCB with that plate removed:

We can see a 4+2 power system here. Interestingly, a fifth GPU power phase is not installed, and neither is an additional 6-pin power connector:

It looks like Nvidia developed this PCB with a higher-performance solution in mind. We don’t think that’s just a cost-cutting measure, especially at the moment of the product’s announcement.

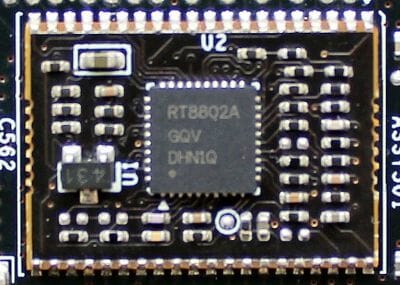

The next component we can take a look at is the RT8802A PWM controller manufactured by Richtek Technology Corporation.

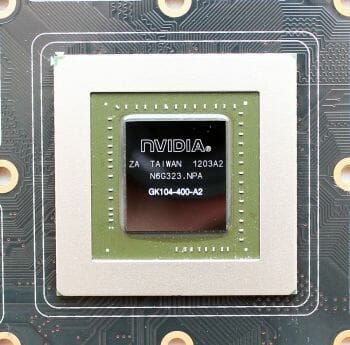

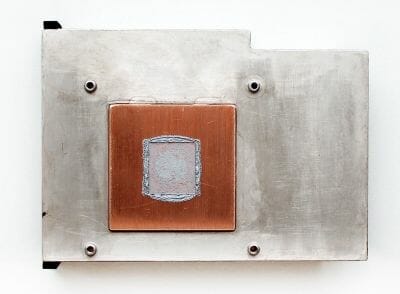

And, finally, here is the bare 28nm GK104 die. It measures a mere 294 sq. mm:

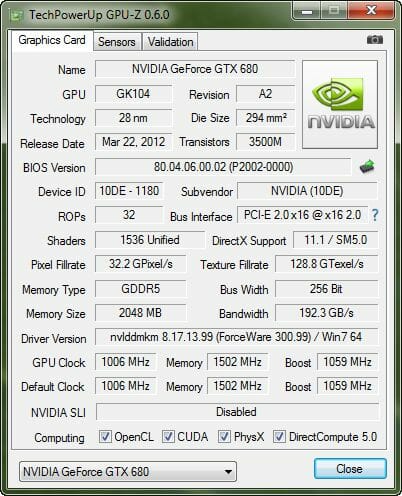

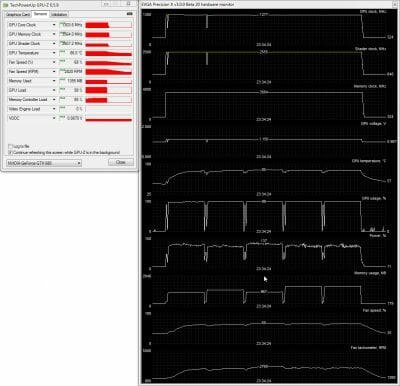

The GPU of our card is revision A2 and was manufactured in the third week of this year. Its default clock rate in 3D mode is 1006 MHz at 1.15 volts, but it can rise as high as 1124 MHz thanks to GPU Boost. In 2D applications the GPU frequency is lowered to 324 MHz at 0.987 volts. We have already discussed the new GPU’s specs above, though.

There are eight GDDR5 memory chips on the face side of the PCB. They are manufactured by Hynix Semiconductor and labeled H5GQ2H24MFR R0C:

The chips are clocked at an effective 6008 MHz at 1.6 volts, which is the fastest memory among all mass-produced graphics cards. However, the memory bus is only 256 bits wide, so the memory bandwidth is 192.26 GB/s. That matches the GeForce GTX 580 with its 384-bit memory bus and is 71.74 GB/s lower than the memory bandwidth of the AMD Radeon HD 7970 (5500 MHz and 384 bits). The GeForce GTX 680 drops its memory clock rate to 648 effective MHz in 2D applications.
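The bandwidth figures above follow from the effective (data-rate) memory frequency multiplied by the bus width in bytes. The sketch below reproduces them for the three cards, taking 1 GB as 10^9 bytes; the function name is illustrative.

```python
def bandwidth_gbs(effective_mhz: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 10^9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return effective_mhz * 1e6 * bytes_per_transfer / 1e9


cards = {
    "GeForce GTX 680": (6008, 256),
    "GeForce GTX 580": (4008, 384),
    "Radeon HD 7970": (5500, 384),
}
for name, (mhz, bits) in cards.items():
    print(f"{name}: {bandwidth_gbs(mhz, bits):.2f} GB/s")

gap = bandwidth_gbs(5500, 384) - bandwidth_gbs(6008, 256)
print(f"HD 7970 advantage: {gap:.2f} GB/s")
```

The calculation makes the trade-off explicit: the GTX 680's 50% faster memory only compensates for its one-third narrower bus, landing it at the GTX 580's level.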

Three days before the official launch of the GeForce GTX 680, a new version of the GPU-Z utility, 0.6.0, came out. It already recognizes the new product’s specifications:

The recommended retail price of the Nvidia GeForce GTX 680 is set at $499, which is $50 less than the AMD Radeon HD 7970 and $50 more than the HD 7950. Soon we will find out what the performance ranking looks like.

Cooling System Design and Efficiency

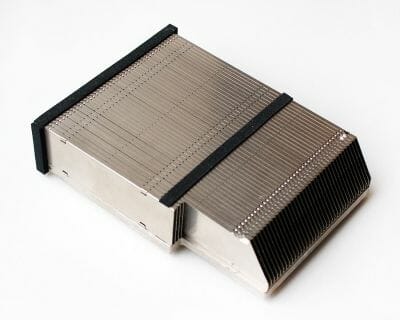

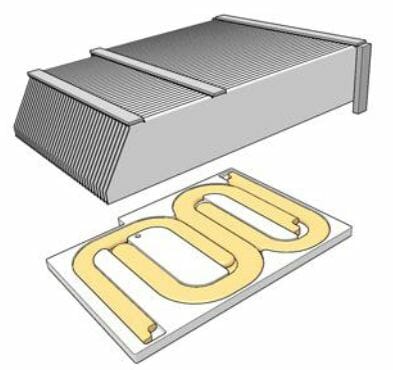

There is nothing original about the GeForce GTX 680’s cooler. It is based on a modest-sized aluminum heatsink with slim fins.

According to available documentation, the heatsink has three copper heat pipes in its base that help distribute the heat uniformly in the aluminum base and fins.

The cooler’s copper base, which is finished quite satisfactorily, contacts only the GPU:

The power components and memory chips are cooled by a metallic plate with thermal pads:

A classic centrifugal fan with a diameter of 80 millimeters is employed in this cooler:

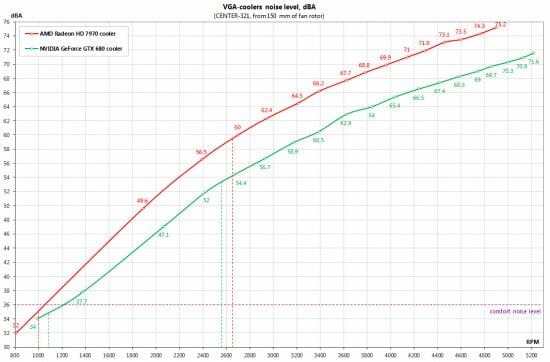

Nvidia claims that the use of high-quality materials and individually balanced fans helps keep the noise level 5 dBA lower compared to the Radeon HD 7970. We’ll check this out shortly.

The fan speed is PWM-regulated within a range of 1000 to 4200 RPM, the latter corresponding to an 85% fan speed setting. When we controlled the fan manually, we found its top speed to be 5200 RPM. Nvidia thinks that 4200 RPM is enough to cool the 28nm GPU, though.

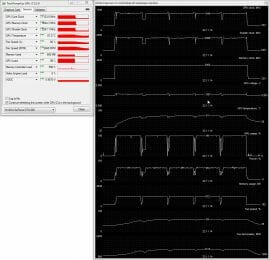

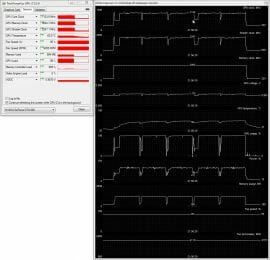

We checked out the card’s temperature while running Aliens vs. Predator (2010) in five cycles at the highest settings (2560×1600, with 16x anisotropic filtering and 4x full-screen antialiasing). We used EVGA PrecisionX version 3.0.0 Beta 20 and GPU-Z 0.5.9 as monitoring tools, the latter reporting the temperature and fan speed of the GeForce GTX 680 correctly. This test was carried out with a closed system case at an ambient temperature of 26°C. We didn’t change the card’s default thermal interface.

Here is the temperature of the card in the automatic fan regulation mode and at 85% speed:

So, the GPU was as hot as 81°C in the automatic regulation mode, the fan speed rising from 1100 to 2460 RPM. At the top speed of 4200 RPM the temperature was no higher than 62°C. Now what about noise?

Noise Level

We measured the level of noise using an electronic noise-level meter CENTER-321 in a closed room about 20 sq. meters large. The noise-level meter was set on a tripod at a distance of 15 centimeters from the graphics card which was installed on an open testbed. The mainboard with the graphics card was placed at an edge of a desk on a foam-rubber tray.

The bottom limit of our noise-level meter is 29.8 dBA, whereas the subjectively comfortable (not low, but comfortable) noise level at that distance is about 36 dBA. The speed of the graphics card’s fan was adjusted by means of a controller that changed the supply voltage in steps of 0.5 V. We’ve included the results of the reference AMD Radeon HD 7970 in the next diagram. Here are the results (the vertical dotted lines indicate the top speed of the fans in the automatic regulation mode):

As you can see, the cooling system of the reference Nvidia GeForce GTX 680 is indeed better than that of the reference AMD Radeon HD 7970. Despite the higher top speed of its fan (5200 RPM for the GTX 680 versus 4900 RPM for the HD 7970), the new card is quieter. As we’ve written above, the GeForce GTX 680’s fan is limited to 4200 RPM (the 85% fan speed setting). Under continuous 3D load, the fan speed ranges from 1080 to 2460 RPM on the GTX 680 and from 1000 to 2640 RPM on the HD 7970. Thus, Nvidia comes out the winner in this respect as well.

On the other hand, we can’t say that Nvidia’s cooler is really quiet. It was silent only in 2D mode; as soon as we launched a 3D application, we could hear the GeForce GTX 680 against the background noise of our otherwise quiet testbed, and the card grew louder the longer we tested it. So, if you want a quiet computer, you will have to look for alternatives to the reference cooler.

Overclocking Potential

The base frequency of the GPU is 1006 MHz, but GPU Boost can dynamically raise it by 5-10%, to 1056-1112 MHz or somewhat higher. We also tried to overclock our card manually using EVGA PrecisionX 3.0.0 Beta 20, checking it for stability in a few games and two benchmarks.

The Power Target parameter was set at the maximum of 132%. After some experimentation, we found that the GPU could be overclocked by 180 MHz (+17.9%) without compromising the card’s stability or image quality. The memory frequency could be increased by 1120 MHz (+18.6%), reaching 7128 MHz.
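The percentages above follow directly from the offsets. The sketch below reproduces them and also derives the resulting peak memory bandwidth, a figure the review does not state, using the same effective-clock times bus-width formula as for the default clocks. Variable names are illustrative.

```python
BASE_GPU_MHZ, BASE_MEM_MHZ = 1006, 6008      # GeForce GTX 680 defaults
GPU_OFFSET_MHZ, MEM_OFFSET_MHZ = 180, 1120   # stable offsets found in this review
BUS_WIDTH_BITS = 256


def gain_percent(base: float, offset: float) -> float:
    """Relative increase of a clock offset over its base value, in percent."""
    return offset / base * 100


oc_gpu = BASE_GPU_MHZ + GPU_OFFSET_MHZ      # overclocked base clock
oc_mem = BASE_MEM_MHZ + MEM_OFFSET_MHZ      # overclocked effective memory clock
oc_bandwidth = oc_mem * 1e6 * BUS_WIDTH_BITS / 8 / 1e9   # GB/s

print(f"GPU {oc_gpu} MHz (+{gain_percent(BASE_GPU_MHZ, GPU_OFFSET_MHZ):.1f}%)")
print(f"Memory {oc_mem} MHz (+{gain_percent(BASE_MEM_MHZ, MEM_OFFSET_MHZ):.1f}%), "
      f"{oc_bandwidth:.1f} GB/s")
```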

By the way, GPU Boost would automatically raise the GPU clock rate to 1277 MHz or, occasionally, to 1304 MHz.

The GPU temperature of the overclocked card rose from 81 to 86°C, with the cooling fan accelerating to 2820 RPM. This is our first experience of overclocking a reference GeForce GTX 680, and we are going to continue our experiments as soon as we get retail versions of the card.

Power Consumption

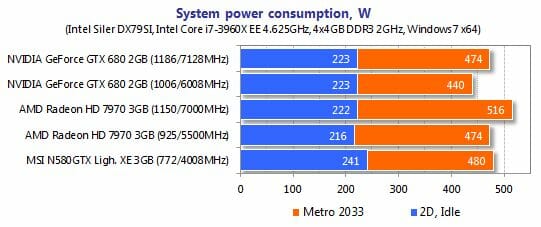

We measured the power consumption of computer systems with different graphics cards using a multifunctional panel Zalman ZM-MFC3 which can report how much power a computer (the monitor not included) draws from a wall socket. There were two test modes: 2D (editing documents in Microsoft Word and web surfing) and 3D (the benchmark from Metro 2033: The Last Refuge in 1920×1080 with maximum settings). Here are the results:

The new GeForce GTX 680 is indeed more economical than its competitor and predecessor. The GeForce GTX 680 system needs 40 watts less power under load than the GeForce GTX 580 system and 34 watts less than the AMD Radeon HD 7970 configuration. We can also note that the power consumption of the GeForce GTX 680 doesn’t grow as much as that of the Radeon HD 7970 when overclocked.

Testbed Configuration and Testing Methodology

All graphics cards were tested in a system with the following configuration:

- Mainboard: Intel Siler DX79SI (Intel X79 Express, LGA 2011, BIOS 0380 from 02/13/2012);

- CPU: Intel Core i7-3960X Extreme Edition, 3.3 GHz, 1.2 V, 6 x 256 KB L2, 15 MB L3 (Sandy Bridge-E, C1, 32 nm);

- CPU cooler: Phanteks PH-TC14PE (2 x 135 mm fans at 900 RPM);

- Thermal interface: ARCTIC MX-4;

- System memory: DDR3 4 x 4GB Mushkin Redline (Spec: 2133 MHz / 9-11-10-28 / 1.65 V);

- Graphics cards:
  - NVIDIA GeForce GTX 680 2 GB/256 bit GDDR5, 1006/6008 MHz;
  - AMD Radeon HD 7970 3 GB/384 bit GDDR5, 925/5500 MHz and 1150/7000 MHz;
  - MSI N580GTX Lightning Xtreme Edition 3 GB/384 bit GDDR5, 772/1544/4008 MHz;

- System drive: Crucial m4 256 GB SSD (SATA-III, CT256M4SSD2, BIOS v0009);

- Drive for programs and games: Western Digital VelociRaptor (300GB, SATA-II, 10000 RPM, 16MB cache, NCQ) inside Scythe Quiet Drive 3.5” HDD silencer and cooler;

- Backup drive: Samsung Ecogreen F4 HD204UI (SATA-II, 2 TB, 5400 RPM, 32 MB, NCQ);

- System case: Antec Twelve Hundred (front panel: three Noiseblocker NB-Multiframe S-Series MF12-S2 fans at 1020 RPM; back panel: two Noiseblocker NB-BlackSilentPRO PL-1 fans at 1020 RPM; top panel: standard 200 mm fan at 400 RPM);

- Control and monitoring panel: Zalman ZM-MFC3;

- Power supply: Xigmatek “No Rules Power” NRP-HC1501 1500 W (with a default 140 mm fan);

- Monitor: 30” Samsung 305T Plus.

Logically, the new Nvidia card is compared against the fastest graphics card based on an AMD GPU and the fastest card based on a previous-generation Nvidia GPU:

The MSI N580GTX Lightning Xtreme Edition 3 GB was tested only at its default clock frequencies, while the AMD Radeon HD 7970 3 GB was also tested overclocked to 1150/7000 MHz.

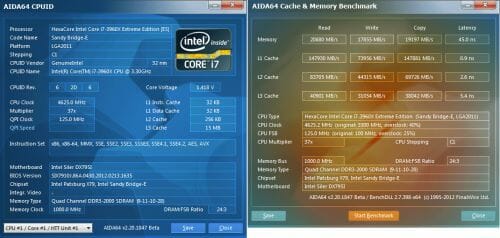

In order to reduce the dependence of the graphics cards’ performance on overall platform speed, we overclocked our 32 nm six-core CPU to 4.625 GHz by setting the multiplier to 37x and the BCLK frequency to 125 MHz, with “Load-Line Calibration” enabled. The processor Vcore was increased to 1.45 V in the mainboard BIOS:

Hyper-Threading technology was enabled. 16 GB of system DDR3 memory worked at 2 GHz frequency with 9-11-10-28 timings and 1.65V voltage.

The test session started on March 14, 2012. All tests were performed in Microsoft Windows 7 Ultimate x64 SP1 with all critical updates as of that date and the following drivers:

- Intel Chipset Drivers 29.3.0.1020 WHQL from 01/26/2011 for the mainboard chipset;

- DirectX End-User Runtimes libraries from November 30, 2010;

- AMD Catalyst 12.2 driver from 03/07/2012 + Catalyst Application Profiles 12.2 (CAP1) from 01/18/2012 for AMD based graphics cards;

- Nvidia GeForce 296.10 WHQL from 03/13/2012 for Nvidia GeForce GTX 580 and GeForce 300.99 beta for Nvidia GeForce GTX 680 graphics cards.

The graphics cards were tested in two resolutions: 1920×1080 and 2560×1600. The tests were performed in two image quality modes: “Quality+AF16x” – default texturing quality in the drivers with enabled 16x anisotropic filtering and “Quality+ AF16x+MSAA 4(8)x” with enabled 16x anisotropic filtering and full screen 4x anti-aliasing (MSAA) or 8x if the average framerate was high enough for comfortable gaming experience. We enabled anisotropic filtering and full-screen anti-aliasing from the game settings. If the corresponding options were missing, we changed these settings in the Control Panels of Catalyst and GeForce drivers. We also disabled Vsync there. There were no other changes in the driver settings.

The list of games and applications used in this test session includes two popular semi-synthetic benchmarking suites, one technical demo and 15 games of various genres:

- 3DMark Vantage (DirectX 10) – version 1.0.2.1, Performance and Extreme profiles (only basic tests);

- 3DMark 2011 (DirectX 11) – version 1.0.3.0, Performance and Extreme profiles;

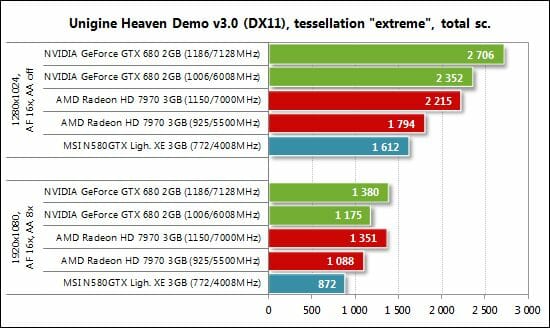

- Unigine Heaven Demo (DirectX 11) – version 3.0, maximum graphics quality settings, tessellation at “extreme”, AF16x, 1280×1024 resolution with MSAA and 1920×1080 with MSAA 8x;

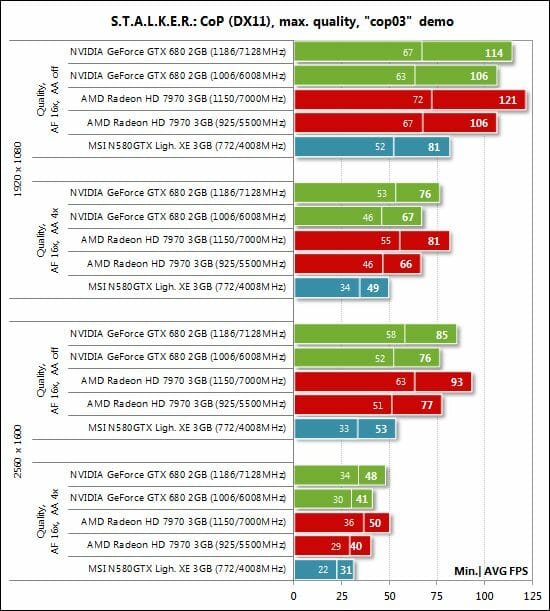

- S.T.A.L.K.E.R.: Call of Pripyat (DirectX 11) – version 1.6.02, Enhanced Dynamic DX11 Lighting profile with all parameters manually set at their maximums, we used our custom cop03 demo on the Backwater map;

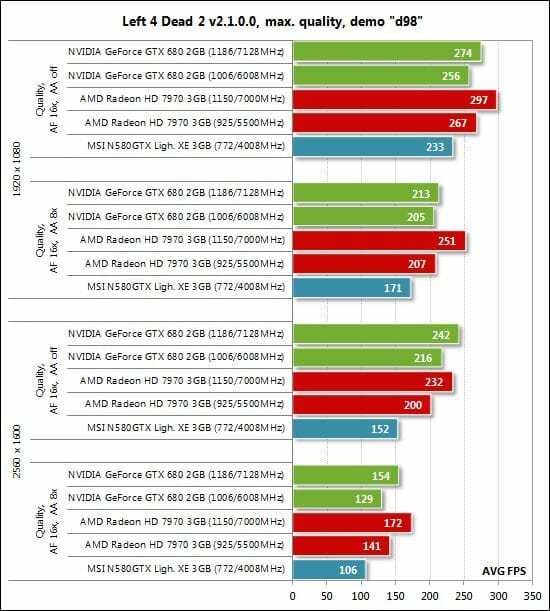

- Left 4 Dead 2 (DirectX 9) – version 2.1.0.0, maximum graphics quality settings, proprietary d98 demo (two runs) on “Death Toll” map of the “Church” level;

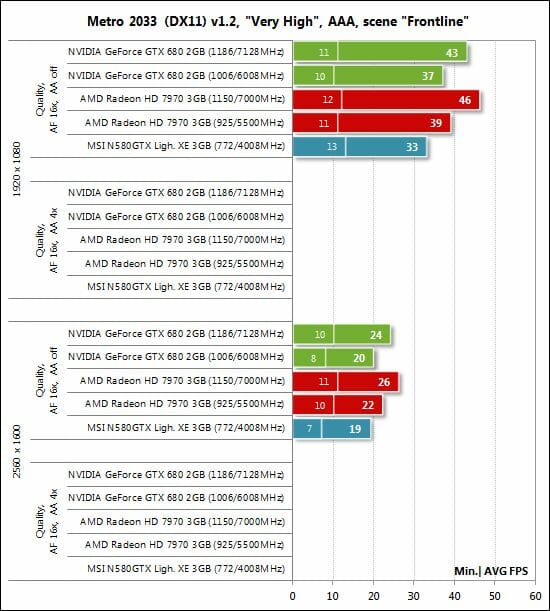

- Metro 2033: The Last Refuge (DirectX 10/11) – version 1.2, maximum graphics quality settings, official benchmark, “High” image quality settings; tessellation, DOF and MSAA4x disabled; AAA antialiasing enabled, two consecutive runs of the “Frontline” scene;

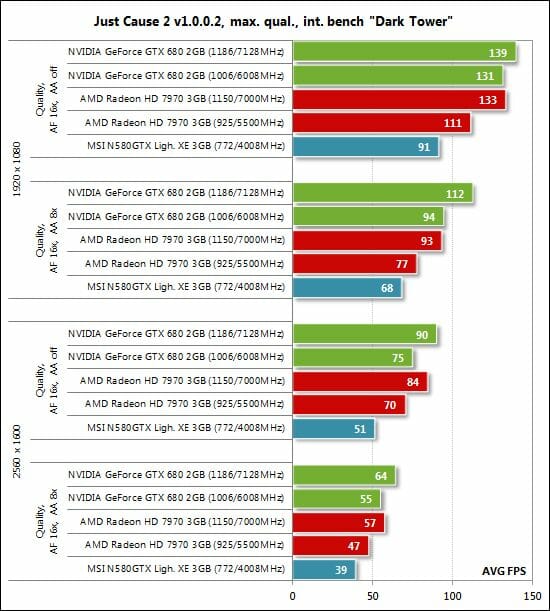

- Just Cause 2 (DirectX 11) – version 1.0.0.2, maximum quality settings, Background Blur and GPU Water Simulation disabled, two consecutive runs of the “Dark Tower” demo;

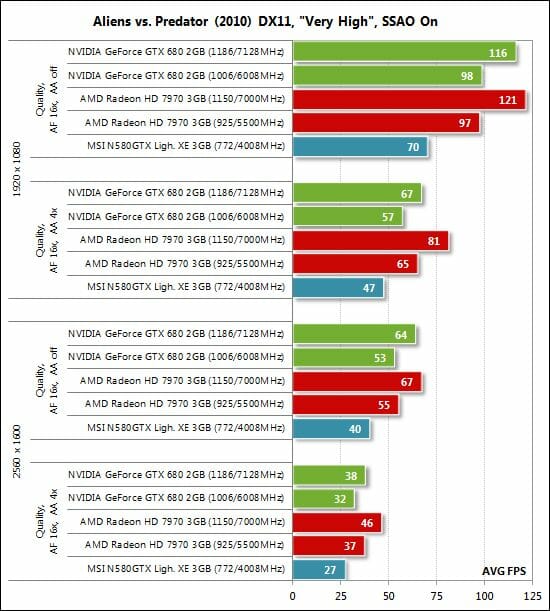

- Aliens vs. Predator (2010) (DirectX 11) – Texture Quality “Very High”, Shadow Quality “High”, SSAO On, two test runs in each resolution;

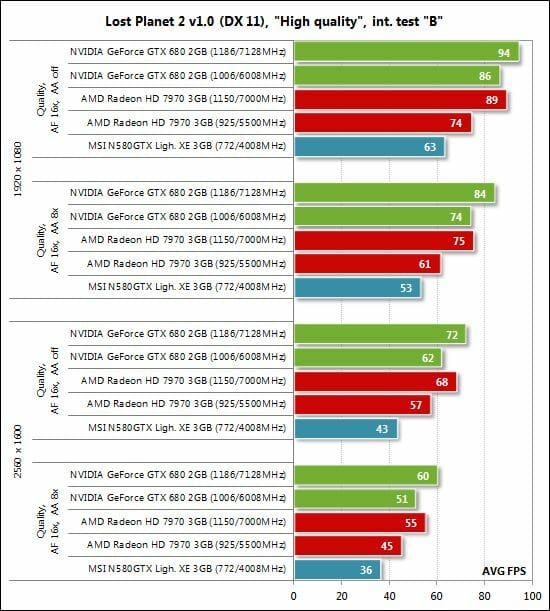

- Lost Planet 2 (DirectX 11) – version 1.0, maximum graphics quality settings, motion blur enabled, performance test “B” (average in all three scenes);

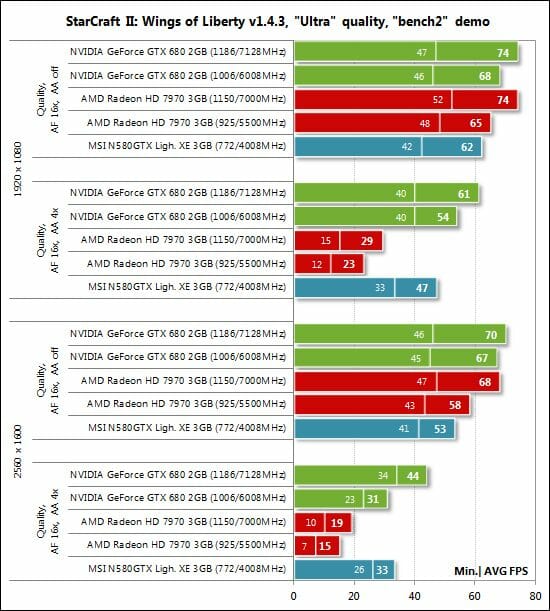

- StarCraft 2: Wings of Liberty (DirectX 9) – version 1.4.2, all image quality settings at “Extreme”, Physics at “Ultra”, reflections On, two 2-minute runs of our own “bench2” demo;

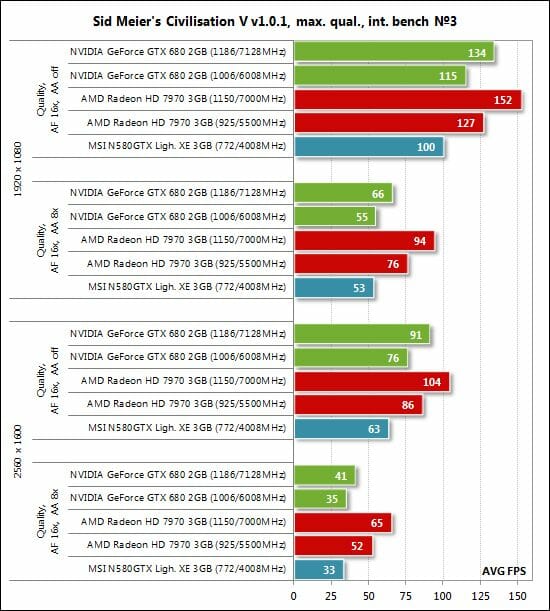

- Sid Meier’s Civilization V (DirectX 11) – version 1.0.1.348, maximum graphics quality settings, two runs of the “diplomatic” benchmark including five heaviest scenes;

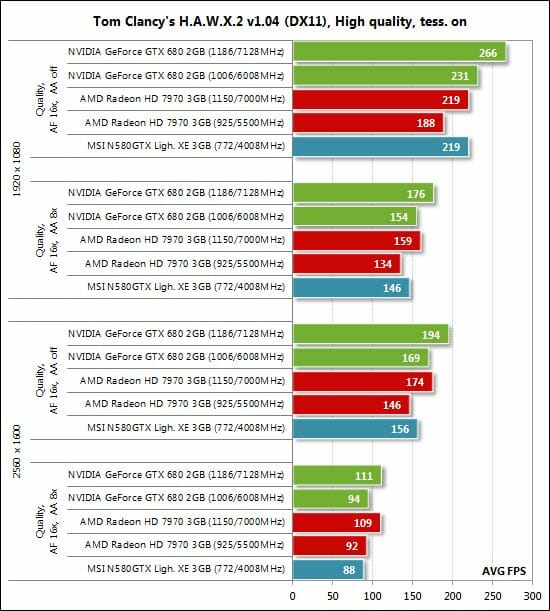

- Tom Clancy’s H.A.W.X. 2 (DirectX 11) – version 1.04, maximum graphics quality settings, shadows On, tessellation Off (not available on Radeon), two runs of the test scene;

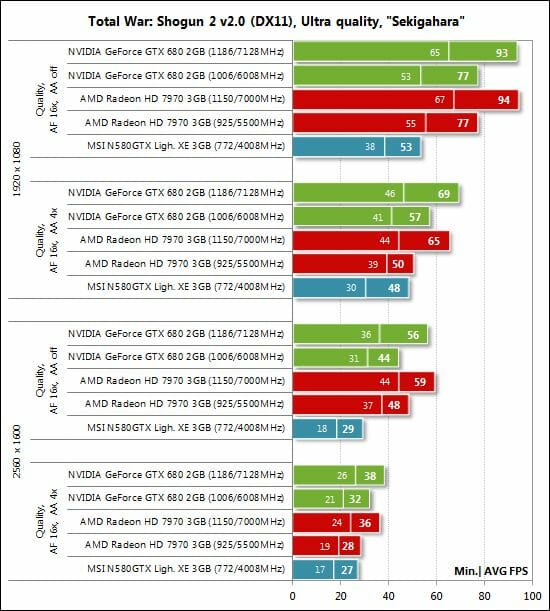

- Total War: Shogun 2 (DirectX 11) – version 2.0, built in benchmark (Sekigahara battle) at maximum graphics quality settings;

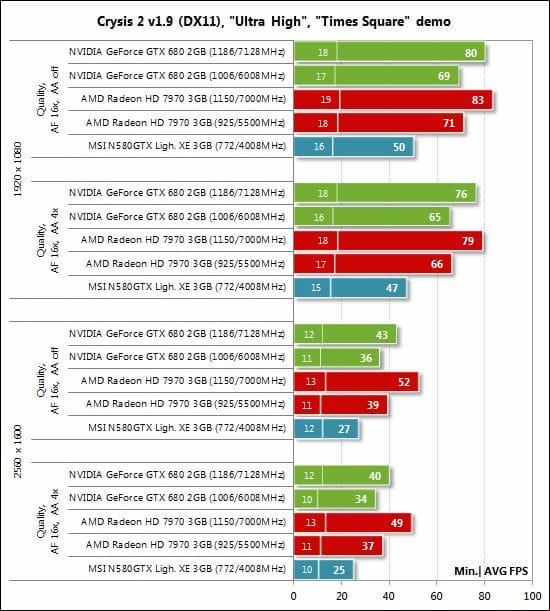

- Crysis 2 (DirectX 11) – version 1.9, we used Adrenaline Crysis 2 Benchmark Tool v.1.0.1.13. BETA with “Ultra High” graphics quality profile and activated HD textures, two runs of a demo recorded on “Times Square” level;

- DiRT 3 (DirectX 11) – version 1.2, built-in benchmark at maximum graphics quality settings on the “Aspen” track;

- Hard Reset Demo (DirectX 9) – benchmark built into the demo version with Ultra image quality settings, one test run;

- Batman: Arkham City (DirectX 11) – version 1.2, maximum graphics quality settings, physics disabled, two sequential runs of the benchmark built into the game;

- Battlefield 3 (DirectX 11) – version 1.3, all image quality settings set to “Ultra”, two successive runs of a 110-second scripted scene from the beginning of the “Going Hunting” mission.

If the game allowed recording minimum fps readings, they were also added to the charts. We ran each game test or benchmark twice and took the best result for the diagrams, but only if the difference between the runs didn’t exceed 1%. If it did, we ran the test at least once more to achieve repeatable results.
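The repeatability rule can be written as a small helper. The review does not say exactly how the 1% difference is measured or which runs are compared once a third run is added, so the sketch below assumes the difference is taken relative to the better of two runs and that any pair of runs agreeing within 1% qualifies; the function name is hypothetical.

```python
from itertools import combinations
from typing import List, Optional


def accept_result(runs_fps: List[float], tolerance: float = 0.01) -> Optional[float]:
    """Best frame rate among runs that repeat within `tolerance`; None means 'run again'."""
    agreeing = []
    for a, b in combinations(runs_fps, 2):
        best = max(a, b)
        # Relative difference, measured against the better of the two runs (assumption).
        if best > 0 and (best - min(a, b)) / best <= tolerance:
            agreeing.append(best)
    return max(agreeing) if agreeing else None


print(accept_result([61.2, 60.9]))         # runs agree: keep the best
print(accept_result([61.2, 58.0]))         # too far apart: another run is needed
print(accept_result([61.2, 58.0, 61.0]))   # the third run confirms the first
```

Comparing every pair, rather than only the last two runs, lets a single outlier run be discarded without throwing away an earlier good result.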

Performance

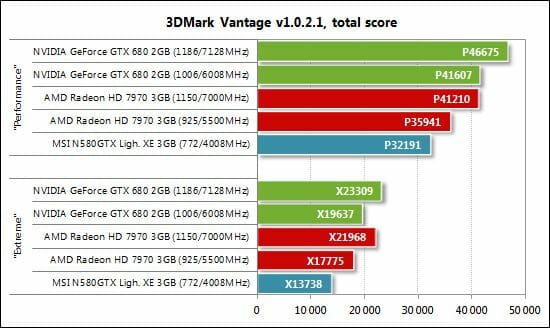

3DMark Vantage

The new GeForce GTX 680 2GB shows its worth right from the first test, enjoying a 29-43% advantage over the GeForce GTX 580 3GB and beating the Radeon HD 7970 by 11-16% depending on the quality settings. Interestingly, the HD 7970 overclocked at 1150/7000 MHz can equal the default GTX 680 in the Performance mode. But in the heavier Extreme mode the overclocked Tahiti-based card goes ahead, even though the GTX 680 can regain its leading position through overclocking to 1186/7128 MHz and GPU Boost. That’s a good beginning for the Kepler. Let’s see what we have in other tests.

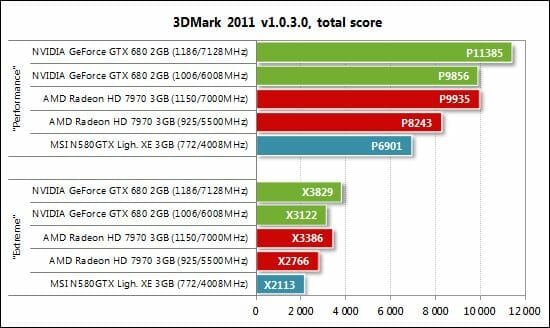

3DMark 2011

We see the same picture in 3DMark 2011. The exact numbers are as follows: the GeForce GTX 680 is 43-48% faster than the GTX 580 and 13-20% faster than the Radeon HD 7970 (both cards working at their default clock rates). The GTX 680 is also ahead when overclocked.

Unigine Heaven Demo

There are no surprises here. The GeForce GTX 680 is 35 to 46% faster than the GTX 580 and outperforms the HD 7970 by 8 to 31%. However, the AMD-based card is only slightly slower when antialiasing is enabled, both at default and overclocked frequencies. It looks like the 256-bit memory bus may hold the GeForce GTX 680 back in heavy graphics modes.

Anyway, the GeForce GTX 680 is the overall winner of our synthetic benchmarks. Let’s see if it can prove its superiority in real games.

S.T.A.L.K.E.R.: Call of Pripyat

The GeForce GTX 680 doesn’t look impressive in S.T.A.L.K.E.R.: Call of Pripyat, merely matching the Radeon HD 7970, which even pulls ahead when overclocked. The good news is that the GTX 680 is 32-43% faster than the GTX 580.

Left 4 Dead 2

Considering the high frame rates, we should compare the results in the hardest mode only: 2560×1600 with 8x MSAA. And the AMD Radeon HD 7970 is superior at such settings.

Metro 2033: The Last Refuge

Metro 2033: The Last Refuge is the third game in a row where the GeForce GTX 680 fails to deliver impressive performance and beat the Radeon HD 7970. It is a mere 5-12% faster than the GTX 580, which isn’t a large gap for GPUs from two different generations. The Kepler seems to have a tough opponent to beat.

Just Cause 2

Just Cause 2 is the first game from our list where the Nvidia GeForce GTX 680 beats the AMD Radeon HD 7970. The gap varies from 7 to 22% depending on the quality settings and resolution. Interestingly, the Tahiti-based AMD card cannot win even when overclocked. The GeForce GTX 680 looks impressive in comparison with the previous-generation GeForce GTX 580, too: the gap is 38 to 47%.

Aliens vs. Predator (2010)

The green team gets beaten by the red flagship Radeon HD 7970 in Aliens vs. Predator, the GeForce GTX 680 lagging behind the leader by 4-14%. The gap is larger in the antialiasing mode due to the narrow memory bus of the new Nvidia-based solution. We can also see that by comparing the GeForce GTX 680 (256-bit bus) with the GeForce GTX 580 (384-bit bus): the former is 33-40% faster without 4x MSAA but only 19-21% faster with 4x MSAA turned on.
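The memory-bus argument can be checked with simple arithmetic: peak theoretical bandwidth is the bus width in bytes multiplied by the effective data rate. The sketch below assumes the reference effective memory rates of 6008 MT/s (GTX 680), 4008 MT/s (GTX 580) and 5500 MT/s (HD 7970), which are not listed in this section; the function name is our own.

```python
# Peak theoretical memory bandwidth from bus width and effective data rate.
# Assumed reference effective rates (MT/s): GTX 680 = 6008, GTX 580 = 4008, HD 7970 = 5500.

def bandwidth_gb_s(bus_width_bits: int, effective_rate_mt_s: float) -> float:
    """Return peak bandwidth in GB/s (10^9 bytes per second)."""
    bytes_per_transfer = bus_width_bits / 8
    # bytes per transfer * million transfers per second = MB/s; divide by 1000 for GB/s
    return bytes_per_transfer * effective_rate_mt_s / 1000


cards = {
    "GeForce GTX 680 (256-bit)": (256, 6008),
    "GeForce GTX 580 (384-bit)": (384, 4008),
    "Radeon HD 7970 (384-bit)": (384, 5500),
}

for name, (width, rate) in cards.items():
    print(f"{name}: {bandwidth_gb_s(width, rate):.1f} GB/s")
```

Under these assumptions the GTX 680 has roughly the same peak bandwidth as the GTX 580 (about 192 GB/s) and about 27% less than the HD 7970 (264 GB/s), which is consistent with its lead shrinking once 4x MSAA is turned on.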

Lost Planet 2

The game engine is optimized for Nvidia’s GPUs, so it is no wonder that they are superior here. The GeForce GTX 680 is 9 to 21% faster than the Radeon HD 7970 depending on the graphics quality settings and resolution. It is also 37-44% ahead of the GeForce GTX 580.

StarCraft II: Wings of Liberty

The GeForce GTX 680 beats its opponent in this game, the gap amounting to 100% and more in the antialiasing mode. However, this test also indicates that the GeForce GTX 680 might be even faster if it had a 384-bit memory bus: the older GeForce GTX 580 is faster than the newcomer in the hardest test mode!

Sid Meier’s Civilization V

The AMD Radeon HD 7970 strikes back in Sid Meier’s Civilization V. It beats the GeForce GTX 680, enjoying a big advantage of 28-33% with enabled antialiasing. Take note that the Kepler-based card is a mere 4-6% ahead of its Fermi-based predecessor when antialiasing is turned on.

Tom Clancy’s H.A.W.X. 2

The GeForce GTX 680 is only barely ahead of its AMD opponent in Tom Clancy's H.A.W.X. 2, and the GeForce GTX 580 trails the leader by just 5-8%.

Total War: Shogun 2

It’s quite a surprise that the GeForce GTX 680 can beat the Radeon HD 7970 in Total War: Shogun 2 as the game is optimized for AMD’s solutions. Although a little faster than the HD 7970, the GTX 680 seems to lack memory bandwidth again.

Crysis 2

When working at their default clock rates, the GeForce GTX 680 and Radeon HD 7970 deliver the same performance in Crysis 2. The AMD card overclocks better, though, and outperforms its opponent at the increased clock rates. We can also note that the GeForce GTX 680 is considerably faster than its predecessor, the GTX 580.

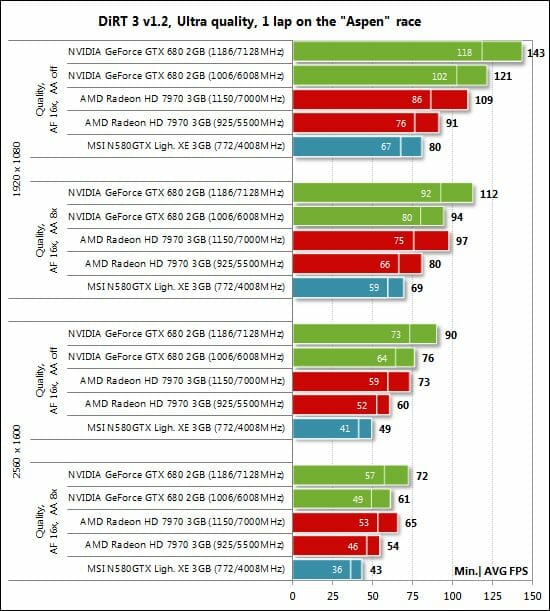

DiRT 3

The GeForce GTX 680 enjoys a massive 55% advantage over the GTX 580 in DiRT 3. The new Nvidia card is also superior to the AMD Radeon HD 7970, leaving the latter behind by 18-33%.

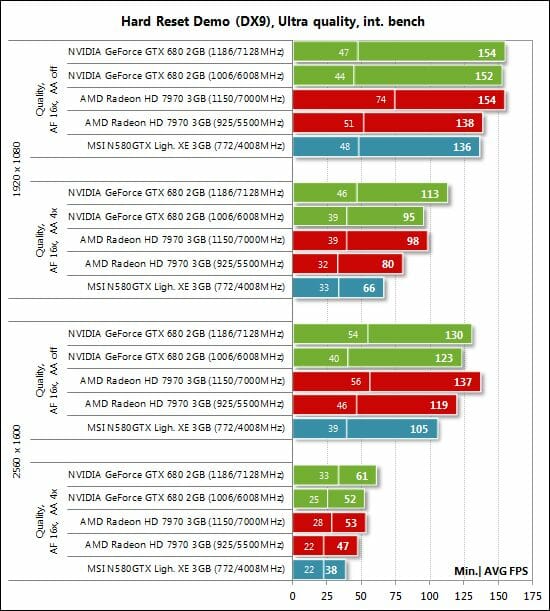

Hard Reset Demo

We can see the same situation in the harder mode of Hard Reset.

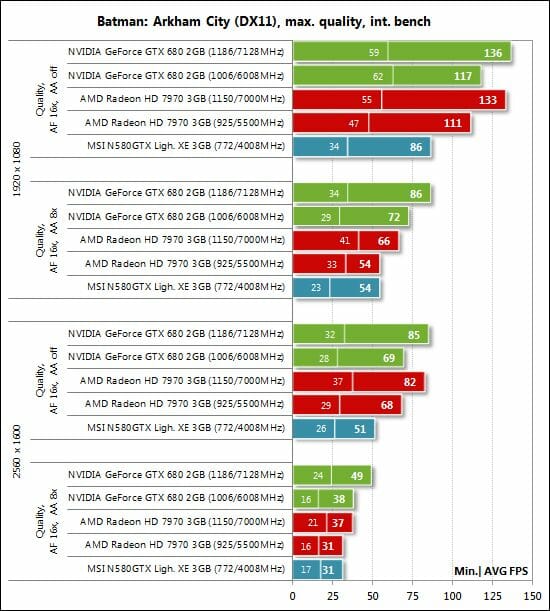

Batman: Arkham City

The GeForce GTX 680 meets no competition in Batman: Arkham City…

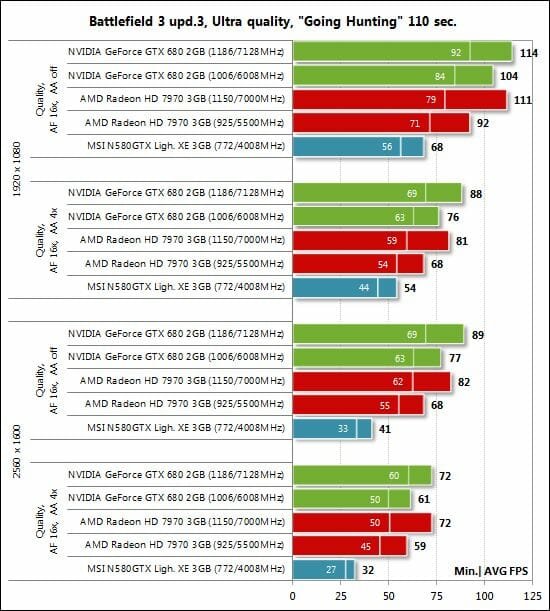

Battlefield 3

…and in Battlefield 3, too:

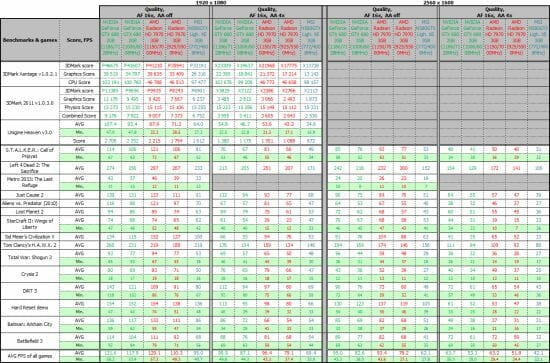

Here is a table with full test results, so we can now proceed to our summary diagrams.

Performance Summary

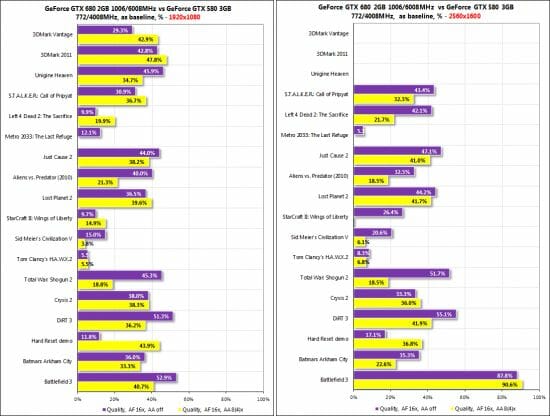

First let’s see how the Nvidia GeForce GTX 680 2GB compares with the GeForce GTX 580 3GB, the fastest single-GPU card of the previous generation, the latter serving as a baseline in the diagrams:

So, the GeForce GTX 680 is on average 30-31% faster than its predecessor in 1920×1080 and 29-37% faster in 2560×1600. Our earlier comparison of the Radeon HD 7970 with its own predecessor, the HD 6970, in the same tests showed a larger generational gain for AMD, but the GeForce GTX 580 had been faster than the Radeon HD 6970 to begin with. Besides that, the 256-bit memory bus of the GeForce GTX 680 should be blamed once again: it is obviously too narrow, as indicated by the difference in performance growth between the FSAA-on and FSAA-off modes.

Next goes a very interesting pair of summary diagrams that compare the Nvidia GeForce GTX 680 with the AMD Radeon HD 7970, both working at their default clock rates. The AMD Radeon HD 7970 serves as the baseline here.

Thus, the GeForce GTX 680 wins in all three of our synthetic benchmarks as well as in the following games: Just Cause 2, Lost Planet 2, StarCraft II: Wings of Liberty, Tom Clancy's H.A.W.X. 2, DiRT 3, Hard Reset, Batman: Arkham City and Battlefield 3. The AMD Radeon HD 7970, for its part, is ahead of its opponent in Left 4 Dead 2: The Sacrifice, Metro 2033: The Last Refuge, Aliens vs. Predator (2010), Sid Meier's Civilization V and Crysis 2. The two cards are more or less equal in S.T.A.L.K.E.R.: Call of Pripyat and Total War: Shogun 2.

If we take average numbers (which is not entirely fair, for example because of the StarCraft II: Wings of Liberty results), the GeForce GTX 680 is 9-16% ahead of the Radeon HD 7970 in 1920×1080 and 4-10% ahead in 2560×1600.
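The review does not say how these averages were computed. To illustrate why a single result like StarCraft II distorts them, the sketch below uses made-up per-test ratios (not our measurements) and compares an arithmetic mean with a geometric mean, a common alternative for averaging normalized scores. The ratio values and variable names are hypothetical.

```python
import statistics

# Hypothetical per-test ratios (GTX 680 fps / HD 7970 fps); NOT measured data.
# The last value mimics an outlier like StarCraft II, where one card is about 2x faster.
ratios = [1.10, 0.95, 1.05, 2.00]

arithmetic = statistics.fmean(ratios)          # pulled up strongly by the outlier
geometric = statistics.geometric_mean(ratios)  # less sensitive to a single large ratio
without_outlier = statistics.fmean(ratios[:-1])

print(f"arithmetic mean:      {arithmetic:.3f}")
print(f"geometric mean:       {geometric:.3f}")
print(f"mean without outlier: {without_outlier:.3f}")
```

With these invented numbers, one 2x result lifts the arithmetic mean well above what the other three tests suggest; the geometric mean reduces that effect but does not remove it.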

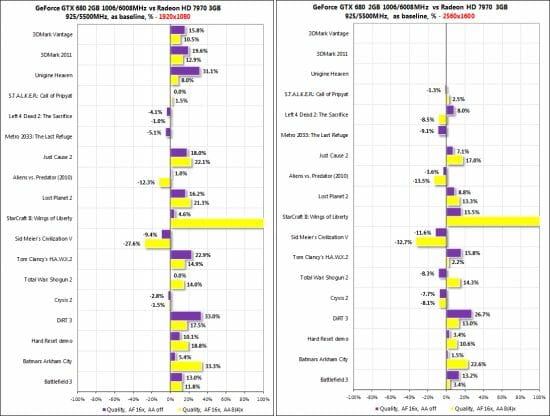

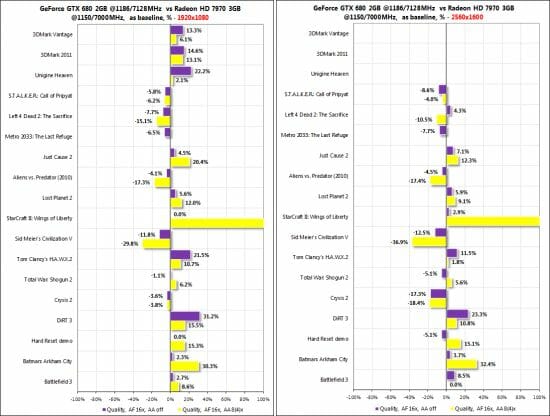

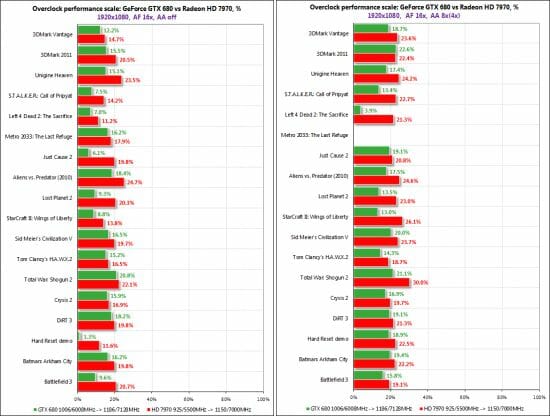

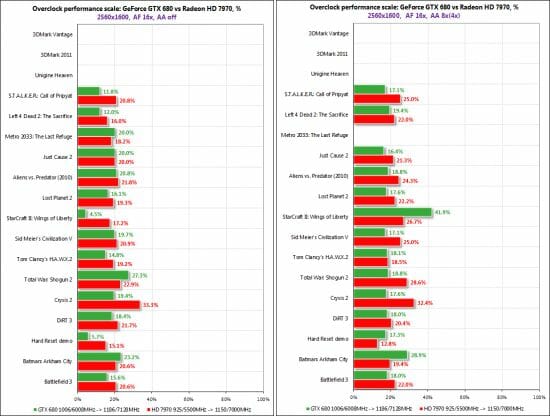

When comparing the results of the overclocked cards in the diagrams below, keep in mind that our AMD Radeon HD 7970 was overclocked by 24.3/27.3% (GPU/memory) whereas the GeForce GTX 680 was overclocked by 17.3/18.6% (without dynamic overclocking). That's why the AMD card looks better against its opponents than it does at the default clock rates.
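The overclocking percentages are simply the ratio of the overclocked to the default frequency. The sketch below reproduces the HD 7970's 24.3/27.3% figures, assuming its reference clocks of 925 MHz (GPU) and 5500 MHz effective (memory), which this section does not list; the helper name is hypothetical.

```python
def percent_increase(default_mhz: float, overclocked_mhz: float) -> float:
    """Clock increase over the default frequency, in percent."""
    return (overclocked_mhz / default_mhz - 1) * 100


# Assumed reference clocks of the Radeon HD 7970: 925 MHz GPU, 5500 MHz effective memory.
# Overclocked values are the 1150/7000 MHz reached in our tests.
gpu = percent_increase(925, 1150)
mem = percent_increase(5500, 7000)
print(f"HD 7970 overclock: GPU +{gpu:.1f}%, memory +{mem:.1f}%")
```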

The GeForce GTX 680 2GB overclocked to 1186/7128 MHz is on average 4-11% ahead of the AMD Radeon HD 7970 3GB (overclocked to 1150/7000 MHz) in 1920×1080 and 1-9% ahead in 2560×1600.

And finally we can compare the performance boost each card gets from overclocking. It’s clear the AMD Radeon HD 7970 benefits more from overclocking in nearly every test both in 1920×1080…

…and in 2560×1600:

So, the clock rates of our GeForce GTX 680 grew by 17.3/18.6% and its performance increased by an average of 12.8-16.8% in 1920×1080 and 16.6-20.4% in 2560×1600. As for the Radeon HD 7970, the 24.3/27.3% increase in its clock rates improved its performance by 18.2-22.7% in 1920×1080 and 20.5-22.9% in 2560×1600.
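To compare how efficiently each card turns extra frequency into speed, the performance gain can be divided by the GPU clock gain. The sketch below does this for the 1920×1080 figures quoted above. It is a rough ratio only: it ignores the memory overclock and, for the GTX 680, any extra frequency from GPU Boost, which the 17.3% figure excludes. The function name is our own.

```python
def scaling_ratio(perf_gain_pct: float, clock_gain_pct: float) -> float:
    """Performance gain per unit of GPU clock gain (1.0 = perfectly linear scaling)."""
    return perf_gain_pct / clock_gain_pct


# (GPU clock gain %, lowest and highest average performance gain %) in 1920x1080,
# taken from the figures in the paragraph above.
results = {
    "GeForce GTX 680": (17.3, 12.8, 16.8),
    "Radeon HD 7970": (24.3, 18.2, 22.7),
}

for card, (clock, low, high) in results.items():
    print(f"{card}: {scaling_ratio(low, clock):.2f}-{scaling_ratio(high, clock):.2f}")
```

By this measure both cards land in a similar range (roughly 0.74-0.97 for the GTX 680 and 0.75-0.93 for the HD 7970), suggesting that the HD 7970's larger absolute gain mostly reflects its larger clock increase.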

Conclusion

Nvidia's response to AMD's recent advances is quite a success. Based on the GK104 processor with the new Kepler architecture, the GeForce GTX 680 graphics card has proved to be somewhat faster overall than the AMD Radeon HD 7970 in our tests. It is also considerably quieter, more energy-efficient, smaller and lighter. The GeForce GTX 680 supports new and efficient antialiasing methods, FXAA and TXAA, which will be discussed in our upcoming reviews, and Adaptive V-Sync for smoother gaming. Finishing all this off is the availability of all popular video interfaces and support for multi-monitor configurations.

Most importantly, the recommended price of the new card is set at $499, which is $50 cheaper than the recommended price of the AMD Radeon HD 7970. Things are not as hopeless for AMD as they seem, though. The AMD Radeon HD 7970 has been around for three months, so it is already available in lots of custom versions with original cooling systems and pre-overclocked frequencies. Nvidia-based solutions of this kind have not yet even been announced.
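Combining the average leads from our performance summary with the recommended prices gives a rough performance-per-dollar comparison. The sketch below is a back-of-the-envelope calculation only: it uses recommended rather than street prices and the averaged results, which, as noted above, are skewed by StarCraft II. The helper name is hypothetical.

```python
def relative_perf_per_dollar(rel_perf: float, price_a: float, price_b: float) -> float:
    """Performance per dollar of card A relative to card B.

    rel_perf is perf_A / perf_B (e.g. 1.09 for a 9% lead of card A).
    """
    return rel_perf * price_b / price_a


# Recommended prices: GeForce GTX 680 $499, Radeon HD 7970 $549.
# Average GTX 680 leads at default clocks: 9-16% (1920x1080), 4-10% (2560x1600).
for label, low, high in [("1920x1080", 1.09, 1.16), ("2560x1600", 1.04, 1.10)]:
    lo = relative_perf_per_dollar(low, 499, 549)
    hi = relative_perf_per_dollar(high, 499, 549)
    print(f"{label}: GTX 680 offers {lo:.2f}-{hi:.2f}x the HD 7970's performance per dollar")
```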

So, the only thing we are certain of is that the GeForce GTX 680 is going to increase competition in the top-end market sector and is likely to cause a reduction in prices. This means that we end users win regardless of who's on top in the GPU makers' race.

Notes:

- Nvidia's GeForce 300.99 driver doesn't look stable yet. We observed image tearing in Metro 2033, occasional image defects in Crysis 2 and Battlefield 3, and long test loading times in 3DMark Vantage. Hopefully, driver updates will fix these and other bugs and push the graphics card's performance even higher.

- When we replaced the default GPU thermal interface with Arctic MX-4, the GPU temperature at peak load dropped by 4°C. At the same time, the fan's top speed in automatic regulation mode decreased by 120 RPM.

- Our testbed with the GeForce GTX 680 would occasionally fail to start up at all, emitting two long beeps. This must be a partial incompatibility with the Intel Siler DX79SI mainboard or a defect in our sample of the GeForce GTX 680.

- When checking out the overclocking potential of the card, we found a strong correlation between its stability and cooling. For example, if the GPU temperature was not higher than 70°C (at 85% fan speed), the card’s GPU could be overclocked by 15-20 MHz more.