Nvidia GeForce GTX Titan 6 GB Graphics Card Review

Let’s meet the fastest single-processor graphics card with mind-blowing performance. But it is not only the performance that will blow you away…

The Nvidia GeForce GTX 680 graphics card, based on the Kepler architecture, was released about a year ago, which is quite a long time by GPU industry standards, even though the industry’s pace has slowed recently. Many of us expected AMD and Nvidia to roll out new processors and technologies this spring, but that never happened. Moreover, according to the latest news, the traditional autumn announcements are uncertain too. For example, AMD has decided simply to rename its graphics cards once again.

Still, there is at least one interesting product which is absolutely new. It is the Nvidia GeForce GTX Titan graphics card.

Shipped as a limited edition (10,000 units) at a recommended price of $999 apiece, it is not a mass-market product. The GK110 processor installed on the Titan comes from the professional Tesla K20X cards designed for supercomputers and high-performance servers. Standards are different in that market, hence the extremely high price for a single-GPU solution from a desktop user’s standpoint.

Let’s see what Nvidia offers for that substantial sum of money.

Technical Specifications and Architectural Modifications

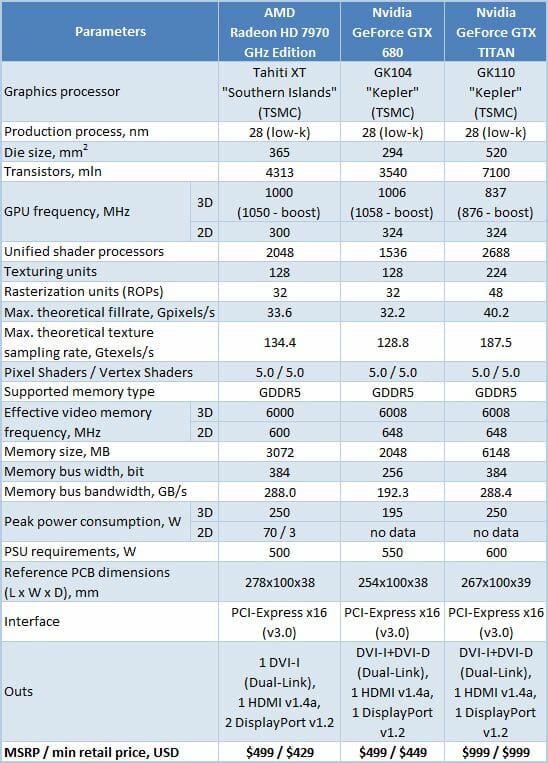

The following table helps you compare the GeForce GTX Titan with AMD’s Radeon HD 7970 GHz Edition and Nvidia’s GeForce GTX 680:

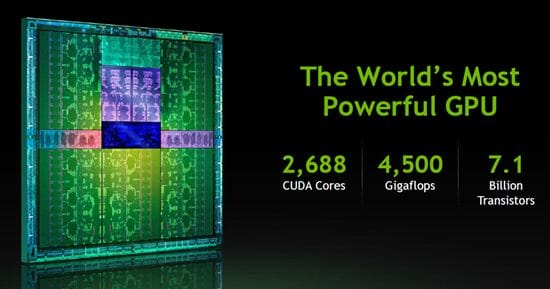

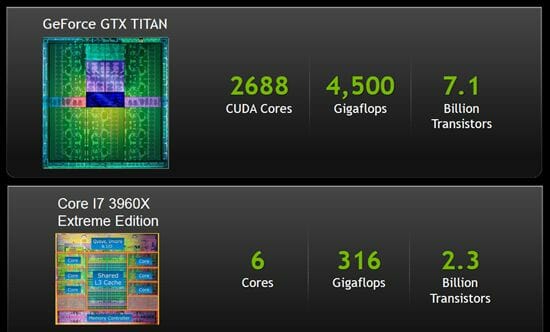

So, the Kepler-based GK110 chip is manufactured on a 28nm process and incorporates 7.1 billion transistors. With 2688 CUDA cores, it delivers a theoretical single-precision (SP) computing performance of 4.5 teraflops.

Here’s an interesting comparison Nvidia makes against Intel’s top-end CPU:

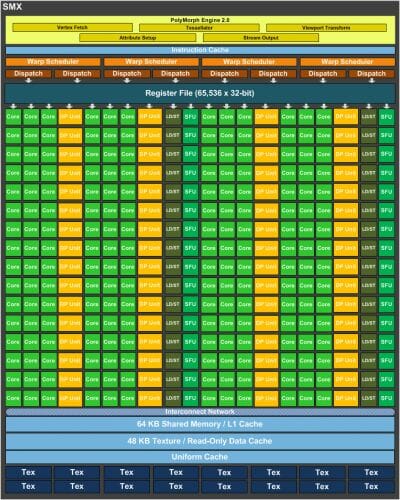

The GK110 has 15 SMX units but only 14 of them are active. There are 224 texture-mapping units and 48 raster operators in this GPU.

Unlike the GK104, which is used in the GeForce GTX 680 for example, the GK110 has 64 double-precision (FP64) units per SMX, with a peak theoretical performance of 1.3 teraflops. Thus, with 14 active SMX units, the GK110 has as many as 896 execution units that support double-precision computing.
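The unit counts above follow from Kepler’s per-SMX layout (192 CUDA cores, 64 FP64 units and 16 texture-mapping units per SMX) multiplied by the 14 active SMX units. The short Python sketch below recomputes them along with the single-precision peak, assuming one fused multiply-add (two floating-point operations) per core per clock at the 837 MHz base clock. The 1.3 teraflops double-precision figure is Nvidia’s rating and is not derived here, because the clock rate behind it is not stated in this review.

```python
# Per-SMX resources of a GK110 SMX, multiplied by the number of active SMX units.
ACTIVE_SMX = 14          # 15 SMX on the die, 14 enabled on the GTX Titan
CORES_PER_SMX = 192      # FP32 CUDA cores
FP64_PER_SMX = 64        # double-precision units
TMU_PER_SMX = 16         # texture-mapping units
BASE_CLOCK_MHZ = 837

def unit_totals(smx: int) -> dict:
    """Return chip-wide unit counts for a given number of active SMX units."""
    return {
        "cuda_cores": smx * CORES_PER_SMX,
        "fp64_units": smx * FP64_PER_SMX,
        "tmus": smx * TMU_PER_SMX,
    }

def peak_sp_tflops(cores: int, clock_mhz: float) -> float:
    """Peak FP32 throughput, counting one FMA (2 FLOPs) per core per clock."""
    return cores * 2 * clock_mhz * 1e6 / 1e12

totals = unit_totals(ACTIVE_SMX)
print(totals)  # {'cuda_cores': 2688, 'fp64_units': 896, 'tmus': 224}
print(round(peak_sp_tflops(totals["cuda_cores"], BASE_CLOCK_MHZ), 2))  # 4.5
```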

Next, the GeForce GTX Titan features a 384-bit memory bus, whereas Nvidia’s previous flagship, the GeForce GTX 680, had a 256-bit one. The amount of onboard memory has been increased to an impressive 6 gigabytes, which should help the new card cope with extremely high display resolutions.

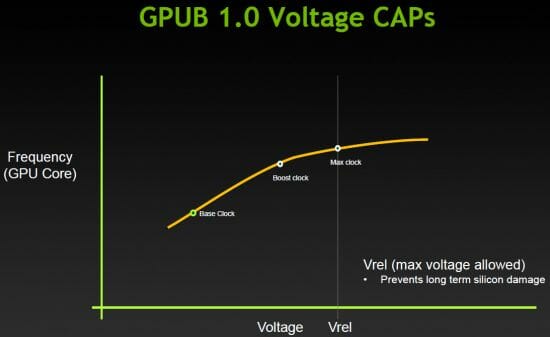

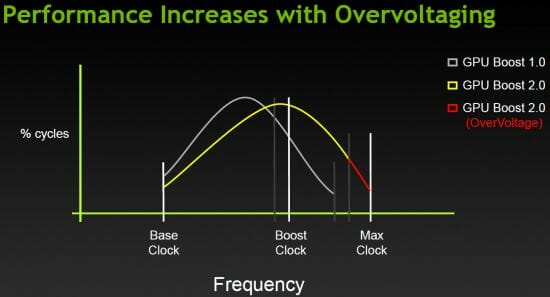

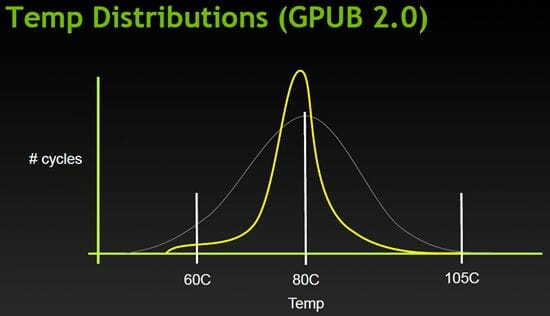

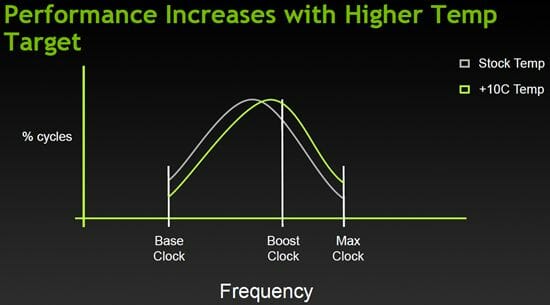

Besides the architectural enhancements, the GeForce GTX Titan supports GPU Boost 2.0, the second version of Nvidia’s dynamic overclocking technology:

Previously, the GPU clock rate depended on the voltage/power limit…

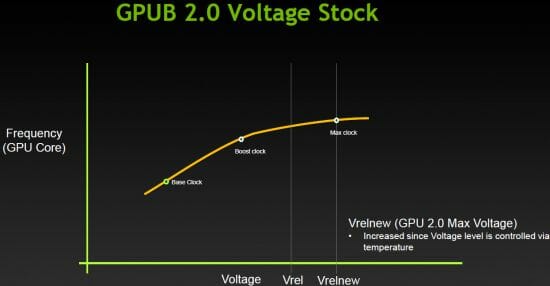

…but GPU Boost 2.0 adds a temperature limit for GPU overclocking.

This limit is set at 80°C for the GK110 by default, but the user can raise it to 94°C so that the card can work at higher clock rates.

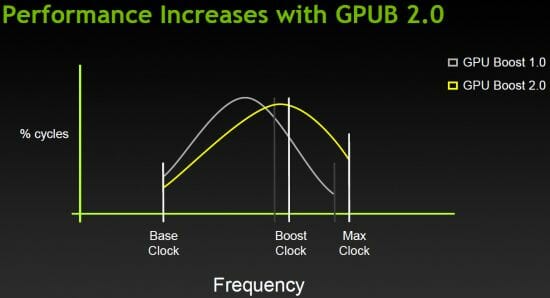

In other words, GPU Boost 2.0 can, on one hand, increase the performance of the GeForce GTX Titan when it’s possible and, on the other hand, ensure automatic control over its heat dissipation, keeping GPU temperature within safe limits.

Looking a little ahead, we can tell you that temperature is the key to successfully overclocking the GK110 chip.

At the time of writing, the Titan was fully supported by the EVGA Precision X 4.0.0 utility:

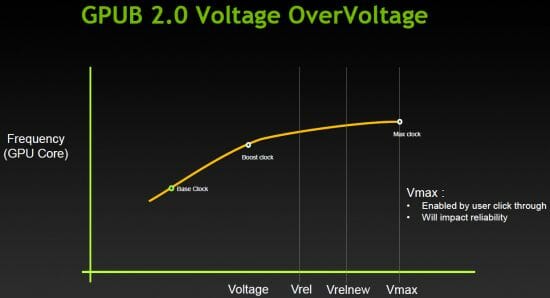

The utility can be used to overclock the Titan by adjusting parameters such as the power and temperature targets, as well as by increasing the voltage.

And now let’s take a look at the card itself.

PCB Design and Functionality

The new GeForce GTX Titan looks similar to the GeForce GTX 690. It has the same color of metallic casing on its front side, the same inserts and the same polycarbonate window:

There’s a logo with LED backlighting on the top edge of the card:

The GeForce GTX Titan is 267 mm long, which is a perfectly normal size for a modern graphics card.

It is no different from the GTX 680 in terms of its video interfaces: a dual-link DVI-I, a dual-link DVI-D, one HDMI version 1.4a, and one DisplayPort version 1.2.

At the opposite end we can see the exposed side of the heatsink and a couple of screws that secure the cooler’s casing.

The GeForce GTX Titan has two MIO connectors for building multi-GPU configurations out of two, three or even four such cards.

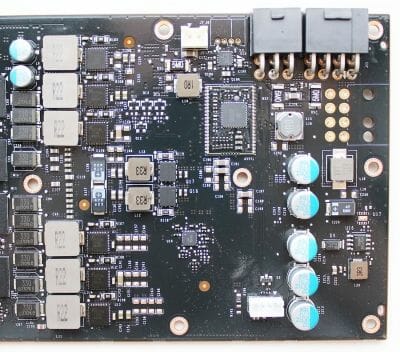

There is one 8-pin and one 6-pin power connector on the PCB, so in terms of power requirements the card seems to sit between the GTX 690 (2×8-pin) and the GTX 680 (2×6-pin). According to its specifications, the Titan is rated at no more than 250 watts. A 600-watt or better PSU is recommended for a computer with one such card.
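The connector layout can be translated into a nominal power budget. The sketch below assumes the standard PCI Express ceilings of 75 W from the x16 slot, 75 W per 6-pin connector and 150 W per 8-pin connector; these are specification limits, not measured consumption.

```python
# Nominal PCI Express power limits in watts (spec ceilings, not measured draw).
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}

def board_power_budget(connectors: list) -> int:
    """Maximum in-spec board power: x16 slot plus each auxiliary connector."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)

for name, layout in [("GTX 680", ["6-pin", "6-pin"]),
                     ("GTX Titan", ["8-pin", "6-pin"]),
                     ("GTX 690", ["8-pin", "8-pin"])]:
    print(name, board_power_budget(layout), "W")
# GTX 680 225 W
# GTX Titan 300 W
# GTX 690 375 W
```

Under these assumptions the Titan’s layout allows 300 W, leaving about 50 W of headroom over its 250 W rating.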

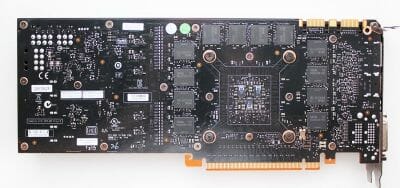

The Titan’s PCB features an original design with memory chips located on both sides of it:

Contrary to our expectations, and despite the 384-bit memory bus, the GPU is powered via six phases based on Dr.MOS components, just like on the reference GeForce GTX 680.

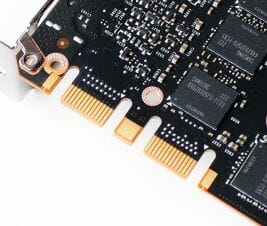

These are managed by an ON Semiconductor NCP4206 controller:

There are also two power phases for the graphics memory and one phase for PLL.

The GK110 GPU is as large as 520 sq. mm and carries no heat-spreader, so we can easily read its revision, which is A1, and the date of manufacture (50th week of 2012):

We discussed its configuration and specifications in the previous section of this review. Its 3D clock rate is 837 MHz at 1.162 volts and can be boosted to 876 MHz under high load (things are somewhat different in reality, as we will explain shortly). In 2D applications, the clock rate and voltage drop to 324 MHz and 0.875 volts.

The graphics card is equipped with FCBGA-packaged GDDR5 chips for a total of 6 gigabytes of onboard memory. They are manufactured by Samsung Semiconductor and marked as K4G20325FD-FC03:

Their effective 3D clock rate is 6008 MHz. Coupled with the 384-bit bus, this yields a peak memory bandwidth of 288.4 GB/s, which is 50% higher than that of the GeForce GTX 680 and should make the new card fast at high resolutions with any type of full-screen antialiasing enabled.
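The bandwidth figure is simply the effective data rate multiplied by the bus width in bytes. The sketch below reproduces the 288.4 GB/s number and the 50% advantage over the GeForce GTX 680, assuming the GTX 680’s memory also runs at 6008 MHz effective on its 256-bit bus and using decimal gigabytes (10^9 bytes).

```python
def peak_bandwidth_gbs(effective_mhz: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s (1 GB = 10^9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return effective_mhz * 1e6 * bytes_per_transfer / 1e9

titan = peak_bandwidth_gbs(6008, 384)   # GTX Titan: 384-bit bus
gtx680 = peak_bandwidth_gbs(6008, 256)  # GTX 680: 256-bit bus (assumed 6008 MHz)
print(round(titan, 1), round(gtx680, 1), round(titan / gtx680 - 1, 2))
# 288.4 192.3 0.5
```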

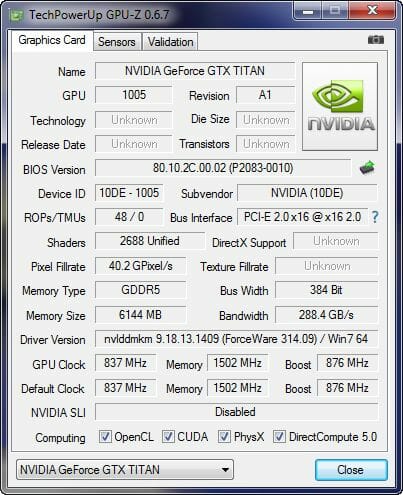

The latest version of GPU-Z doesn’t report all of the GeForce GTX Titan parameters correctly:

Cooling System and Noise

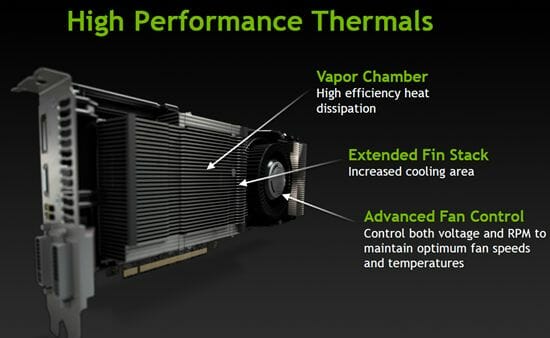

Nvidia made an effort to improve its reference cooling system for the new flagship card, given the latter’s large GPU and its increased heat dissipation. Nvidia mentions three key improvements: a vapor chamber, a heatsink with a large number of aluminum fins, and an advanced fan control system.

It is a daunting task to dismantle the Titan’s cooler, as it is secured with many screws of different types.

So, the cooler is based on a metallic frame with thermal pads. This frame cools the memory chips and some power system components. Below the casing there is an aluminum heatsink with a vapor chamber.

It is mounted on the GPU separately and has no contact with the other cooler components.

The cooler has a radial fan with a decorative plastic faceplate:

We couldn’t take the fan off, since besides being fastened to the metallic frame with three screws, it also seems to be glued to it.

The cooler’s backlighting isn’t bright, but it looks quite attractive:

The cooler’s operation is governed by the above-mentioned GPU Boost 2.0 technology and the 80°C GPU temperature limit. The GPU’s clock rate depends directly on the temperature.

Once the temperature limit is exceeded, the boost clock rate is lowered smoothly until it reaches the base clock rate of the GK110 chip. On reaching 105°C, the card enters a protection mode, also known as throttling. Thus, the performance of the Titan’s GPU varies dynamically with its temperature.
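To make this temperature-driven behavior more concrete, here is a toy model of a temperature-target controller in Python. It is a hypothetical sketch, not Nvidia’s GPU Boost 2.0 algorithm: the clock bins are simply the frequencies observed in our cooling tests, the 3°C hysteresis is an assumption, and power and voltage limits are ignored. Each call represents one control interval in which the clock drops one bin while the GPU is above its temperature target and climbs one bin once it is comfortably below it.

```python
from dataclasses import dataclass
from typing import Optional

# Illustrative clock bins (MHz) taken from the readings in this review; the real
# GPU Boost 2.0 logic uses finer steps and also weighs power and voltage limits.
CLOCK_BINS = [837, 876, 993, 1006]
PROTECTION_C = 105  # protection threshold quoted in the text

@dataclass
class BoostModel:
    """Toy temperature-target controller; not Nvidia's actual algorithm."""
    temp_target_c: float = 80.0        # default target, user-adjustable to 94
    hysteresis_c: float = 3.0          # assumed margin before stepping back up
    index: int = len(CLOCK_BINS) - 1   # start in the highest bin

    def step(self, gpu_temp_c: float) -> Optional[int]:
        """Return the clock for one control interval, or None in protection mode."""
        if gpu_temp_c >= PROTECTION_C:
            return None                # protection mode is not modelled further
        if gpu_temp_c > self.temp_target_c and self.index > 0:
            self.index -= 1            # over target: drop one bin, never below base
        elif (gpu_temp_c < self.temp_target_c - self.hysteresis_c
              and self.index < len(CLOCK_BINS) - 1):
            self.index += 1            # well under target: climb one bin
        return CLOCK_BINS[self.index]

default = BoostModel()
print([default.step(t) for t in (70, 81, 81, 81, 81)])  # [1006, 993, 876, 837, 837]
raised = BoostModel(temp_target_c=94)
print([raised.step(t) for t in (70, 81, 87, 87)])       # [1006, 1006, 1006, 1006]
```

With the default 80°C target, a sustained 81°C walks the model down to the 837 MHz base clock; with the target raised to 94°C, the same temperatures stay below the threshold and the model holds 1006 MHz.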

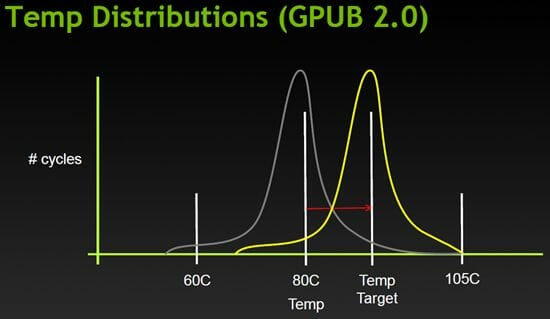

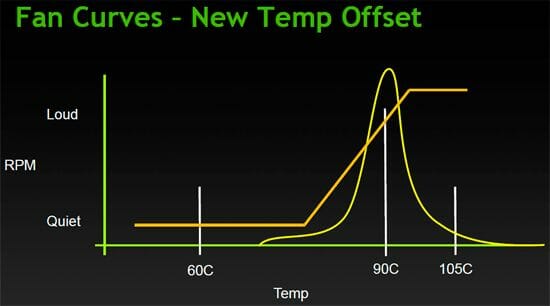

You can lift the GPU temperature limit up to 94°C using the EVGA Precision X utility:

This will keep the Titan running longer at higher frequencies, but as soon as the new temperature limit is reached, the clock rate will go down again. Here’s a diagram from Nvidia illustrating the performance/temperature correlation of the Titan’s GPU:

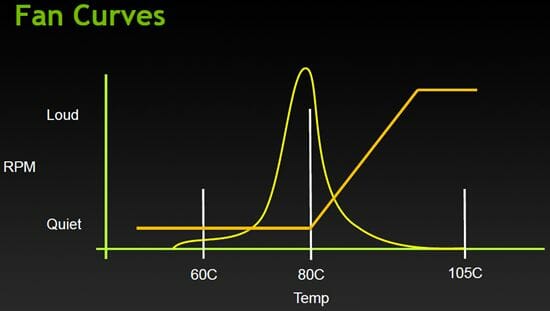

The fan speed is regulated so as to keep the graphics card quiet until the GPU reaches 80°C. After that, the fan begins to accelerate.

As you can see, Nvidia emphasizes quietness over temperature, relying on the GPU’s protection mechanisms and GPU Boost 2.0.

If the temperature limit is raised, the fan will run at higher speeds to keep the card stable.

To test the cooler’s efficiency, we used five consecutive runs of the fairly demanding game Aliens vs. Predator (2010) at the highest image quality settings at 2560×1440 with 16x anisotropic filtering and 4x MSAA. We used MSI Afterburner 3.0.0 beta 6 and GPU-Z 0.6.7 as monitoring tools. The test was performed inside a closed system case at a room temperature of 25°C.

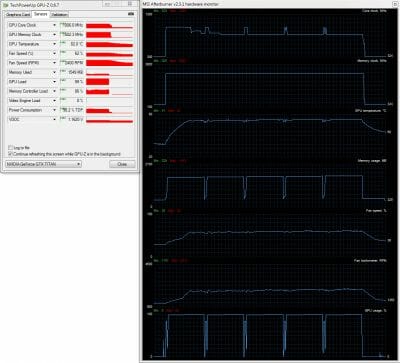

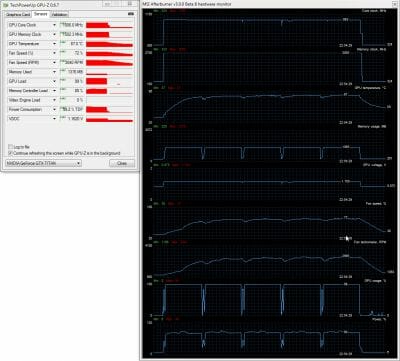

So, let’s first take a look at the graphics card’s temperature at its default power and temperature limits. The fan is regulated automatically:

As you can see, the GPU quickly reached 80°C during the first cycle of our gaming test. After that, the boost clock rate, which was 1006 MHz (yes, 1006 rather than 876 MHz), dropped first to 993 MHz, then to 876 MHz and finally to the base level of 837 MHz. In other words, once the GPU exceeds 80°C, boost mode on the Titan is effectively out of the question. As for the fan, its speed rose from 1100 RPM to 2412 RPM and stayed at that level thereafter.

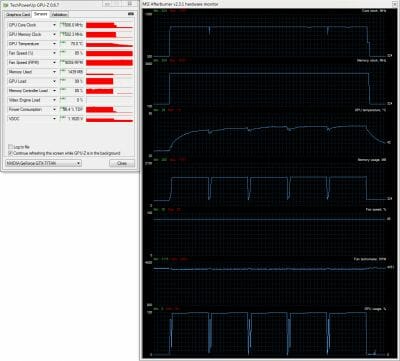

Now let’s try to raise the temperature limit to 94°C and set the power limit to 106%. The fan is still regulated automatically:

That’s quite a different picture! After reaching the peak 1006 MHz, our Titan only dropped its clock rate to 993 MHz and maintained it throughout most of the test. Naturally, the Titan will deliver much higher performance this way than at its noise-optimized default settings. The peak GPU temperature during this test was 87°C, while the fan accelerated from 2412 to 2995 RPM.

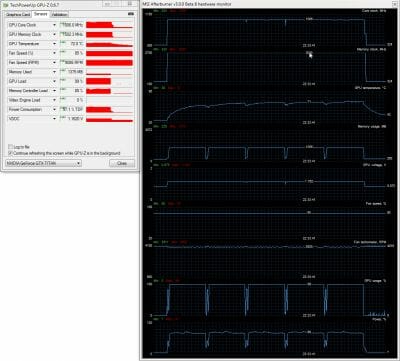

Now we roll the temperature and power limits back to their defaults (80°C/100%) but set the fan at its maximum speed and rerun the test:

Everything is simple here. The GPU temperature peaks at 70°C, and the GPU works in boost mode at 1006 MHz all the time. Now what if we raise the temperature and power limits to 94°C and 106% and again run the fan at its maximum speed? Let’s see.

There is no difference in clock rates, but the peak GPU temperature is 2°C higher, at 72°C.

Summing everything up, we can say that proper cooling is highly important if you want the GeForce GTX Titan’s GPU to deliver its highest sustained performance in 3D applications.

We couldn’t measure the noise level of our GeForce GTX Titan because we either had to remove the casing (and obtain irrelevant results) or drill through the casing to access the fan connector. Neither option was appropriate for us, so we had to limit ourselves to our subjective impressions.

The card is virtually silent in 2D mode, with its fan running at 1100 RPM. In 3D mode, the Titan is still comfortable enough at its default settings, and the fan changes speed smoothly. Above 2500 RPM, the GeForce GTX Titan becomes audible against the background noise of a quiet computer, yet it remains quieter than, for example, the dual-processor GeForce GTX 690 or the reference AMD Radeon HD 7970. All in all, the cooling system is surprisingly quiet for a graphics card with a TDP of 250 watts.

Overclocking Potential

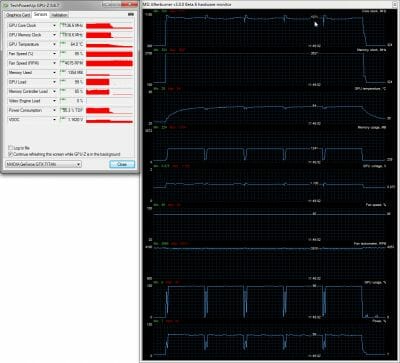

We checked out the overclocking potential of our GeForce GTX Titan using the EVGA Precision X utility at the maximum power and temperature targets:

For better cooling, we removed the side panel of our computer case and set the Titan’s fan to its maximum speed of 4000 RPM.

After several hours of testing, we managed to increase the base GPU clock rate by 135 MHz and the memory frequency by 1300 MHz.

The resulting clock rates were 972/1011/7308 MHz.

Eventually, however, we had to reduce the graphics memory frequency by 40 MHz (to 7268 MHz) for further testing. The GPU frequency of the overclocked card would occasionally peak at 1137 MHz, but it always dropped to 1071 MHz under high load.

As you can see, the GPU felt quite comfortable for a GK110 under these conditions, its temperature never exceeding 64°C. Our attempt to overclock it at a voltage of 1.187 volts was unsuccessful, so we settled on the clock rates mentioned above.
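The overclocked frequencies follow from adding the offsets to the stock values. The sketch below assumes, as the numbers suggest, that the GPU offset shifts the base and rated boost clocks by the same amount; the helper name `apply_offsets` is ours and is not part of EVGA Precision X.

```python
# Stock clocks of the GTX Titan as given in this review (MHz).
STOCK = {"base": 837, "boost": 876, "memory_effective": 6008}

def apply_offsets(stock: dict, gpu_offset: int, mem_offset: int) -> dict:
    """Hypothetical helper: a GPU offset shifts base and boost alike; memory has its own offset."""
    return {
        "base": stock["base"] + gpu_offset,
        "boost": stock["boost"] + gpu_offset,
        "memory_effective": stock["memory_effective"] + mem_offset,
    }

first_try = apply_offsets(STOCK, 135, 1300)   # initial result
final = apply_offsets(STOCK, 135, 1300 - 40)  # memory backed off by 40 MHz
mem_gain_pct = (final["memory_effective"] / STOCK["memory_effective"] - 1) * 100
print(first_try)                              # {'base': 972, 'boost': 1011, 'memory_effective': 7308}
print(final["memory_effective"], round(mem_gain_pct, 1))  # 7268 21.0
```

Note that the boost frequency actually observed under load (1071 MHz, with occasional peaks at 1137 MHz) exceeds the rated 1011 MHz, just as the stock card ran at 1006 MHz rather than its rated 876 MHz.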

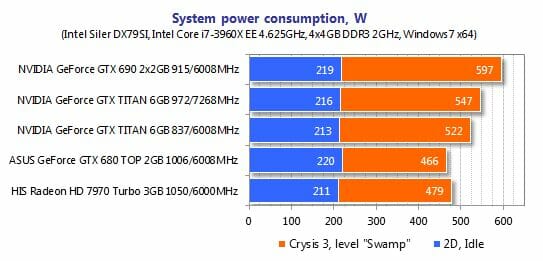

Power Consumption

We measured the power consumption of our testbed with different graphics cards using a multifunctional Zalman ZM-MFC3 panel, which reports how much power a computer (without the monitor) draws from a wall outlet. There were two test modes: 2D (editing documents in Microsoft Word or web surfing) and 3D (four runs of the introductory scene of Crysis 3 at 2560×1440 with maximum image quality settings, but without MSAA). Our tests showed that the new game is more demanding than the previously used Metro 2033: The Last Refuge, which is why we chose to replace it.

For comparison, we also added the results for the Nvidia GeForce GTX 690, ASUS GeForce GTX 680 DirectCU II TOP (at reference frequencies) and HIS 7970 IceQ X2 GHz Edition 3 GB. Let’s see what we got:

When the GeForce GTX Titan works at its default clock rates, its system needs 55 to 60 watts more than the system with the ASUS GeForce GTX 680 and 44 to 45 watts more than the system with the HIS Radeon HD 7970. When the Titan is overclocked, power consumption grows by 25 watts to 547 watts, which isn’t much for a configuration with a high-end graphics card. In fact, this is 50 watts less than the system with the dual-processor Nvidia GeForce GTX 690 requires. So, there are no surprises here.

The configurations are all comparable to each other in terms of their idle power draw.

Testbed Configuration and Testing Methodology

All participating graphics cards were tested in a system with the following configuration:

- Mainboard: Intel Siler DX79SI (Intel X79 Express, LGA 2011, BIOS 0537 from 7/23/2012);

- CPU: Intel Core i7-3960X Extreme Edition, 3.3 GHz, 1.2 V, 6 x 256 KB L2, 15 MB L3 (Sandy Bridge-E, C1, 32 nm);

- CPU cooler: Phanteks PH-TC14PE (2 x 135 mm fans at 900 RPM);

- Thermal interface: ARCTIC MX-4;

- Graphics cards:

- Nvidia GeForce GTX Titan 6 GB;

- Nvidia GeForce GTX 690 2 x 2 GB;

- ASUS GeForce GTX 680 DirectCU II TOP 2 GB;

- HIS 7970 IceQ X2 GHz Edition 3 GB;

- System memory: DDR3 4 x 4GB Mushkin Redline (Spec: 2133 MHz / 9-11-10-28 / 1.65 V);

- System drive: Crucial m4 256 GB SSD (SATA-III, CT256M4SSD2, BIOS v0009);

- Drive for programs and games: Western Digital VelociRaptor (300GB, SATA-II, 10000 RPM, 16MB cache, NCQ) inside Scythe Quiet Drive 3.5” HDD silencer and cooler;

- Backup drive: Samsung Ecogreen F4 HD204UI (SATA-II, 2 TB, 5400 RPM, 32 MB, NCQ);

- System case: Antec Twelve Hundred (front panel: three Noiseblocker NB-Multiframe S-Series MF12-S2 fans at 1020 RPM; back panel: two Noiseblocker NB-BlackSilentPRO PL-1 fans at 1020 RPM; top panel: standard 200 mm fan at 400 RPM);

- Control and monitoring panel: Zalman ZM-MFC3;

- Power supply: Seasonic SS-1000XP Active PFC F3 1000 W (with a default 120 mm fan);

- Monitor: 27” Samsung S27A850D (DVI-I, 2560×1440, 60 Hz).

Of the graphics cards listed above, only the ASUS card has higher-than-reference nominal frequencies, so for the purity of the experiment we reduced them to the reference level for GeForce GTX 680 based products:

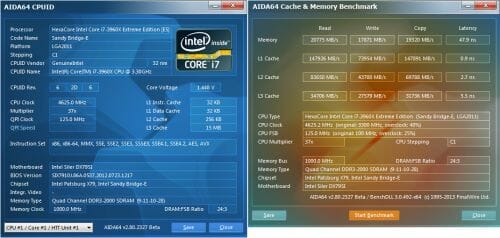

To reduce the dependence of graphics card performance on overall platform speed, we overclocked our 32 nm six-core CPU to 4.625 GHz by setting the multiplier to 37x and the BCLK frequency to 125 MHz, with “Load-Line Calibration” enabled. The processor Vcore was increased to 1.49 V in the mainboard BIOS:

Hyper-Threading technology was enabled. The 16 GB of DDR3 system memory worked at 2 GHz with 9-11-10-28 timings at 1.65 V.
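The CPU frequency above is simply the multiplier times the base clock (BCLK). A trivial sketch, with the function name chosen for illustration:

```python
def core_clock_ghz(multiplier: int, bclk_mhz: float) -> float:
    """Core clock = CPU multiplier x base clock (BCLK), returned in GHz."""
    return multiplier * bclk_mhz / 1000

print(core_clock_ghz(37, 125))  # 4.625 (our overclocked setting)
print(core_clock_ghz(33, 100))  # 3.3   (stock Core i7-3960X)
```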

The test session started on February 24, 2013. All tests were performed in Microsoft Windows 7 Ultimate x64 SP1 with all critical updates as of that date and the following drivers:

- Intel Chipset Drivers 9.4.0.1014 WHQL from 02/07/2013 for the mainboard chipset;

- DirectX End-User Runtimes libraries from November 30, 2010;

- AMD Catalyst 13.2 Beta 6 (12.100.17.0) driver from 02/18/2013 + Catalyst Application Profiles 12.11 (CAP2) for AMD based graphics cards;

- Nvidia GeForce 314.09 WHQL driver from 02/21/2013 for GeForce GTX TITAN and GeForce 314.07 WHQL from 02/18/2013 for GeForce GTX 690 and GTX 680 based graphics cards.

We ran our tests at two resolutions: 1920×1080 and 2560×1440. The tests were performed in two image quality modes: “Quality+AF16x”, with the drivers’ default texturing quality and 16x anisotropic filtering enabled, and “Quality+AF16x+MSAA 4(8)x”, with 16x anisotropic filtering and 4x or 8x full-screen antialiasing if the average framerate was high enough for a comfortable gaming experience. We enabled anisotropic filtering and full-screen antialiasing in the game settings. If the corresponding options were missing, we changed these settings in the Catalyst and GeForce driver control panels, where we also disabled Vsync. There were no other changes to the driver settings.

The list of games and applications used in this test session has once again been expanded and updated. It now includes the new 3DMark (2013), the latest version of the Heaven benchmark and the new, very beautiful Valley benchmark. We have also added new games: Resident Evil 6, Crysis 3 and Tomb Raider (2013). As a result, the test suite now includes two semi-synthetic benchmarking suites, two demo scenes and 14 recent games of various genres, with all updates installed as of the start of the test session:

- 3DMark 2011 (DirectX 11) – version 1.0.3.0, Performance and Extreme profiles;

- 3DMark 2013 (DirectX 9/11) – version 1.0, benchmarks in “Cloud Gate”, “Fire Strike” and “Fire Strike Extreme” scenes;

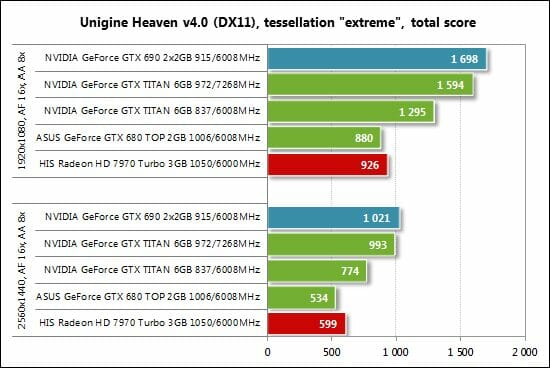

- Unigine Heaven Bench (DirectX 11) – version 4.0, maximum graphics quality settings, tessellation at “extreme”, AF16x, MSAA 8x, 1920×1080 and 2560×1440 resolutions;

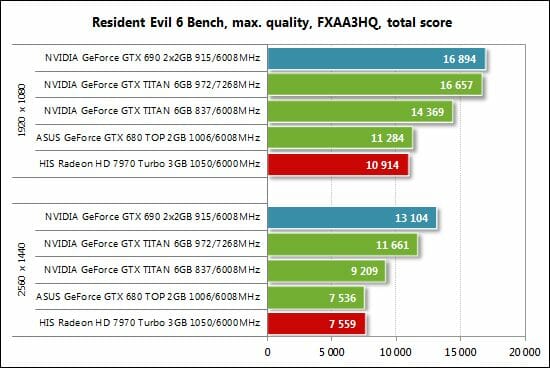

- Resident Evil 6 Bench (DirectX 9) – version 1.0, all image quality settings at maximum, FXAA3HQ antialiasing, Motion Blur – On, 1920×1080 and 2560×1440 resolutions;

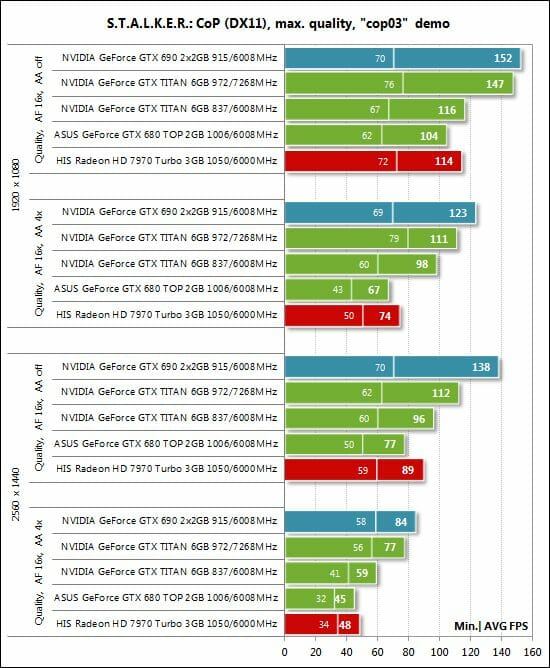

- S.T.A.L.K.E.R.: Call of Pripyat (DirectX 11) – version 1.6.02, Enhanced Dynamic DX11 Lighting profile with all parameters manually set at their maximums, we used our custom cop03 demo on the Backwater map;

- Metro 2033: The Last Refuge (DirectX 10/11) – version 1.2, maximum graphics quality settings, official benchmark, “High” image quality settings; tessellation, DOF and MSAA4x disabled; AAA antialiasing enabled, two consecutive runs of the “Frontline” scene;

- Aliens vs. Predator (2010) (DirectX 11) – Texture Quality “Very High”, Shadow Quality “High”, SSAO On, two test runs in each resolution;

- Total War: Shogun 2: Fall of the Samurai (DirectX 11) – version 1.1.0, built-in benchmark (Sekigahara battle) at maximum graphics quality settings and enabled MSAA 4x in one of the test modes;

- Crysis 2 (DirectX 11) – version 1.9, we used Adrenaline Crysis 2 Benchmark Tool v1.0.1.14 BETA with “Ultra High” graphics quality profile and activated HD textures, two runs of a demo recorded on “Times Square” level;

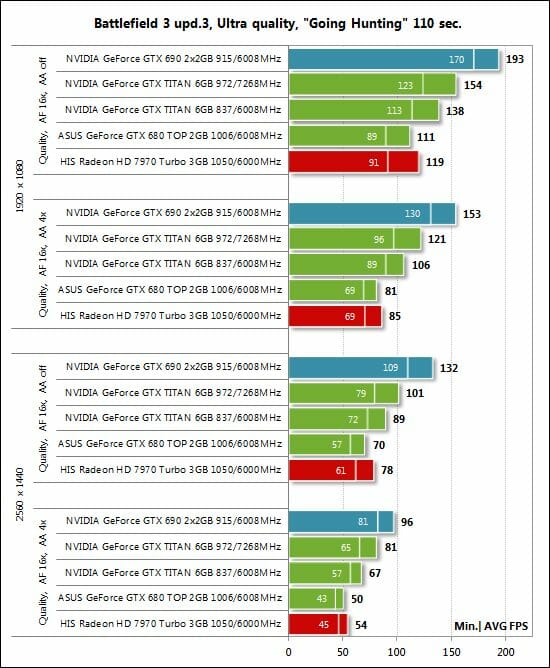

- Battlefield 3 (DirectX 11) – version 1.4, all image quality settings set to “Ultra”, two successive runs of a 110-second scripted scene from the beginning of the “Going Hunting” mission;

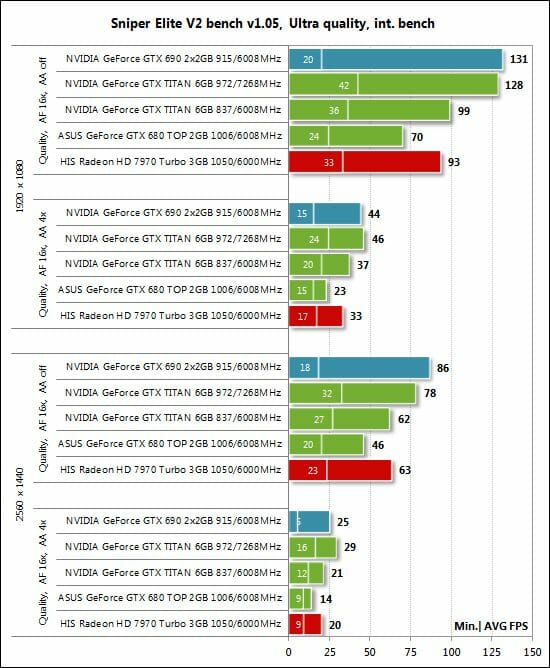

- Sniper Elite V2 Benchmark (DirectX 11) – version 1.05, we used Adrenaline Sniper Elite V2 Benchmark Tool v1.0.0.2 BETA with maximum graphics quality settings (“Ultra” profile), Advanced Shadows: HIGH, Ambient Occlusion: ON, Stereo 3D: OFF, two sequential test runs;

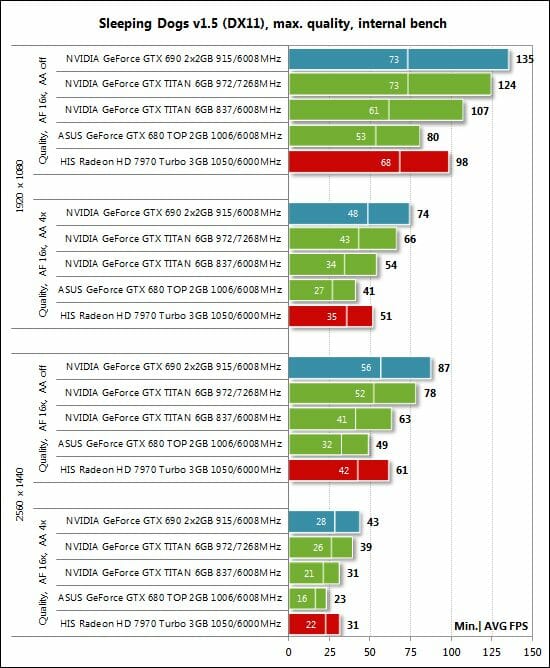

- Sleeping Dogs (DirectX 11) – version 1.5, we used Adrenaline Sleeping Dogs Benchmark Tool v1.0.0.3 BETA with maximum image quality settings, Hi-Res Textures pack installed, FPS Limiter and V-Sync disabled, two consecutive runs of the built-in benchmark with quality antialiasing at Normal and Extreme levels;

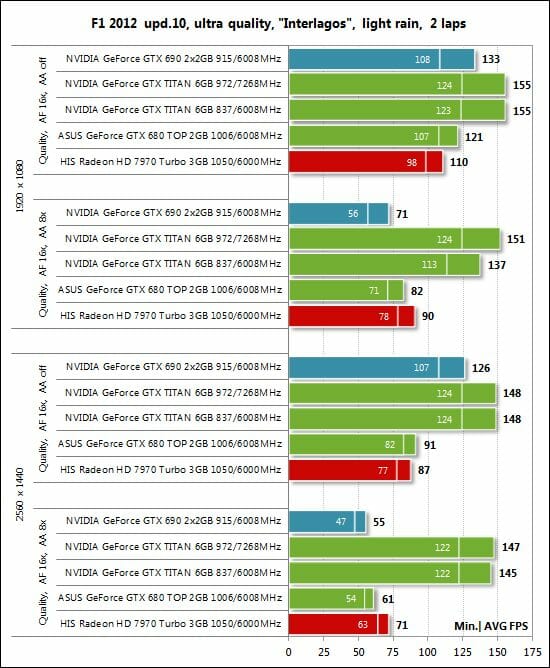

- F1 2012 (DirectX 11) – update 10, we used Adrenaline Racing Benchmark Tool v1.0.0.13 with “Ultra” image quality settings during two laps on Brazilian “Interlagos” race track with 24 other cars and a drizzling rain; we also used “Bonnet” camera mode;

- Borderlands 2 (DirectX 9) – version 1.3.1, built-in benchmark with maximum image quality settings and maximum PhysX level, FXAA enabled;

- Hitman: Absolution (DirectX 11) – version 1.0, built-in test with Ultra image quality settings, with enabled tessellation, FXAA and global lighting;

- Crysis 3 (DirectX 11) – version 1.0.0.2, all graphics quality settings at maximum, Motion Blur amount – Medium, lens flares – on, FXAA and MSAA4x modes enabled, two consecutive runs of a 110-second scripted scene from the beginning of the “Swamp” mission;

- Tomb Raider (2013) (DirectX 11) – version 1.00.716.5, all image quality settings set to “Ultra”, V-Sync disabled, FXAA and 2x SSAA antialiasing enabled, TessFX technology activated, two consecutive runs of the benchmark built into the game.

If the game allowed recording minimum fps readings, they were also added to the charts. We ran each game test or benchmark twice and used the best result for the diagrams, but only if the two results differed by no more than 1%. If the difference exceeded 1%, we repeated the test at least once more to obtain repeatable results.
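The repeatability rule above can be sketched as a small loop. This is our reading of the procedure, not the script actually used: we assume “differed by no more than 1%” refers to the relative gap between the two best results, measured against the higher one, and we cap the number of runs at an arbitrary ten. `run_benchmark` is a hypothetical callable returning one score, such as average fps.

```python
from typing import Callable, List

def best_repeatable_result(run_benchmark: Callable[[], float],
                           tolerance: float = 0.01,
                           max_runs: int = 10) -> float:
    """Run a benchmark until its two best scores agree within `tolerance`.

    Starts with two runs; if the two highest results differ by more than
    `tolerance` (relative to the higher one), runs again. Returns the best score.
    """
    results: List[float] = [run_benchmark(), run_benchmark()]
    while True:
        top, second = sorted(results, reverse=True)[:2]
        if (top - second) / top <= tolerance:
            return top
        if len(results) >= max_runs:
            raise RuntimeError(f"no two results within {tolerance:.0%} after {max_runs} runs")
        results.append(run_benchmark())

# Hypothetical fps readings: the first two differ by about 3%, so a third run is made.
readings = iter([60.0, 58.0, 60.3])
print(best_repeatable_result(lambda: next(readings)))  # 60.3
```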

Performance

Before proceeding to our tests, we want to note that the GeForce GTX Titan was tested under standard conditions, just like the other graphics cards: in its default operating mode, i.e. inside a computer case, with its default power, voltage and temperature targets, and with automatic fan control. In overclocked mode, however, the GeForce GTX Titan was tested in an open computer case, with its fan at maximum speed and with the power and temperature targets set manually to their highest levels.

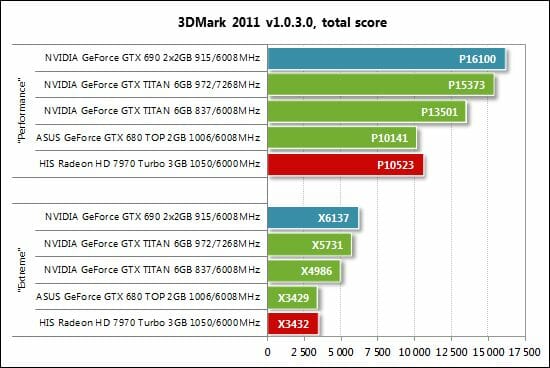

3DMark 2011

The new card gets off to a brisk start in our tests. While the GeForce GTX 680 is equal to the Radeon HD 7970 GHz Edition here, Nvidia’s new single-GPU flagship is over 33% faster than either of them. On the other hand, the GeForce GTX Titan can’t match the dual-processor GeForce GTX 690: the gap is about 5% even when the Titan is overclocked.

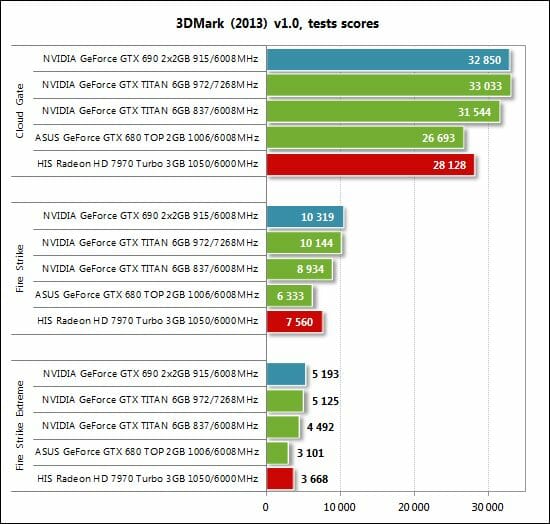

3DMark (2013)

We can see the same picture in each of the three tests from the newest version of 3DMark. The GeForce GTX Titan is up to 44% faster than the GeForce GTX 680 and 4 to 16% slower than the GeForce GTX 690. Overclocking helps it get closer to the leader, though. Take note that the Radeon HD 7970 GHz Edition is faster than the GeForce GTX 680 here, so the Titan improves Nvidia’s overall standing in this benchmark.

Unigine Heaven Bench

Once again, the GeForce GTX 680 is on par with the Radeon HD 7970 GHz Edition, the latter having only a very small advantage, whereas the GeForce GTX Titan is much faster than either of them. The Titan is 45% faster than Nvidia’s previous single-GPU flagship and even challenges the dual-processor GeForce GTX 690 when overclocked. At default settings, however, the GTX 690 is 32% faster than the Titan. That’s quite a lot, considering that they carry the same recommended price.
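Percentages in these comparisons depend on which card serves as the baseline, so “A is X% faster than B” and “B is X% slower than A” are not interchangeable. The sketch below converts the 32% figure quoted here into the equivalent “slower” figure, normalizing the Titan’s score to 1.0 purely for illustration.

```python
def percent_faster(fast: float, slow: float) -> float:
    """How much faster `fast` is, with `slow` as the baseline."""
    return (fast / slow - 1) * 100

def percent_slower(slow: float, fast: float) -> float:
    """How much slower `slow` is, with `fast` as the baseline."""
    return (1 - slow / fast) * 100

# Normalize the Titan to 1.0; "the GTX 690 is 32% faster" puts it at 1.32.
titan, gtx690 = 1.0, 1.32
print(round(percent_faster(gtx690, titan), 1), round(percent_slower(titan, gtx690), 1))
# 32.0 24.2
```

In other words, a GTX 690 that is 32% faster means the Titan is roughly 24% slower.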

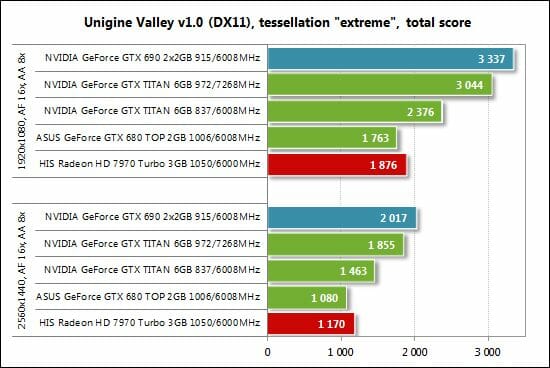

Unigine Valley Bench

This benchmark shows the same picture as Unigine Heaven. We will probably use only one of these two Unigine tests in our future reviews.

Resident Evil 6 Bench

The new Resident Evil 6 benchmark measures performance in points, too.

The GeForce GTX 680 successfully rivals the Radeon HD 7970 GHz Edition and falls behind the GeForce GTX Titan by 22 to 28%. There is also an 18 to 42% gap between the Titan and the GTX 690, which the Titan cannot bridge by overclocking.

Let’s now see what we have in actual games.

S.T.A.L.K.E.R.: Call of Pripyat

With full-screen antialiasing turned off, the GeForce GTX Titan is only slightly better than the GTX 680 and is almost matched by the Radeon HD 7970 GHz Edition, which traditionally does well in this game. The Titan shows its muscle as soon as we enable 4x MSAA, though: its advantage then amounts to 31-46%. On the other hand, it cannot beat the dual-processor GeForce GTX 690 even when overclocked.

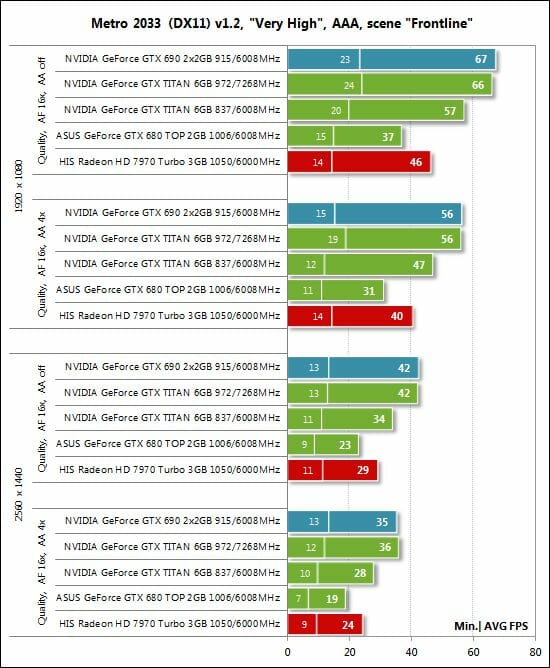

Metro 2033: The Last Refuge

A more resource-consuming application than S.T.A.L.K.E.R.: Call of Pripyat, this game shows a somewhat different picture:

The GeForce GTX Titan is 48 to 52% ahead of the GTX 680. We can’t remember the last time we saw such an impressive difference even between two generations of same-class GPUs, let alone between GPUs of the same series. When overclocked, the new card can even challenge the dual-processor GeForce GTX 690, although the latter can be overclocked too. We should also note the superb performance of the Radeon HD 7970 GHz Edition, which leaves its direct rival, the GeForce GTX 680, no chance in this game.

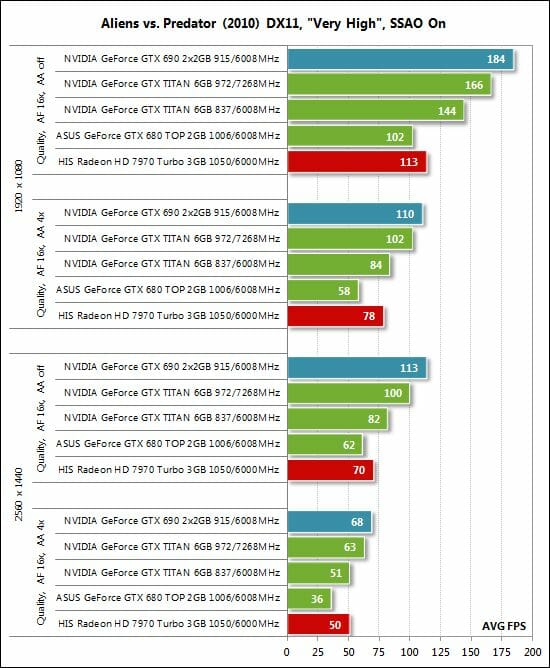

Aliens vs. Predator (2010)

The Radeon HD 7970 GHz Edition feels confident here, too:

The Radeon HD 7970 GHz Edition is especially impressive in the heaviest mode, i.e. at 2560×1440 pixels with antialiasing, where it is as fast as the GeForce GTX Titan which is twice as expensive. The latter is 32-45% faster than the GeForce GTX 680 and 22-27% slower than the GeForce GTX 690. The dual-processor card remains unrivalled even if its opponent is overclocked, although Nvidia markets them at the same price.

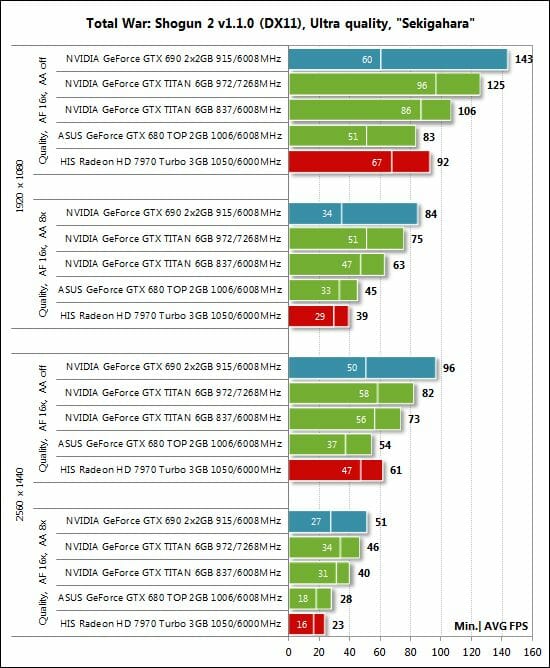

Total War: Shogun 2 – Fall of the Samurai

Here, the GeForce GTX Titan is faster than the GeForce GTX 680 by 28-43% and slower than the GTX 690 by 22-26%. Our overclocking helps the Titan narrow the gap to the leader to 7-12%. Moreover, the bottom speed of the fastest single-GPU card is higher than that of the GTX 690. The Radeon HD 7970 GHz Edition is ahead of the GeForce GTX 680 with antialiasing turned off but falls behind with 8x MSAA enabled, although the gap is small in either case.

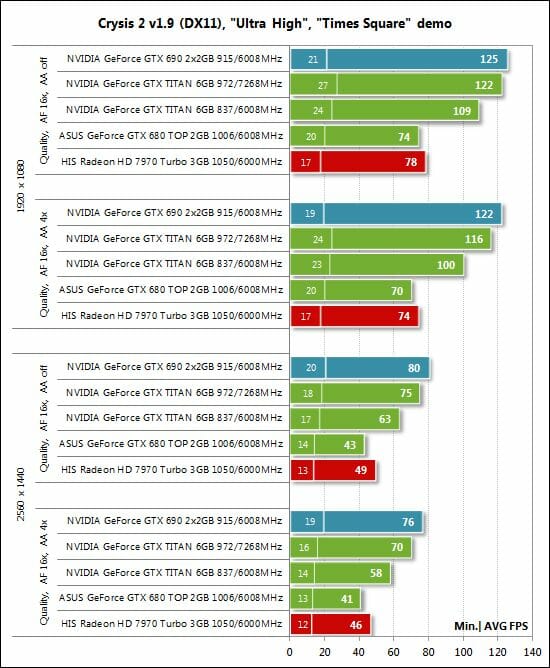

Crysis 2

Even though Crysis 3 has been released (we benchmark our cards in it at the end of this review), Crysis 2 remains a popular test, so we keep it in our test suite.

The GeForce GTX Titan is 42-47% faster than the GeForce GTX 680, which is an excellent performance boost for the same GPU generation. On the other hand, the Titan is 13 to 24% slower than the GeForce GTX 690, even though overclocking helps narrow the gap to 2-8%.

Battlefield 3

The difference between Nvidia’s single-GPU cards isn’t as impressive here as in the previous games: the Titan is 24-34% ahead. The dual-processor GeForce GTX 690 is 29-33% faster than the Titan, including in terms of bottom speed. Our overclocking isn’t as beneficial as in the earlier tests.

Sniper Elite V2 Benchmark

The Titan is 42 to 61% faster than the GeForce GTX 680 but we want to single out the excellent performance of the Radeon HD 7970 GHz Edition here. Meanwhile, the GeForce GTX 690 is ahead of the overclocked Titan without MSAA but falls behind with MSAA enabled.

Sleeping Dogs

The Radeon HD 7970 GHz Edition is brilliant in Sleeping Dogs, too:

The GeForce GTX Titan falls behind the Radeon HD 7970 GHz Edition, although it brings a 29-35% improvement over the GeForce GTX 680. The dual-processor GeForce GTX 690 remains unrivalled even when the Titan is overclocked.

F1 2012

We’ve got a weird situation in this game:

SLI doesn’t work when we enable antialiasing, but the main issue is that the GeForce GTX Titan delivers the same performance regardless of display resolution and 8x MSAA. This is probably caused by an error or optimization in the GeForce driver, which prevented us from obtaining reliable results for the GeForce GTX Titan in this game.

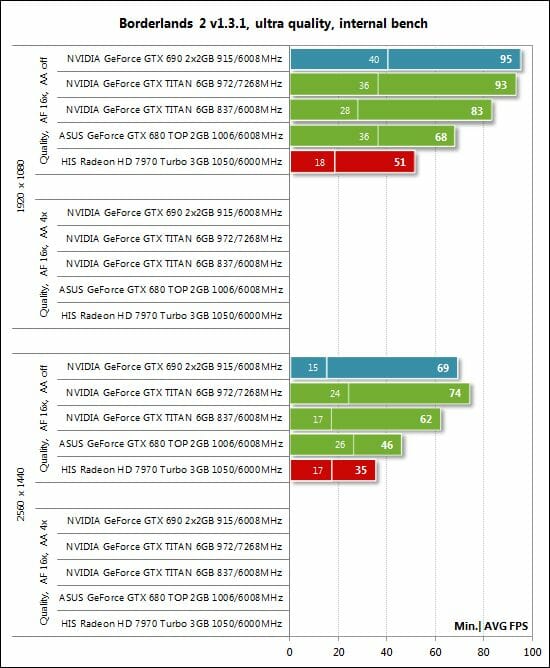

Borderlands 2

Things get back to normal in Borderlands 2:

Nvidia is ahead of AMD here, the new GeForce GTX Titan being 22 to 35% faster than the GeForce GTX 680 and 10 to 12% slower than the GTX 690. The overclocked Titan even beats the dual-processor opponent at the hardest settings.

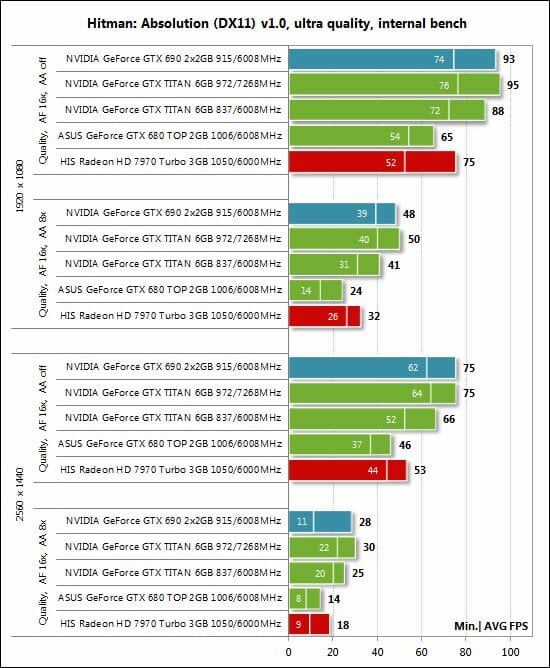

Hitman: Absolution

The GeForce GTX Titan is faster than the GTX 680 by the expected 34 to 44% without 8x MSAA, just like in most other tests, but when we enable antialiasing, the gap grows to 71-79%, the largest gap between these two cards in this review. The GTX 680 seems to lack both the graphics memory and the memory bandwidth to perform faster here. The overclocked Titan catches up with the dual-processor GeForce GTX 690 and even beats the latter in terms of bottom speed.

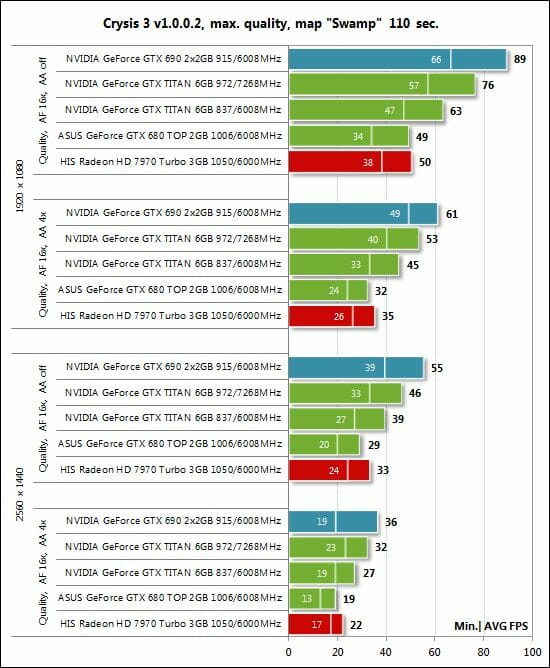

Crysis 3

And here’s the recently released Crysis 3:

The Radeon HD 7970 GHz Edition is slightly faster than the GeForce GTX 680, while the GeForce GTX Titan beats the latter by 29 to 42%. The Titan is 25-29% behind the dual-processor GeForce GTX 690, and our overclocking narrows the gap to 11-16%.

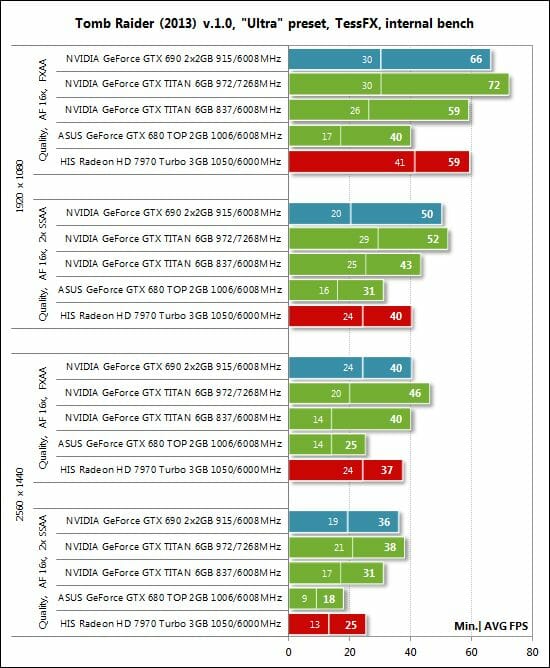

Tomb Raider (2013)

The Radeon HD 7970 GHz Edition is impressive in the newest game of our test session, being only slightly slower than the GeForce GTX Titan. The latter also looks good, beating the GeForce GTX 680 by 39-72%. When overclocked, it even outperforms the dual-processor GeForce GTX 690.

Performance Summary

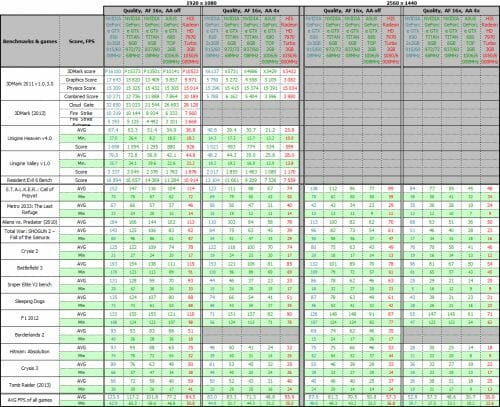

Here is a table with full test results.

Now we can move on to our summary charts.

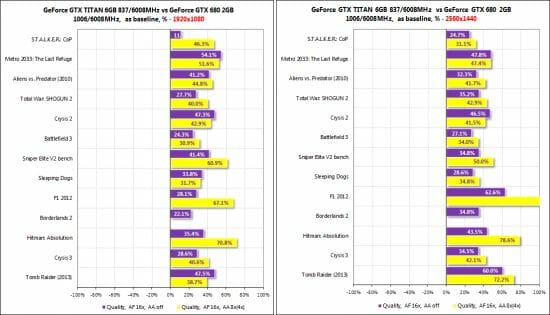

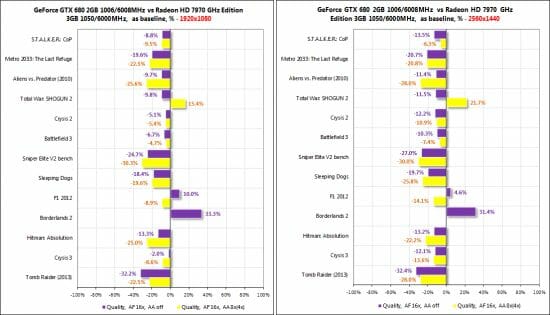

The summary diagrams are based on the gaming tests only. First of all, let’s check out the difference between the new GeForce GTX Titan and the GeForce GTX 680, the latter serving as a baseline.

The Titan is 34-47% ahead at 1920×1080 and 39-55% ahead at 2560×1440 on average across all the tests. We can note that it enjoys a larger advantage at high image quality settings, particularly when full-screen antialiasing is turned on. Its large amount of onboard memory with a 384-bit bus shows its worth then. The increased number of shader processors, texture-mapping units and raster operators available in the GK110 chip shouldn’t be forgotten, too.

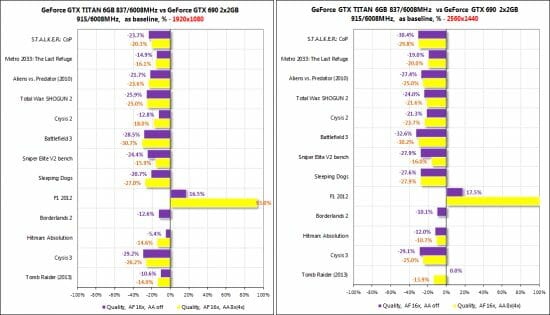

Next, let’s see how much slower the GeForce GTX Titan is than the dual-processor GeForce GTX 690. Let us remind you that both carry the same recommended price of $999.

Except for the odd results in F1 2012, the Titan trails the GTX 690 by about the same margin in each game. It is 12 to 17% slower at 1920×1080 across all gaming tests and 7 to 19% slower at 2560×1440. The effect of the larger onboard memory and its wider interface can be observed here, too.

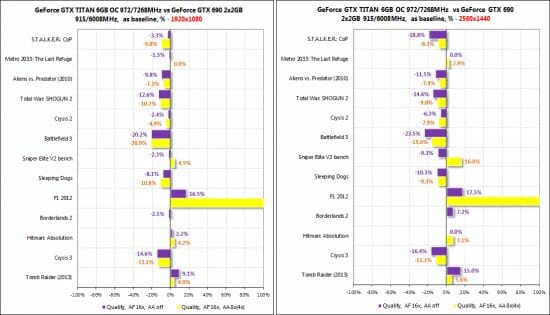

When the GeForce GTX Titan is overclocked from 837/876/6008 MHz to 972/1011/7268 MHz, its gap to the GeForce GTX 690 narrows. The overclocked Titan even wins some of our tests:

The last pair of summary diagrams is not strictly related to the topic of our review, but we want to compare the GeForce GTX 680 and the Radeon HD 7970 GHz Edition. We compared the GTX 680 against the HD 7970 about a year ago and found the Nvidia card superior in most tests. Since then, however, AMD has not only cut the recommended price of its high-end card but also increased its clock rates from 925/5500 to 1050/6000 MHz (hence the GHz Edition name). Both the Catalyst and GeForce drivers have received optimizations, too, and new games have come out. So, here is where this competition stands now:

As we can see, the Radeon HD 7970 GHz Edition is now on the winning side, which is bad news for Nvidia. The GeForce GTX 680 comes out on top only in Borderlands 2, in the high-quality mode of Total War: Shogun 2 – Fall of the Samurai, and in F1 2012 (without MSAA). The Radeon is ahead in every other game.
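A quick calculation shows how large AMD’s clock increase actually is. This sketch takes the quoted 925/5500 and 1050/6000 MHz figures at face value, treats the memory numbers as effective GDDR5 data rates, and assumes the Radeon HD 7970’s 384-bit memory bus, a figure not stated in this section. The names are illustrative only:

```python
# Clock figures quoted above for the Radeon HD 7970: (core MHz, effective memory MHz)
ORIGINAL = (925, 5500)
GHZ_EDITION = (1050, 6000)
BUS_WIDTH_BITS = 384  # assumption: HD 7970 memory bus width, not given in this section


def gain_pct(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new / old - 1.0) * 100.0


def peak_bandwidth_gbs(effective_mhz: float, bus_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s."""
    return effective_mhz * 1e6 * bus_bits / 8 / 1e9


core_gain = gain_pct(ORIGINAL[0], GHZ_EDITION[0])
memory_gain = gain_pct(ORIGINAL[1], GHZ_EDITION[1])
print(f"core clock +{core_gain:.1f}%, memory clock +{memory_gain:.1f}%")
print(f"peak bandwidth {peak_bandwidth_gbs(ORIGINAL[1], BUS_WIDTH_BITS):.0f} -> "
      f"{peak_bandwidth_gbs(GHZ_EDITION[1], BUS_WIDTH_BITS):.0f} GB/s")
```

On paper that is about 13.5% more core clock and 9.1% more memory bandwidth (roughly 264 to 288 GB/s under the bus-width assumption). The review attributes the Radeon’s improved standing to these clocks together with driver optimizations and the newer game selection.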

CUDA and OpenCL Performance

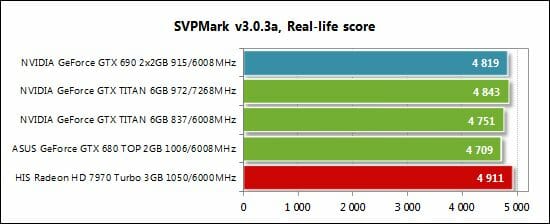

Besides our traditional gaming tests, we want to check out the GK110 GPU in computing and encoding applications. First up is the SmoothVideo Project benchmark, SVPMark 3.0.3a (the real-life test score):

The GeForce GTX Titan shows nothing special here: it scores close to the GeForce GTX 680 and gains little from overclocking.

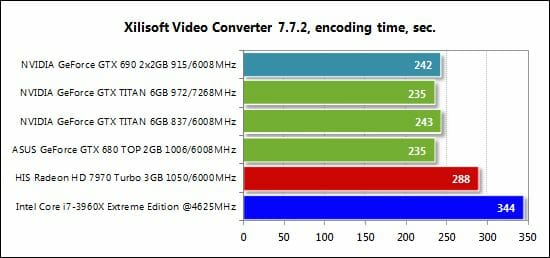

Next, we measured HD video encoding speed in Xilisoft Video Converter 7.7.2, which supports both AMD and Nvidia GPUs. We converted a 2.5 GB Full-HD video for the iPad 4; the resulting file was about 750 MB. Lower times are better in this diagram:

In this test Nvidia’s GPUs outperform their AMD opponents as well as the six-core Intel CPU, but the GeForce GTX Titan is no faster than the regular GeForce GTX 680, and the dual-processor GeForce GTX 690 offers no substantial benefit either. Xilisoft Video Converter doesn’t seem to use all of the GK110’s capabilities.

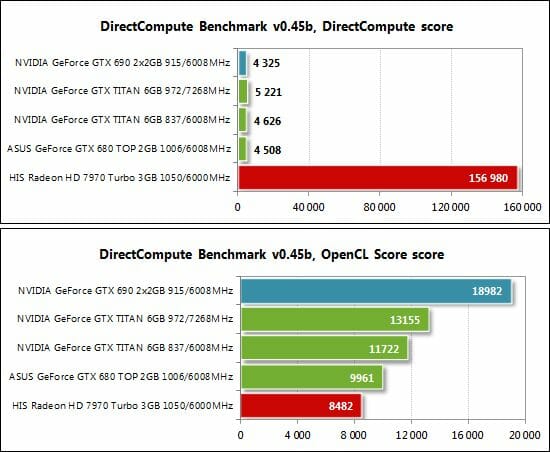

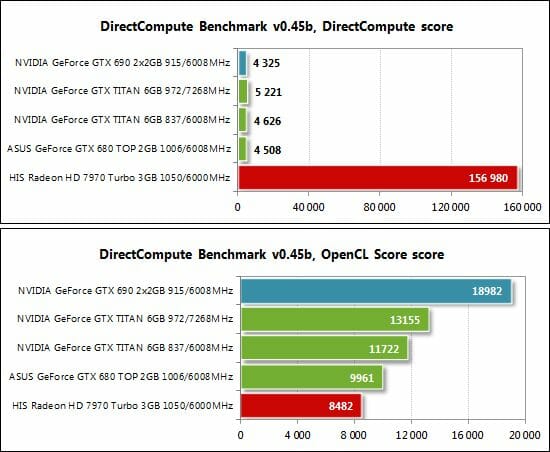

The next test is DirectCompute Benchmark version 0.45b. We show you the DirectCompute and OpenCL scores in the diagram:

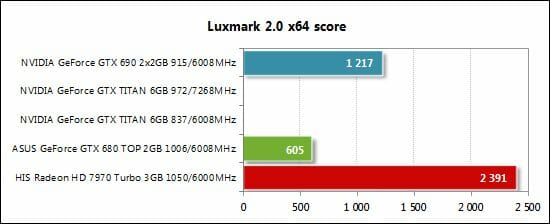

We also tried to test the GK110 in the popular LuxMark, but the latest version, 2.0, did not support the GeForce GTX Titan and aborted with an error. So we could only benchmark the older cards:

The GeForce GTX Titan is probably about as fast as the GeForce GTX 690 here, but we can only confirm that once a GK110-compatible version of LuxMark comes out. For now, we can only say that the Radeon series is much faster in this test.

Conclusion

Our impressions of the Nvidia GeForce GTX Titan are rather mixed. On the one hand, it is undoubtedly the fastest single-GPU graphics card available today. Its power consumption is not much higher than that of earlier flagship products from AMD and Nvidia, and it is even quieter. It supports every technology implemented in the GeForce GTX 680 and introduces some new ones, such as GPU Boost 2.0. And its performance is truly astonishing: it is some 50% (or even more) faster than the former flagship GeForce GTX 680. In recent years we haven’t seen such a dramatic jump in sheer speed even between two GPU generations, let alone between GPUs of the same family. The GeForce GTX Titan was meant to prove Nvidia’s technological superiority, and it does so in spectacular fashion.

On the other hand, the GeForce GTX Titan is priced very high. It is 10-20% slower than the dual-processor GeForce GTX 690 yet comes at the same recommended price, and it will probably cost even more than that at retail for the next couple of months. Moreover, people who don’t mind AFR (alternate frame rendering) may prefer a CrossFireX configuration of two Radeon HD 7970 GHz Edition cards, which is going to be faster in most games, albeit hotter and more power-hungry (secondary factors for owners of such premium-class graphics subsystems anyway). Thus, the GeForce GTX Titan looks like a perfect luxury product, but its price is hard to justify. By the way, if you are into such expensive toys, you may be interested to know that Nvidia claims three GeForce GTX Titan cards to be faster than two GeForce GTX 690s.

The GPU Boost 2.0 technology raises some questions, too. Overclocking is now more difficult, and the card’s performance depends more on its temperature. Non-reference Titans with custom coolers would be especially interesting, but we don’t think they will ever come out. Since there is still plenty of time until the next generation of GPUs, Nvidia might consider pleasing its fans with a GeForce GTX 685 featuring a 384-bit bus and 4 GB of memory, because the GeForce GTX 680 doesn’t look strong against the Radeon HD 7970 GHz Edition, which has become both faster and cheaper.