Adaptec RAID ASR-5805 SAS RAID Controller Review

“Dual-core processor working at 1.2GHz frequency” – you may think that this line was taken from one of the contemporary netbook descriptions. In fact, this processor is the heart of the 5th series of Adaptec RAID controllers. Read more in our review.

In a steady and commonsensical way Adaptec has come to manufacture RAID controllers for hard disk drives with SAS interface. Having released a few trial models, the company has begun to make controllers based on a unified serial architecture. These are inexpensive entry-level 4-port controllers of the first and second series (the series number is the first numeral in the model name) and mainstream third series controllers. Of course, the high-performance fifth series, which is the current peak in the evolution of Adaptec’s controllers, is a logical continuation of the previous products.

It is interesting to compare Adaptec’s controller series, by the way. The first series has no integrated processor and cache and is equipped with a PCI Express x4 interface. The third series comes with 500MHz or 800MHz Intel 80333 processors and with 128 to 256 megabytes of cache; the 4-port models have PCI Express x4 while the 8-port model has PCI Express x8 (by the way, we tested the third series ASR-3405 model earlier). The fifth series has transitioned to PCI Express x8 entirely and every model, save for the 4-port one, is equipped with 512 megabytes of cache (the cache of the junior model ASR-5405 is cut down to 256MB). Every controller of the fifth series uses the most powerful processor currently installed on RAID controllers: it is a dual-core chip clocked at 1.2GHz.

We have not mentioned the second series because it seems to be a simplification of the fifth rather than of the third series. Both its models have four ports, i.e. one internal SFF-8087 or one external SFF-8088 connector, and PCI Express x8 together with a dual-core processor. The processor frequency is 800MHz, which is lower than in the fifth series. The second series also has a smaller cache, 128MB, probably to be positioned as junior relative to the third series. Another indication of the origin of the second series is that its models support Adaptec Intelligent Power Management that was first featured in the fifth series. This technology means that unused disks are shut down or switched into sleep mode in order to save power. And the distinguishing feature of the second series is that it only supports RAID0, 1 and 10 array types. It cannot work with parity-based arrays (RAID5, RAID6 and their two-level derivatives).

So, we are going to test Adaptec’s fifth-series model called ASR-5805.

Closer Look at Adaptec RAID ASR-5805

First off, the fifth series is one of the largest controller families produced by Adaptec. Currently it includes as many as seven members ranging from the basic ASR-5405 to the 28-port ASR-52445. The nomenclature is not confusing at all once you know how to decipher the model names: the first numeral is the series number. It is followed by one or two numerals denoting the number of internal ports. Next go two numerals denoting the number of external ports and the interface type. Since every model is equipped with PCI Express x8, every name ends in “5”. Adaptec’s older models may have “0” at the end of the model name, which indicates PCI-X.
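The naming scheme described above can be sketched as a small parser. This is only an illustration: the `parse_model` helper and the `AdaptecModel` type are hypothetical names of our own, and the interface codes are limited to the two mentioned in the text (5 for PCI Express, 0 for PCI-X).

```python
import re
from typing import NamedTuple


class AdaptecModel(NamedTuple):
    series: int
    internal_ports: int
    external_ports: int
    interface: str


# Interface codes as described in the text; other codes are not covered here.
INTERFACES = {"5": "PCI Express", "0": "PCI-X"}


def parse_model(name: str) -> AdaptecModel:
    """Split a name such as 'ASR-5805' into its parts (hypothetical helper)."""
    # series digit, one or two digits of internal ports,
    # one digit of external ports, one digit for the interface type
    match = re.fullmatch(r"ASR-(\d)(\d{1,2})(\d)(\d)", name)
    if match is None:
        raise ValueError(f"unrecognised model name: {name!r}")
    series, internal, external, iface = match.groups()
    return AdaptecModel(int(series), int(internal), int(external),
                        INTERFACES.get(iface, "unknown"))


if __name__ == "__main__":
    for model in ("ASR-5405", "ASR-5805", "ASR-52445"):
        print(model, parse_model(model))
```

Under this reading, ASR-52445 decodes to 24 internal and 4 external ports, which matches the 28 ports mentioned above.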

The unified architecture implies unified specifications. The four-port model has half the standard amount of memory while the other models each have the following: a dual-core 1.2GHz processor, 512 megabytes of memory, PCI Express x8 interface, support for an optional battery backup unit (BBU), support for up to 256 SATA/SAS devices (using appropriate racks and expanders). By the way, the previous series supported up to 128 devices only. It means that Adaptec has not only endowed the new series with faster processors but also improved the firmware.

Talking about firmware, there are array building limitations due to the peculiarities of the controllers’ architecture. Few users are going to encounter them, but anyway. The fifth series (and the second series, too, for that matter) supports up to 64 arrays on one controller (the third series supports only 24 arrays), up to 128 disks in one RAID0 array, and up to 32 disks in rotated parity arrays RAID5 and RAID6.

The series 5 controllers support nearly every popular type of a RAID array, namely:

- Single disk

- RAID0

- RAID1

- RAID1E

- RAID5

- RAID5EE

- RAID6

- RAID10

- RAID50

- RAID60

We guess a bit of clarification is necessary here. While the traditional RAID0, 1, 5, 6 and combinations thereof are well known to most users, RAID1E and RAID5EE may be less familiar to you. How do they work then?

RAID1E arrays are closely related to RAID1 as they use data mirroring, too. However, not the whole disks but certain blocks of the disks are mirrored in them. This approach allows building arrays out of three or more disks (a two-disk RAID1E is effectively an ordinary RAID1). The following diagram makes the point clearer (the letter M denotes a mirror block).

So, what do we have in the end? A RAID1E array has data redundancy and will survive the failure of one disk. As opposed to RAID1 and RAID10, it can be built out of an odd number of disks. A RAID1E offers good speeds of reading and writing. As the stripes are distributed among the different disks, a RAID1E is going to be faster than a RAID1 and at least comparable to a RAID10 (unless the controller can read data from both disks in the RAID1 mirrors simultaneously). You should not view RAID1E as an ideal type of an array, though. You lose the same disk capacity as with the other mirroring array types, i.e. 50%. RAID10 is better in terms of data security: the number of disks being equal, it can survive a failure of two disks at once in some cases. Thus, the main feature of RAID1E is that it allows building a mirroring array out of an odd number of disks and provides some performance benefits, which may come in handy if your controller cannot read from both disks in ordinary mirrors simultaneously.
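To illustrate the block-level mirroring, here is a minimal sketch that prints one possible RAID1E layout. We assume the commonly documented scheme in which every row of data blocks is followed by a row of mirror blocks shifted by one disk. The function name `raid1e_layout` is our own, and the actual on-disk layout used by Adaptec's firmware may differ.

```python
def raid1e_layout(num_disks: int, num_data_rows: int) -> list[list[str]]:
    """Return rows of block labels; 'M<n>' is the mirror of data block '<n>'.

    Assumed layout: each row of data blocks is followed by a row holding
    the same blocks shifted one disk to the right.
    """
    if num_disks < 3:
        raise ValueError("RAID1E is meant for three or more disks")
    rows = []
    block = 1
    for _ in range(num_data_rows):
        data = [str(block + i) for i in range(num_disks)]
        # Shift by one disk so a block and its mirror never share a disk.
        mirror = ["M" + data[(i - 1) % num_disks] for i in range(num_disks)]
        rows.extend([data, mirror])
        block += num_disks
    return rows


if __name__ == "__main__":
    for row in raid1e_layout(num_disks=3, num_data_rows=2):
        print("  ".join(f"{label:>3}" for label in row))
```

With three disks, block 1 sits on the first disk and its mirror M1 on the second, so the loss of any single disk leaves one copy of every block intact.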

A RAID5EE array is a far more curious thing. Like RAID5, on which it is based, it follows the principle of ensuring data security by storing stripes with XOR checksums. And these stripes rotate, meaning that they are distributed uniformly among all the disks of the array. The difference is that a RAID5EE needs a hot-spare disk that cannot be used as a replacement disk for other arrays. This disk does not wait for a failure to happen, as an ordinary replacement disk does, but takes part in the operation of the working array. Data is located as stripes on all the disks of the array including the replacement disk while the capacity of the replacement disk is distributed uniformly among all of the disks in the array. Thus, the replacement disk becomes a virtual one. The disk itself is utilized but its capacity cannot be used because it is reserved by the controller and unavailable for the user. In the earlier implementation called RAID5E this virtual capacity was located at the end of each disk. In a RAID5EE, it is located on the entire array as uniformly distributed blocks. To make the point clearer, we suggest that you take a look at the diagram where P1, P2, P3, P4 are the checksums of the appropriate full stripes while HS is the uniformly distributed capacity of the replacement disk.

So, if one disk fails, the lost information is restored into the “empty” stripes (marked as HS in the diagram) and the array becomes an ordinary RAID5.
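The recovery step relies on the XOR checksum of each stripe. The following sketch shows the principle on a toy stripe of three data blocks. The helper names `xor_blocks` and `rebuild_missing` are ours, and the code says nothing about how the controller actually schedules a rebuild.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equally sized blocks byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover the single lost block of a stripe from the rest plus parity."""
    return xor_blocks(surviving + [parity])


if __name__ == "__main__":
    stripe = [b"\x01\x02", b"\x10\x20", b"\xaa\x55"]  # toy data blocks
    parity = xor_blocks(stripe)                        # checksum block (P)
    # Pretend the disk holding stripe[1] has failed.
    restored = rebuild_missing([stripe[0], stripe[2]], parity)
    print(restored == stripe[1])
```

In a RAID5EE the restored block would be written into the reserved HS space of the stripe rather than onto a separate standby disk.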

What are the benefits of this approach? The replacement disk is not idle but takes part in the array’s work, improving the speed of reading thanks to the higher number of disks each full stripe is distributed among. The drawback of such arrays is obvious, too. The replacement disk is bound to the array. So if you build a few arrays on one controller, you will have to dedicate one replacement disk to each of them, which increases your costs and requires more room in the server.

Now let’s get back to the controller. Thanks to high integration Adaptec has made the controllers rather short physically. The 4- and 8-port models are even low-profile. The BBU does not occupy an expansion slot in your system case but is installed at the back of the controller itself.

The BBU is actually installed on a small daughter card which in its turn is fastened to the controller with plastic screws. We strongly recommend using a BBU because data is usually too important to risk losing it for the sake of paltry economy. Moreover, deferred writing is highly important for the performance of RAID arrays as we learned in our test of a Promise controller that worked without a BBU. Enabling deferred writing without a BBU is too risky: we can do so in our tests but would never run a RAID controller in this manner for real-life applications.

The list of supported operating systems is extensive and covers almost every existing OS save for the most exotic ones. Besides all versions of Windows starting from Windows 2000 (and including 64-bit versions), the controller can work under a few popular Linux distributions, FreeBSD, SCO Unix and Solaris. There are also drivers for VMWare. For most of these OSes there are appropriate versions of Adaptec Storage Manager, the exclusive software tool for managing the controller. The tool is highly functional and allows doing any operations with the controller and its RAID arrays without entering its BIOS. Adaptec Storage Manager offers easy access to all the features and provides a lot of visual information about the controller’s operation modes.

Testbed and Methods

The following benchmarks were used:

- IOMeter 2003.02.15

- WinBench 99 2.0

- FC-Test 1.0

Testbed configuration:

- Intel SC5200 system case

- Intel SE7520BD2 mainboard

- Two Intel Xeon 2.8GHz CPUs with 800MHz FSB

- 2 x 512MB PC3200 ECC Registered DDR SDRAM

- IBM DTLA-307015 hard disk drive as system disk (15GB)

- Onboard ATI Rage XL graphics controller

- Windows 2000 Professional with Service Pack 4

The controller was installed into the mainboard’s PCI-Express x8 slot. We used Fujitsu MBA3073RC hard disk drives for this test session. They were installed into the standard boxes of the SC5200 system case and fastened with four screws at the bottom. The controller was tested with four and eight HDDs in the following modes:

- RAID0

- RAID10

- Degraded 8-disk RAID10 with one failed HDD

- RAID5

- Degraded 8-disk RAID5 with one failed HDD

- RAID6

- Degraded RAID6 with one failed HDD

- Degraded RAID6 with two failed HDDs

As we try to cover as many array types as possible, we will publish the results of degraded arrays. A degraded array is a redundant array in which one or more disks (depending on the array type) have failed but the array still stores data and performs its duties.

For comparison’s sake, we publish the results of a single Fujitsu MBA3073RC hard disk drive on an LSI SAS3041E-R controller as a kind of a reference point. We want to note that this combination of the HDD and controller has one problem: its speed of writing in FC-Test is very low.

By default, the controller suggests a stripe size of 256 kilobytes for arrays, but we always set it to 64KB. This will help us compare the controller with other models (most competitor products use a stripe size of 64KB) and, moreover, this is the data block size every version of Windows, up to Windows Vista SP1, uses to process files. If the sizes of the data block and stripe coincide, we can expect a certain performance growth thanks to reduced latencies.

By the way, choosing the stripe size for your RAID array is not an obvious matter. If the stripe is too small, the number of operations the array has to do increases, which may affect its performance. On the other hand, if the array is mostly used for an application (a database, for example) that works with data blocks of a specific size, it may be reasonable to set the stripe size at the same value. This question used to be debated hotly in the past. By now, applications have generally learned to access data in blocks of different sizes and the speed of arrays has grown up, yet this factor should still be taken into account when you are building your array.
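To make the role of the stripe size more tangible, here is a rough sketch of how a RAID0 array might map logical offsets to disks and how many disks a single request touches. It assumes a plain round-robin layout and uses "stripe size" in the same sense as the text (the amount of data placed on one disk before moving to the next). The function names are ours.

```python
KB = 1024


def raid0_locate(offset: int, stripe_size: int, num_disks: int) -> tuple[int, int]:
    """Map a logical byte offset to (disk index, byte offset on that disk)."""
    stripe_index = offset // stripe_size
    disk = stripe_index % num_disks
    row = stripe_index // num_disks
    return disk, row * stripe_size + offset % stripe_size


def disks_touched(offset: int, length: int, stripe_size: int, num_disks: int) -> int:
    """Count the disks involved in one request of `length` bytes."""
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    return min(last - first + 1, num_disks)


if __name__ == "__main__":
    # A 64KB request on a 4-disk array, aligned and then shifted by 32KB.
    for stripe in (64 * KB, 256 * KB):
        for start in (0, 32 * KB):
            n = disks_touched(start, 64 * KB, stripe, 4)
            print(f"stripe {stripe // KB}KB, offset {start // KB}KB: {n} disk(s)")
```

An aligned 64KB request lands on exactly one disk with a 64KB stripe, while an unaligned one is split between two disks. This is one way to see why matching the stripe to the typical request size can matter.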

We used the latest BIOS available at the manufacturer’s website for the controller and installed the latest drivers. The BIOS was version 5.2.0 build 16116 and the driver was version 5.2.0.15728.

Performance in Intel IOMeter

Database Patterns

In the Database pattern the disk array is processing a stream of requests to read and write 8KB random-address data blocks. The ratio of read to write requests is changing from 0% to 100% (stepping 10%) throughout the test while the request queue depth varies from 1 to 256.

We will be discussing graphs and diagrams but you can view the data in tabled format using the following links:

- Database, RAID1+RAID10

- Database, RAID5+RAID6

Everything is normal at a queue depth of 1. The arrays show good scalability. The degraded RAID10 does not slow down and, together with the normal 8-disk RAID10, coincides with the 4-disk RAID0, just as the theory has it.

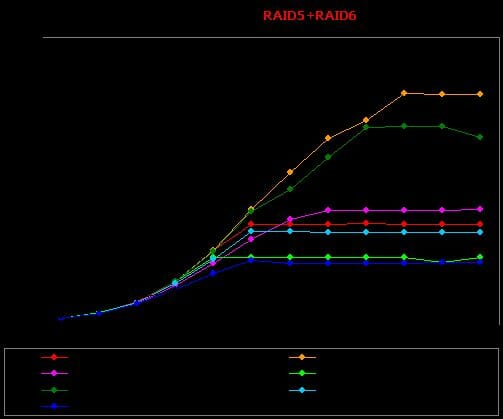

The checksum-based arrays are very good at a queue depth of 1, too. In our earlier tests with four WD Raptors with SATA interface we could observe a sudden performance hit at write operations, but the new generation of RAID controllers proves that a 4-disk RAID6 can be as fast as a single HDD thanks to the large amount of cache memory, low disk access time and fast architecture. The 4-disk RAID5 proves to be faster than the single disk at writing. The 8-disk arrays are far ahead.

The RAID6 slows down only a little when one of its disks fails, but the failure of two disks leads to a serious performance hit. Having to constantly restore data from two checksums (writing to a rotated parity array involves read operations), the controller gets much slower: the degraded array falls behind the single disk. The RAID5 suffers greatly even from losing one disk: its performance is worse than that of the RAID6 without one disk, yet not as bad as that of the RAID6 without two disks.
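The remark that writing to a rotated parity array involves reading can be illustrated with the classic read-modify-write update of a single-parity (RAID5) stripe. This is a generic textbook sketch and not a description of Adaptec's firmware. The function names are ours, and the count of four disk operations assumes that nothing is served from cache.

```python
def xor(a: bytes, b: bytes) -> bytes:
    """XOR two equally sized blocks."""
    return bytes(x ^ y for x, y in zip(a, b))


def raid5_small_write(old_data: bytes, new_data: bytes,
                      old_parity: bytes) -> tuple[bytes, int]:
    """Return the new parity block and the number of disk operations.

    The controller reads the old data and old parity (2 reads), then
    writes the new data and new parity (2 writes).
    """
    new_parity = xor(xor(old_parity, old_data), new_data)
    return new_parity, 2 + 2


if __name__ == "__main__":
    stripe = [b"\x0f\x0f", b"\xf0\x01", b"\x33\x44"]
    parity = xor(xor(stripe[0], stripe[1]), stripe[2])
    new_parity, ios = raid5_small_write(stripe[1], b"\xde\xad", parity)
    print(new_parity.hex(), ios)
```

In a degraded array the old data or the old checksum may sit on the failed disk, so the controller first has to reconstruct it from the remaining blocks, which adds even more reads.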

The controller’s performance grows at a queue depth of 16 requests. The RAID10 arrays are close to the RAID0 consisting of half the number of disks at high percentages of writes. At high percentages of reads, they are close to the RAID0 arrays consisting of the same number of disks. The RAID10 are not faster than the RAID0 at reading, which means that the controller can read from both disks in a mirror but has no read optimization (it does not send the read request to whichever disk in the mirror can perform it faster).

The degraded RAID10 shows no sign of its degraded state at high percentages of writes, which is good, but loses ground at high percentages of reads. Its performance does not slump to the level of the 4-disk arrays, meaning that the controller is reading from both disks in the non-defective mirrors.

The RAID5 and RAID6 arrays speed up, too. The RAID6 arrays are nearly all right whereas the RAID5 are far from ideal. The latter’s graphs fluctuate, indicating some flaws in the controller’s firmware, especially with respect to degraded RAID5.

We say that the RAID6 are nearly all right because of the 4-disk array’s performance: it is unexpectedly slower than the single disk at high percentages of writes. The 8-disk array survives the loss of one disk more or less acceptably but the loss of a second disk affects its performance greatly: the degraded RAID6 without two disks is ahead of the single HDD at reading but cannot compete with the latter at writing.

When the queue grows even longer, we have to take back the supposition we have expressed above: the controller can actually find the faster disk in a mirror. This is especially conspicuous if you compare the 4-disk arrays: the RAID10 is much faster than the RAID0 at pure reading. The degraded RAID10 is a disappointment. It copes with writing well but fails at reading. As a result, it is just slightly better than the 4-disk RAID0 through most loads.

It is interesting to compare these numbers to those of the 3ware 9690SA controller that has a processor with completely different architecture. The two products are overall equals: the Adaptec is somewhat more effective at writing whereas the 3ware is somewhat faster at high percentages of reads. Besides, the 3ware’s degraded RAID10 has a smaller performance hit.

There are no serious changes in the standings of this group except that the RAID5 arrays are now somewhat better at reading, especially the degraded array. As a result, we see an odd picture with the 8-disk arrays: a RAID6 is ahead of a RAID5 at pure reading! The degraded RAID6 behave in the same way: the array slows down without one disk and slumps below the level of the single HDD when two of its disks fail.

If we compare this to the results of the 3ware 9690SA controller, we can see that the latter is better with RAID6 under any loads and is faster with RAID5 at reading. The Adaptec’s RAID5 arrays are faster at high percentages of writes.

Disk Response Time

IOMeter is sending a stream of requests to read and write 512-byte data blocks with a request queue depth of 1 for 10 minutes. The disk subsystem processes over 60 thousand requests, so the resulting response time doesn’t depend on the amount of cache memory.

Every array is inferior to the single HDD in terms of read response time. The 8-disk arrays are not much worse than the latter, though. It is the 4-disk arrays (save for the RAID10) that are slower by about 1 millisecond, which is a considerable gap. As usual, the RAID10 are the best thanks to choosing the faster disk in a mirror.

The degraded RAID10 feels good while the degraded arrays with rotated parity do not. They take last places due to the necessity of recovering data from checksums. Of course, the degraded RAID6 without two disks is the worst array here because it has to recover data from two checksums.

It is easy to explain this diagram: the write response time is determined by the total cache of the controller and disks. Thus, the 8-disk arrays are better than the 4-disk ones, and the RAID0 are ahead of the RAID10 because, from a caching standpoint, each mirror of a RAID10 can only be viewed as a single disk. Among the checksum based arrays the 8-disk ones are ahead, too. The RAID5 are better than the RAID6 thanks to processing only one rather than two checksums.

We are impressed by the results of the degraded RAID5 and RAID6, especially of the RAID6 w/o two disks. The overhead associated with the need to read the checksum data and process them is very small. The credit goes to the employed processor. Even with two failed disks the RAID6 has a write response time comparable to that of the single HDD, which is a very good result!

Random Read & Write Patterns

Now we’ll see the dependence of the arrays’ performance in random read and write modes on the data chunk size.

We will discuss the results of the disk subsystems at processing random-address data in two variants based on our updated methodology. For small-size data chunks we will draw graphs showing the dependence of the number of operations per second on the data chunk size. For large chunks we will compare performance depending on data-transfer rate in megabytes per second. This approach helps us evaluate the disk subsystem’s performance in two typical scenarios: working with small data chunks is typical of databases. The number of operations per second is more important than sheer speed then. Working with large data blocks is nearly the same as working with small files, and the traditional measurement of speed in megabytes per second is more relevant for such load.

We will start out with reading.

The load is too low for the RAID arrays to show their best at simple random reading. Every array is slower than the single disk. The degraded RAID10 is surprisingly good especially as the other RAID10 arrays do not differ much from the RAID0 (we might expect them to be faster by means of reading from the mirrors).

It is similar with the checksum-based arrays: they are all somewhat slower than the single disk, the 4-disk arrays being inferior to the 8-disk ones. The RAID6 degraded by two disks is the worst array again, yet its performance is not really too bad.

When the arrays are processing large data blocks, the sequential speed becomes the decisive factor and the RAID0 arrays go ahead. Well, the 8-disk RAID10 is better than the 4-disk RAID0 which in its turn is about as fast as the 4-disk RAID10: the controller seems to be able to read data from both disks in the mirrors. Even the degraded RAID10 is ahead of the 4-disk RAID0. The loss of a disk in one mirror does not prevent it from reading from both disks in the remaining mirrors.

It is due to the higher sequential read speed that the RAID5 are faster than the RAID6. The arrays of the latter type are rather too slow, though. The performance slump of the 4-disk RAID5 at very large data blocks is no good: 200MBps should not be the limit for this array.

The degraded arrays behave predictably: the RAID5 and RAID6 slow down without one disk. The RAID6 with two failed disks is comparable to the single HDD.

Random writing goes next.

It is cache memory that determines the results of writing in small data chunks. The controller shows almost ideal operation: nice scalability, perfectly shaped graphs and high speeds (the speeds are higher than with the 3ware 9690SA controller we tested recently).

The number of disks in the array is still important for the checksum based arrays when they are writing data in small blocks, but the checksum calculations are a negative factor. The RAID6 have to process two checksums, therefore even the degraded RAID5 proves to be faster than the 8-disk RAID6. The degraded RAID6 without two disks feels surprisingly good here. Of course, it takes last place and is slower than the single HDD, but its performance is good enough for an array that has not only to read data but also restore them from two checksums in order to perform a write operation.

There is something fundamentally wrong in this test: the RAID0 are for some reason slower than the RAID10 when processing such data blocks. This is especially clear with the 8-disk RAID0 because this array has no reason to be unable to exceed the 120MBps mark. And the 4-disk RAID0 has no reason to be inferior to every other array, and even to the single HDD, at large data blocks.

The behavior of the degraded RAID10 is understandable, though. It behaves like the RAID0 array, i.e. each mirror behaves like a single disk. But why don’t the ordinary RAID10 behave like that? And what is going on with the arrays at large data blocks? Distributing the data blocks among the stripes of a RAID0 array is quite easy we might think, but there is some problem here.

Yes, the Adaptec controller has problems with writing in large data blocks indeed. The checksum-based arrays are almost as fast as the single disk, the 4-disk arrays being faster than the 8-disk ones. But each of the three degraded arrays has sped up suddenly, outperforming their healthy counterparts. We might explain this by the degraded arrays’ not having to process checksums that go to a failed disk, but the RAID6 without two disks would win then. And this wouldn’t provide such a high performance gain anyway. So, the controller behaves very oddly indeed when writing data in large blocks.

Sequential Read & Write Patterns

IOMeter is sending a stream of read and write requests with a request queue depth of 4. The size of the requested data block is changed each minute, so that we could see the dependence of an array’s sequential read/write speed on the size of the data block. This test is indicative of the highest speed a disk array can achieve.

The 8-disk RAID0 delivers an astonishing performance! The controller’s fast processor is strong enough for reading over 100MBps from each of the eight disks. The controller obviously reads from both disks in the mirrors, although not ideally: the 4-disk RAID10 is about as fast as the RAID0 while the 8-disk RAID10 is slower than its mirror-less counterpart. It is good that reading from both disks in mirrors works even on very small data blocks.

The checksum-based arrays have lower speeds than the RAID0, but that’s logical: one and two blocks are lost in each stripe with RAID5 and RAID6, respectively, due to the writing of checksums. As a result, the read speed degrades when all of the disks are being accessed. It is clear how dear in terms of performance the loss of disks is for the degraded arrays. Besides the reduction of speed due to the decreased size of the array, the degraded arrays lose about 200MBps more. Thus, the cost of data recovery equals the loss of two more disks when the degraded array is reading data. It is interesting that the RAID6 is almost indifferent to the loss of a second disk. The performance hit is only 100MBps, which is quite proportional to the reduction of the array size by one disk.

Well, the controller is not good at all at sequential writing. The performance of the RAID0 arrays is especially poor. They are slower than the single HDD! The degraded RAID10 is as bad as the RAID0: its graph almost coincides with the 4-disk RAID0’s (this explains the poor results in the test of writing large random-address data blocks). The controller might be suspected of having disabled deferred writing, but the full RAID10 arrays deliver normal speeds even though their graphs are not perfect, either. The zigzagging graphs indicate flaws in the controller’s firmware or driver.

The RAID5 and RAID6 arrays are not very good at writing, either. The 8-disk arrays are inferior to the single HDD whereas their 4-disk counterparts are considerably faster, even though they do not reach the level that might have been expected from the performance of the single HDD. The degraded arrays deliver excellent performance, the RAID6 without two disks being in the lead. And the graphs are zigzagging again.

We hope these problems occur only when the controller meets the specific synthetic load and that we will see normal results in FC-Test.

Multithreaded Read & Write Patterns

The multithreaded tests simulate a situation when there are one to four clients accessing the virtual disk at the same time, the number of outstanding requests varying from 1 to 8. The clients’ address zones do not overlap. We’ll discuss diagrams for a request queue of 1 as the most illustrative ones. When the queue is 2 or more requests long, the speed doesn’t depend much on the number of applications.

- IOMeter: Multithreaded Read RAID1+RAID10

- IOMeter: Multithreaded Read RAID5+RAID6

- IOMeter: Multithreaded Write RAID1+RAID10

- IOMeter: Multithreaded Write RAID5+RAID6

We want to say a word in defense of the 8-disk arrays: they only show their top speeds at queue depths other than 1. We have seen this before in our tests of top-performance controllers with SAS disks, so we know that you should have a look at the table with the numbers. It should be noted, however, that the Adaptec delivers high speed even at the minimum queue depth. Its results are the best among all the controllers we have tested so far.

When reading one thread, the arrays are ranked in a normal manner: the 8-disk arrays are in the lead, the RAID0 are ahead, followed by the RAID5 and RAID6. Interestingly, the 4-disk RAID10 is the fastest among the 4-disk arrays.

The standings change dramatically when there are two threads to be processed. The RAID10 have the smallest performance hit, even though they do not show the ability to parallelize the load by reading the threads from the different disks in the mirrors. The 8-disk arrays are all good, but the 4-disk ones have a difficult time. The degraded arrays, even the RAID6 without two disks, pass this test quite successfully, except for the RAID10 which is only as fast as the 4-disk RAID0.

When the number of read threads is increased to three and four, the RAID10 arrays lose their advantage, the 8-disk one having the biggest performance hit. The other arrays just slow down a little.

At one write thread we see those odd problems again. As a result, the degraded arrays cope best with writing. They are followed by the RAID10 whereas the 8-disk RAID5 and RAID6 and both RAID0 even fall behind the single HDD.

The addition of a second write thread brings about significant changes we cannot find a reasonable explanation for. For example, the degraded checksum-based arrays and all of the 4-disk arrays, excepting the RAID0, slow down (the 4-disk RAID6 and RAID10 have a considerable performance hit) whereas the other arrays speed up. The 8-disk RAID5 and RAID6 improve the speed twofold! And this is quite inexplicable because these arrays still have to process the same checksums with two write threads as with only one thread.

The number of write threads increases, and the performance of the 8-disk RAID5 and RAID6 falls below that of their 4-disk counterparts. As if to balance them off, the 4- and 8-disk RAID10 accelerate again. The degraded RAID10 differs from both the other degraded arrays and from the RAID10 and is about as slow as the 4-disk RAID0.

Web-Server, File-Server, Workstation Patterns

The controllers are tested under loads typical of servers and workstations.

The names of the patterns are self-explanatory. The request queue is limited to 32 requests in the Workstation pattern. Of course, Web-Server and File-Server are nothing but general names. The former pattern emulates the load of any server that is working with read requests only whereas the latter pattern emulates a server that has to perform a certain percent of writes.

- IOMeter: File-Server

- IOMeter: Web-Server

- IOMeter: Workstation

- IOMeter: Workstation, 32GB

The Web-Server pattern consists of read requests only. The RAID10 are somewhat faster than the RAID0 thanks to reading from the faster disk in the mirror even though this technique is not very efficient here (we have seen better results in our previous test sessions). The degraded RAID10 slows down heavily at long queue depths, being just a little faster than the 4-disk arrays. At short queue depths (up to 8 requests) it is about as fast as the healthy array.

The RAID5 and RAID6 arrays are equals here if they have the same number of disks. The degraded arrays have a difficult time, though. The degraded 8-disk RAID5 is only as fast as the 4-disk RAID5. The RAID6 slows down even more when one of its disks fails. When two disks fail, the RAID6 is barely faster than the single HDD.

The performance ratings show that the array type has little importance under such load while the number of disks in the array is the decisive factor.

When there are write requests in the load, the RAID10 are slower than the RAID0 at long queue depths although the arrays are close at short queue depths. The performance of the RAID0 grows at a faster rate as the queue gets longer.

The RAID6 arrays fall behind the RAID5 ones in the File-Server test. Take note of the behavior of the degraded 8-disk arrays: the performance of the degraded RAID5 is higher than that of the 4-disk RAID5 while the degraded RAID6 (without one disk) is slower than the 4-disk RAID6. When working with two failed disks the RAID6 is ahead of the single HDD at short queue depths but sinks to last place at long queue depths.

The number of disks is still the decisive factor, but the type of the array becomes important, too, if your server is going to process some write requests. The checksum-based arrays have lower performance but offer the higher fault tolerance of RAID6 and higher capacity than in RAID10.

The RAID0 arrays are in the lead under this Workstation load. The degraded RAID10 performs surprisingly well, being close to the healthy array at short queue depths.

As expected, the calculation of the second checksum lowers the performance of the RAID6 relative to the RAID5. The degraded arrays are good enough, except for the RAID6 without two disks, which is slower than the single HDD at every queue depth. Interestingly, the difference between the latter two disk subsystems is negligible at queue depths of 8 through 16: this must be the preferable load for the degraded RAID6.

Well, you should not build checksum-based arrays for workstations unless you need high data security. The 4-disk RAID0 outperforms the 8-disk RAID6 and is close to the RAID5. The 4-disk RAID10 looks good enough, too.

When the test zone is limited to 32 gigabytes, the RAID0 arrays get even faster than the RAID10 ones. The RAID5 arrays increase their advantage over the RAID6 ones, too. Take note that the degraded arrays do better with the limited test zone: the RAID6 without two disks is faster than the single HDD.

The RAID0 arrays improve their standings when the test zone is reduced to 32GB.

Performance in FC-Test

For this test two 32GB partitions are created on the virtual disk of the RAID array and formatted in NTFS and then in FAT32. Then, a file-set is created on it. The file-set is then read from the array, copied within the same partition and then copied into another partition. The time taken to perform these operations is measured and the speed of the array is calculated. The Windows and Programs file-sets consist of a large number of small files whereas the other three patterns (ISO, MP3, and Install) include a few large files each.
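The speed figure in this test is simply the size of the file-set divided by the time the operation took. Here is a minimal Python sketch of that arithmetic. The function name is ours, the sample numbers are hypothetical, and we assume decimal megabytes (1MB = 1,000,000 bytes), which the benchmark itself may or may not use.

```python
def throughput_mbps(total_bytes: int, seconds: float) -> float:
    """Average speed in MBps for an operation that moved total_bytes.

    Assumption: 1 MB = 1,000,000 bytes.
    """
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return total_bytes / 1_000_000 / seconds


# Hypothetical example: a 1 GB file-set read in 4 seconds.
print(throughput_mbps(1_000_000_000, 4.0))
```

The same calculation applies to the create, read and both copy operations; only the measured time differs.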

We’d like to note that the copying test is indicative of the array’s behavior under complex load. In fact, the array is working with two threads (one for reading and one for writing) when copying files.

This test produces too much data, so we will only discuss the results of the Install, ISO and Programs patterns in NTFS which illustrate the most characteristic use of the arrays.

Well, the RAID0 arrays are not as bad as we might have expected after the sequential writing test. They do not deliver the speed we want, though. The speed of writing files is about 2.5 times lower than necessary. It is odd that the arrays reach their top speeds in the Install pattern rather than with the large files of the ISO file-set. The ISO results are really strange besides the generally low speeds. For example, the 4-disk RAID0 is twice as fast as the 8-disk RAID10 although they should be equal. The degraded RAID10 is somehow faster than the healthy one. So, the controller does have problems with writing.

The RAID5 and RAID6 arrays have problems, too. As in the sequential writing test, the first places go to the degraded arrays, which is nonsense. Moreover, the 8-disk arrays are very slow with the large files of the ISO pattern: funnily, the 4-disk RAID5 is 50% faster than the 8-disk one. It is only with the small files of the Programs pattern that the standings are more or less all right but the speeds are no good there, either.
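The expectation that a 4-disk RAID0 and an 8-disk RAID10 should write at the same speed comes from a simple idealized model: a RAID0 spreads data over all of its disks, whereas a RAID10 writes every block twice, so only half of its disks carry distinct data. The Python sketch below illustrates this. The function name and the 100MBps per-disk figure are hypothetical, and real controllers add overhead the model ignores.

```python
def ideal_seq_write_mbps(level: str, n_disks: int, per_disk_mbps: float) -> float:
    """Idealized sequential write speed, ignoring controller overhead."""
    if level == "RAID0":
        data_disks = n_disks          # every disk holds distinct data
    elif level == "RAID10":
        data_disks = n_disks // 2     # each block is mirrored on a second disk
    else:
        raise ValueError(f"unsupported level: {level}")
    return data_disks * per_disk_mbps


PER_DISK_MBPS = 100.0  # hypothetical per-disk write speed

print(ideal_seq_write_mbps("RAID0", 4, PER_DISK_MBPS))
print(ideal_seq_write_mbps("RAID10", 8, PER_DISK_MBPS))
```

Both calls give the same figure in this model, which is why a twofold gap between the two arrays in the ISO pattern looks anomalous.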

The controller seems to hit some speed limitation at reading: the RAID0 and RAID10 arrays all have about the same speeds. It is funny with the Install pattern: the arrays’ read speed is lower than their write speed. The speed of reading large files is good, though. This controller’s read speed ceiling is much higher than that of any other controller we have tested before. The 3ware 9690SA could not yield over 160MBps while the Adaptec easily delivers 400MBps.

By the way, judging by the speed of the 4-disk RAID10 the controller reads from both disks of the mirrors. Otherwise, we would not have a read speed higher than 250MBps.
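The 250MBps ceiling can be reproduced with a short calculation. A 4-disk RAID10 consists of two mirrors, so if only one disk per mirror were read, the array could not exceed twice the speed of a single disk. The Python sketch below assumes a per-disk sequential read speed of about 125MBps, a value we infer from the 250MBps figure rather than one measured here; the function name is ours.

```python
def raid10_read_ceiling_mbps(n_disks: int, per_disk_mbps: float,
                             read_both_sides: bool) -> float:
    """Idealized sequential read ceiling of a RAID10 array."""
    if n_disks % 2:
        raise ValueError("RAID10 needs an even number of disks")
    mirrors = n_disks // 2
    # Either one disk per mirror serves reads, or both do.
    active_disks = n_disks if read_both_sides else mirrors
    return active_disks * per_disk_mbps


PER_DISK_MBPS = 125.0  # assumption inferred from the 250MBps figure

print(raid10_read_ceiling_mbps(4, PER_DISK_MBPS, read_both_sides=False))
print(raid10_read_ceiling_mbps(4, PER_DISK_MBPS, read_both_sides=True))
```

Any measured speed above the first value implies that at least some requests are served by the second disk of a mirror.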

And once again we see the 8-disk arrays hit the speed limitation when processing large files. The standings are overall what they should be, though. The degraded arrays slow down proportionally to the reduction of the number of disks in them.

The Copy Near test is reminiscent of the oddities we have seen at writing: all the arrays are faster with the Install rather than ISO files. The 8-disk RAID0 arrays are very slow while the 8-disk RAID10 is similar to the 4-disk RAID0, just as in theory. The degraded RAID10 performs well, being almost as fast as the healthy array.

We see the checksum-based arrays have the same problems with the large ISO files, but the problems are not so big now. The degraded arrays are not in the lead anymore. The standings are overall logical.

Copying from one partition to another produces roughly the same results as the Copy Near test. The only significant difference is that the 4-disk RAID5 and RAID6 have too low performance with the ISO files. The controller shows a strange dislike of large files. Its firmware calls for improvement.

Performance in WinBench 99

Finally, here are data-transfer graphs recorded in WinBench 99:

And these are the data-transfer graphs of the RAID arrays built on the Adaptec ASR-5805 controller:

- RAID10, 4 disks

- RAID10, 8 disks

- RAID10, 8 disks minus 1

- RAID5, 4 disks

- RAID5, 8 disks

- RAID5, 8 disks minus 1

- RAID6, 4 disks

- RAID6, 8 disks

- RAID6, 8 disks minus 1

- RAID6, 8 disks minus 2

There are no graphs for the RAID0 arrays because WinBench 99 refused to perform the test on them, issuing an error message. We could not make those arrays pass the test even by reducing the test zone. We do not doubt the controller’s reliability with RAID0 (the many hours of IOMeter tests proved to us that there were no problems), so this must be the benchmark’s fault (it is 10 years old already, by the way).

The following diagram compares the read speeds of the arrays at the beginning and end of the partitions created on them. The standings are very neat: every array takes its proper place. The data-transfer graphs have few fluctuations of speed, and even the degraded arrays perform steadily. The only disturbing factor is that the 8-disk RAID5 has a long flat stretch in the graph: it looks like 700MBps is an artificial limitation for it. It is a shame we could not record the graphs for the RAID0 arrays.

Conclusion

Recalling the impression the ASR-3405 made on us back in the day, we should confess that the fifth series from Adaptec is definitely better. The combination of a high-performance processor and optimized firmware makes the ASR-5805 completely different from its predecessor. This controller delivers superb performance with every array type under server loads, can choose the disk of a mirror that takes less time to access data, and can also read from both disks of a mirror simultaneously.

It is also very good with RAID5 and RAID6 arrays which are difficult to write good firmware for. Optimizations are present for degraded arrays, too. The speed of sequential reading is far from the theoretical one, but better than what the competitor products we have tested so far can offer.

On the downside is the terribly low performance of some arrays at sequential writing. The controller is especially poor at writing large files, so Adaptec still has something to improve.