Two Kingston HyperX 120 GB SSDs vs. Kingston HyperX 240 GB SSD

Today almost every mainboard has an onboard RAID controller. So, why not try to increase the performance of the disk subsystem by building a RAID 0 array out of a couple of SSDs?

Using multiple hardware devices in a single subsystem is a popular trend on the PC market. Enthusiasts often build graphics subsystems out of two or more graphics cards whereas users who need high computing performance can go for multiprocessor workstations. This approach works for the disk subsystem, too. It is very easy to increase its performance by combining two or more hard disks into a RAID. Data is distributed among multiple disks on level 0 RAIDs, so the resulting data-transfer speed can be increased substantially by reading from or writing to several disks in parallel.

As a matter of fact, when SSDs were just coming to the consumer market, users would often argue about what kind of RAID it would take to match the speed of an SSD. Of course, things are different now. The SATA 6 Gbit/s interface and the new generation of controllers have raised the performance bar of modern SSDs far above that of conventional hard disks. This gave rise to another question, though. Can we increase our disk subsystem performance by combining multiple SSDs into a RAID?

Indeed, there seem to be no reasons for RAID technology not to be beneficial for SSDs. SSDs are fast with small chunks of data whereas chipset-integrated RAID controllers offer direct communication with the CPU at data-transfer speeds which are many times that of SATA 6 Gbit/s. So, SSD-based RAID0 looks like a viable idea, especially as it doesn’t involve extra expense. The total capacity of a RAID is the sum of the capacities of its constituents whereas the price of an SSD is directly proportional to its capacity. So, if you use the free RAID controller integrated into your mainboard’s chipset, you will end up with the same cost per gigabyte of storage as with a single larger-capacity SSD.

So, SSD-based RAID0 looks attractive in theory, but we are going to check this idea out in practice. Kingston was kind enough to offer us two 120-gigabyte SSDs and one 240-gigabyte SSD from its top-end HyperX series so that we could directly compare a dual-SSD RAID0 with a single SSD of the same capacity.

Closer Look at Kingston HyperX SSD Series

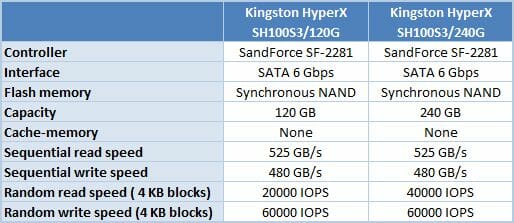

Kingston’s HyperX series consists of rather ordinary enthusiast-oriented SSDs based on second-generation SandForce controllers. They employ the well-known SF-2281 chip and 25nm NAND flash memory manufactured by Intel or Micron. In other words, the HyperX series is the top-performance version of SandForce’s current platform, identical to Corsair’s Force Series GT or OCZ’s Vertex 3 in its internals.

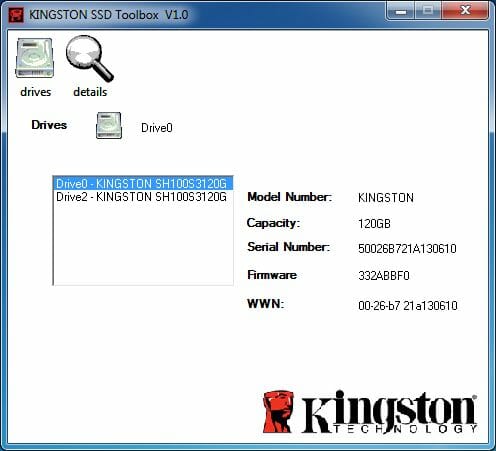

The only thing that differentiates the HyperX series from the competitors is the eye-catching design of the case and the exclusive Toolbox utility that helps you view SSD-related information including S.M.A.R.T.

The utility resembles OCZ’s Toolbox but lacks the firmware update function (Kingston offers a special tool for that) and the Secure Erase function.

The performance difference between the 120GB and 240GB models is due to the number of NAND devices in each. With each 25nm MLC flash die having a capacity of 8 gigabytes, the 120GB model contains 16 flash devices whereas the 240GB model contains 32. Considering that the SandForce SF-2281 controller has an eight-channel architecture, the 120GB and 240GB models can use 2-way and 4-way interleaving, respectively. A higher level of interleaving means higher performance as the controller can go to another NAND device without waiting for the current one to complete its operation. This is in fact similar to RAID0 but within a single SSD, on the level of the SandForce controller.
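The interleaving arithmetic above can be sketched in a few lines of Python. This is only an illustration of the numbers quoted in the text (8 GB per die, eight channels); the function and constant names are ours, and the even division of dies across channels is an assumption.

```python
# Figures taken from the text: 8 GB per 25nm MLC die, eight controller channels.
DIE_CAPACITY_GB = 8
CHANNELS = 8

def interleave_ways(dies, channels=CHANNELS):
    """Number of NAND dies sharing each channel (assumes an even split)."""
    return dies // channels

def raw_capacity_gb(dies, die_capacity_gb=DIE_CAPACITY_GB):
    """Total flash on board, before any space is reserved by the controller."""
    return dies * die_capacity_gb

for label, dies in (("HyperX 120GB", 16), ("HyperX 240GB", 32)):
    print(label, "raw flash:", raw_capacity_gb(dies), "GB,",
          "interleaving:", f"{interleave_ways(dies)}-way")
```

The raw flash figures come out higher than the advertised capacities because part of the flash is set aside as a reserve pool.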

Testbed Configuration

The testbed we are going to use for this test session is based on a mainboard with the Intel H67 chipset, which offers two SATA 6 Gbit/s ports. We connect our SSDs to these particular ports.

Here is the full testbed configuration:

- Intel Core i5-2400 (Sandy Bridge, 4 cores, 3.1 GHz, EIST and Turbo Boost turned off);

- Foxconn H67S mainboard (BIOS A41F1P01);

- 2 x 2 GB DDR3-1333 SDRAM DIMM 9-9-9-24-1T;

- Crucial m4 256 GB system disk (CT256M4SSD2);

- Tested SSDs:

  - Kingston HyperX 120 GB (SH100S3/120G, firmware version 332);

  - Kingston HyperX 240 GB (SH100S3/240G, firmware version 332);

- Microsoft Windows 7 SP1 Ultimate x64 OS;

- Drivers:

  - Intel Chipset Driver 9.2.0.1030;

  - Intel HD Graphics Driver 15.22.1.2361;

  - Intel Management Engine Driver 7.1.10.1065;

  - Intel Rapid Storage Technology 10.8.0.1003.

Building a RAID0 with SSDs

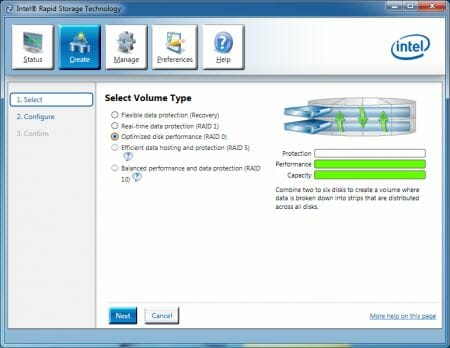

We are going to build our SSD-based RAID using a standard SATA RAID controller that is integrated into modern chipsets. Such controllers work well with single SSDs and suit the purpose of this test session just fine, especially as they are available on most mainboards and thus do not require extra investment on the user’s part.

Our testbed is based on an LGA1155 mainboard with the H67 chipset whose SATA controller supports RAID. You only need to change the controller’s operation mode from AHCI to RAID in the mainboard’s BIOS. However, changing the BIOS option is likely to make your OS unbootable. You’ll be getting a blue screen of death as you try to boot your computer up because the RAID driver is off by default in Windows. There are two ways to solve the problem. One is to reinstall Windows after you’ve switched to RAID mode. The necessary driver will be enabled automatically during installation. The other way is to find the Start variable in the system registry (HKEY_LOCAL_MACHINE\System\CurrentControlSet\Services\Iastorv) and set its value to 0 before changing the SATA controller settings in your mainboard’s BIOS. Then, once you have booted in RAID mode, you just reinstall the Intel Rapid Storage Technology driver.
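For those who prefer a script to the Registry Editor, the registry change described above can be sketched with Python’s standard `winreg` module. This is a minimal sketch rather than a tested procedure: the function name is ours, we assume the Start value is a DWORD, and the script has to be run from an administrator account on Windows. Back up the registry before trying anything like this.

```python
import sys

# Key path as given in the text; it sits under HKEY_LOCAL_MACHINE.
SERVICE_KEY = r"System\CurrentControlSet\Services\Iastorv"

def enable_raid_driver_at_boot(key_path=SERVICE_KEY):
    """Set the service's Start value to 0 so the RAID driver loads at boot.

    Hypothetical helper for illustration; requires Windows and admin rights.
    """
    if sys.platform != "win32":
        raise OSError("the Windows registry is only available on Windows")
    import winreg  # standard library, Windows only
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, key_path, 0,
                        winreg.KEY_SET_VALUE) as key:
        # Assumption: Start is stored as a REG_DWORD value.
        winreg.SetValueEx(key, "Start", 0, winreg.REG_DWORD, 0)

# Usage (run before switching the BIOS from AHCI to RAID):
#     enable_raid_driver_at_boot()
```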

With RAID mode turned on and the required drivers enabled, you can proceed to building your RAID. You use the Intel RST driver for that and are only required to specify the disks you want to combine and the RAID level. It’s RAID0 for our test.

The rest of the configuration is performed automatically, but you can change the stripe size (128 KB by default) and enable the driver’s write caching (if enabled, this can lead to data loss in case of a power failure).

We wouldn’t recommend turning the write caching on, especially as the OS itself offers such functionality. As for the stripe size (the size of the fragments that data is broken into when stored on a RAID), it is not quite reasonable to rely on the driver’s default of 128 kilobytes. A large stripe size makes sense for conventional hard disks, which are much faster at reading or writing large blocks of sequentially placed data than at processing small data blocks because the latter operation involves too much repositioning of the read/write heads. SSDs, for their part, boast a very low access time, so choosing a small stripe size should ensure better performance with small files.
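To make the role of the stripe size more tangible, here is a minimal Python sketch of how a RAID0 may map a logical offset to a member disk. We assume a plain round-robin layout; the actual on-disk layout used by the Intel RST driver is not documented in this review, and the function names are ours.

```python
def locate_block(offset, stripe_size=32 * 1024, disks=2):
    """Map a logical byte offset to (disk index, byte offset on that disk).

    Assumes stripes are handed out to the disks in round-robin order.
    """
    stripe_index, within_stripe = divmod(offset, stripe_size)
    disk = stripe_index % disks   # which member disk holds this stripe
    row = stripe_index // disks   # how many stripes precede it on that disk
    return disk, row * stripe_size + within_stripe

def disks_touched(offset, length, stripe_size=32 * 1024, disks=2):
    """List the member disks involved in one request of `length` bytes."""
    first = offset // stripe_size
    last = (offset + length - 1) // stripe_size
    return sorted({stripe % disks for stripe in range(first, last + 1)})

# A 64 KB request is served by one disk at a 128 KB stripe size
# but is split across both disks at a 32 KB stripe size.
print(disks_touched(0, 64 * 1024, stripe_size=128 * 1024))
print(disks_touched(0, 64 * 1024, stripe_size=32 * 1024))
```

With this layout, a smaller stripe size lets medium-sized requests be spread across both SSDs, whereas a single 4 KB request always lands on one disk regardless of the stripe size.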

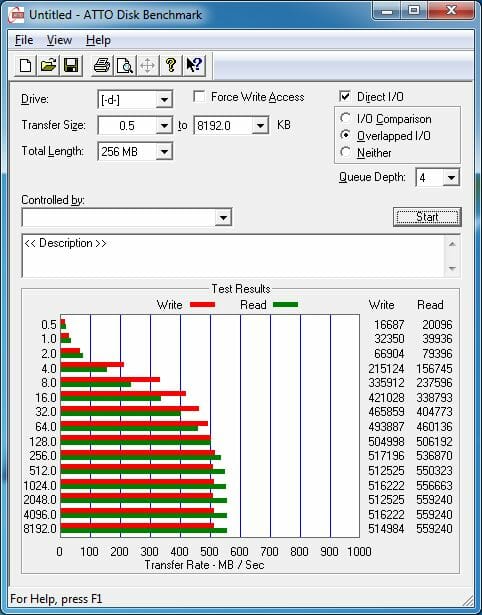

It must be noted that a single Kingston HyperX 120GB can process larger data blocks faster but there are other things to consider.

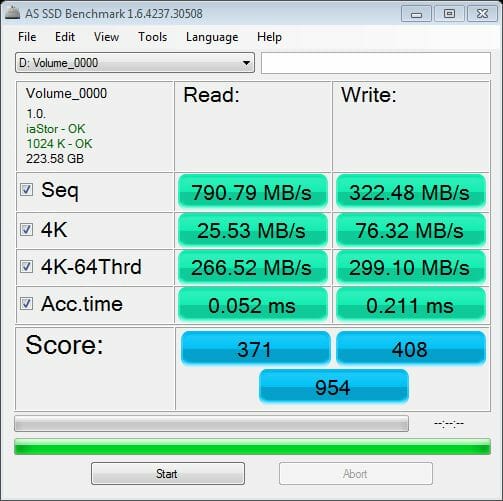

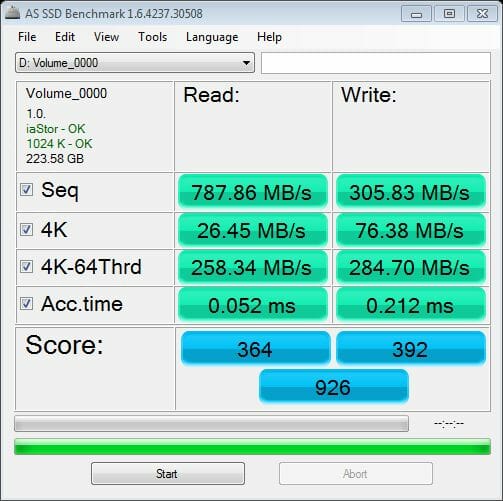

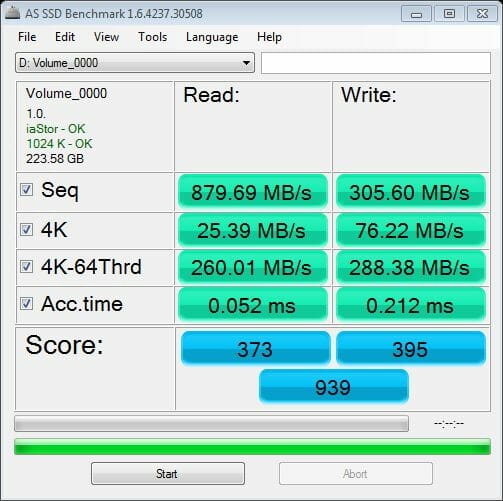

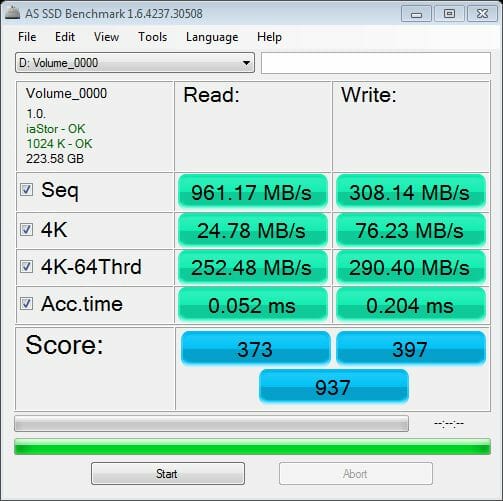

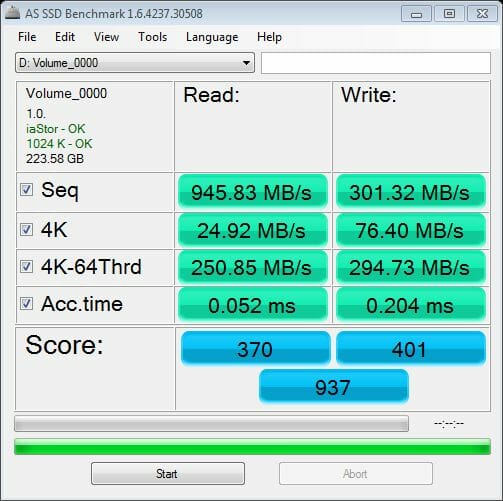

The Intel RST driver can process the data request queue intelligently, ensuring that the SSD-based RAID0 is fast even at a small stripe size. We’ve carried out a brief test of a RAID0 built out of two HyperX 120GB SSDs using different stripe sizes.

The results of AS SSD Benchmark suggest that the overall performance of the RAID0 doesn’t vary greatly as the stripe size changes. On the other hand, this parameter does affect the speed of sequential operations as well as the processing of small data blocks at a long request queue. The RAID0 seems to deliver its best performance with a 32KB stripe size, so the driver’s default doesn’t look optimal. We will use a stripe size of 32 kilobytes for today’s tests and recommend that you use this setting, too, if you build a RAID0 out of SSDs with second-generation SandForce controllers.

There is one more important thing to be noted here. As soon as your RAID is built, it is identified by the OS as a single whole and you cannot access its constituents separately. This may be inconvenient. For example, you won’t be able to update the firmware or view the S.M.A.R.T. information or perform a Secure Erase for the SSDs in your RAID. But the biggest problem is that the OS won’t be able to use the TRIM command which is supposed to protect SSDs from performance degradation.

Performance

Random and Sequential Read/Write Speed

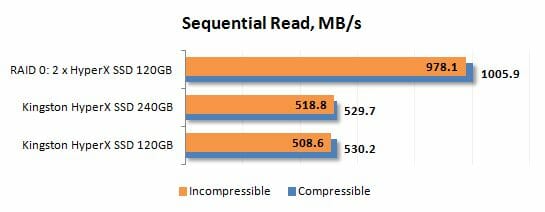

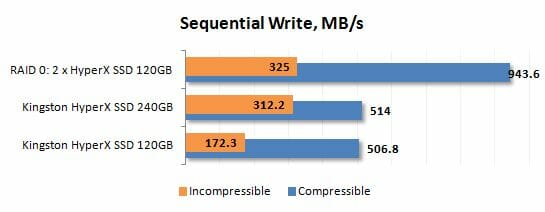

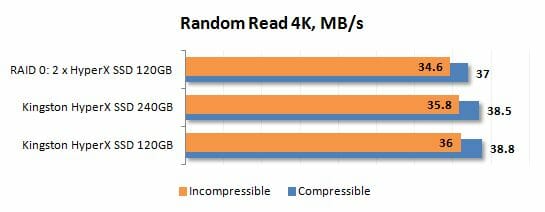

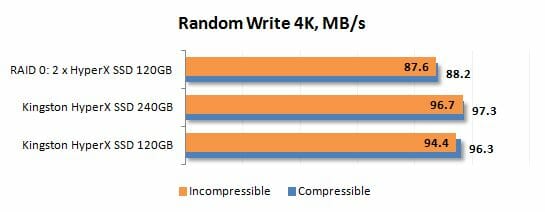

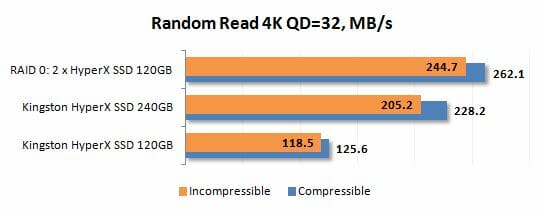

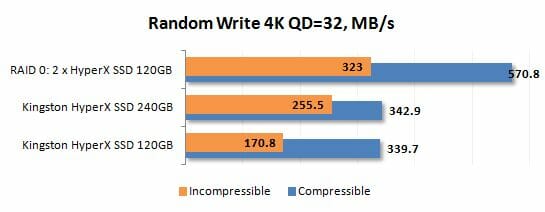

We benchmark the speed of random and sequential reading and writing with CrystalDiskMark 3.0.1. This benchmark is handy as it can measure the speed of an SSD with both random incompressible and predefined compressible data. So, there are two numbers in the diagrams that reflect the maximum and minimum speed. The real-life performance of an SSD is going to be in between those two numbers depending on how effectively the SF-2281 controller can compress the data.
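The gap between the two numbers comes from how well the data can be compressed. The following Python sketch illustrates the idea with the standard `zlib` module. Note that zlib is only a stand-in here (the compression algorithm inside the SF-2281 is not public), and using a zero-filled buffer as the “compressible” case is our assumption.

```python
import os
import zlib

def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower means more compressible)."""
    return len(zlib.compress(data)) / len(data)

SIZE = 1 << 20  # 1 MB test buffers

incompressible = os.urandom(SIZE)  # random data: nothing to squeeze out
compressible = bytes(SIZE)         # zero-filled data: compresses almost to nothing

print(f"random data: {compression_ratio(incompressible):.3f}")
print(f"zero fill:   {compression_ratio(compressible):.3f}")
```

A controller that compresses data on the fly has much less to write to flash in the second case, which is why the two test patterns mark the lower and upper bounds of its speed.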

The performance tests in this section refer to SSDs in their “fresh” out-of-the-box state. No degradation could have taken place yet.

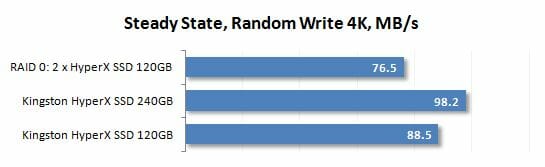

The 120GB SSD is considerably slower than its 240GB cousin but the RAID0 array built out of two 120GB SSDs beats the latter. As we see, RAID0 technology helps boost performance in linear operations and during processing of small data chunks at a long queue depth. On the other hand, the RAID0 is somewhat slower than the 240GB SSD at processing random-address 4KB data blocks.

Degradation and Consistent Performance

Unfortunately, SSDs are not always as fast as in their fresh state. In many situations their real-life performance goes down far below the numbers you have seen in the previous section of this review. The reason is that, having run out of free pages in flash memory, the SSD controller has to erase memory pages before writing to them, which involves certain latencies.

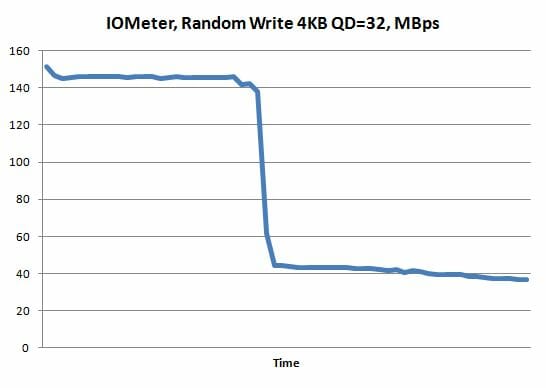

This is best illustrated by the following diagram. We are writing data to our SSD and watching the write speed change.

The write speed plummets at a certain moment. It’s when the total amount of data written equals the SSD’s capacity. Of course, users are more interested in the consistent performance of their SSDs than in the peak speed they are going to show only in the first period of their usage. SSD makers, for their part, specify the speed characteristics of fresh SSDs for marketing reasons. That’s why we test the performance hit that occurs when a fresh SSD turns into a used one.

The catastrophic performance hit illustrated by the diagram above is somewhat artificial, however, and only reflects the case of incessant writing. When it comes to real-life scenarios, modern SSD controllers can alleviate the performance hit by erasing unused flash memory pages beforehand. They use two techniques for that: idle-time garbage collection and TRIM. TRIM doesn’t work for RAID0s, however, as the OS has no direct access to the SSDs. Therefore it is quite possible that a single SSD can turn out to be better than a corresponding RAID0 after both have been used for a while.

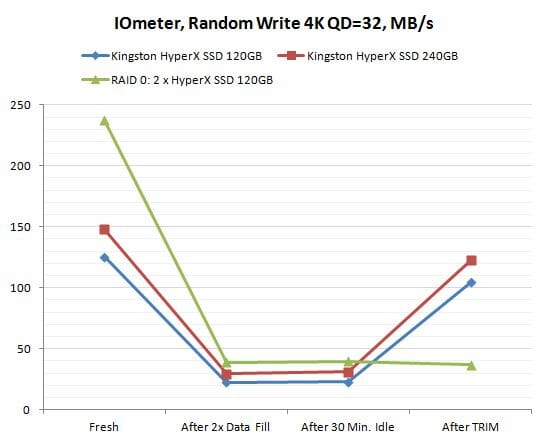

We are going to check this out in practical tests according to the SNIA Solid State Storage Performance Test Specification. The point is that we measure write speed in four different cases: 1) the RAID and SSDs being in their fresh state; 2) after the RAID and SSDs have been twice fully filled with data; 3) after a 30-minute pause so that the SSD controller could restore performance by collecting garbage; and 4) a final test after issuing a TRIM command.

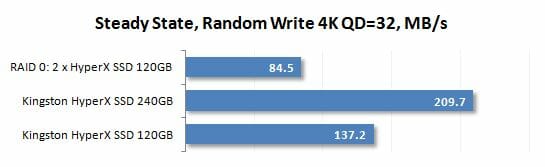

We measured the speed of writing of random-address 4KB data blocks at a request queue depth of 32 commands using IOMeter 1.1.0 RC1. Test data were pseudorandom.

Performance degradation is a real problem indeed. As you can see, the SSDs do suffer a terrible performance hit. And, most unfortunately, the SF-2281 based products do not benefit much from the garbage collection technique. The reserve pool, which amounts to about 7% of the full capacity of these SSDs, doesn’t help them at all. It is only the TRIM command that can bring performance back to a more or less normal level. However, TRIM doesn’t work with RAIDs, so single SSDs are going to be eventually much faster at writing than an SSD-based RAID0.

It means that the write speeds shown in the diagrams in the previous section of our review reflect but a fragment of the overall picture. As soon as fresh SSDs turn into used ones, their write speed changes completely. The next diagrams show this speed as benchmarked by CrystalDiskMark 3.0.1.

You can see the SSD-based RAID0 slowing down over time so much that it becomes slower than the single 120GB SSD with 4KB data blocks, as the single SSD benefits from the TRIM command. Thus, the real-life benefits of an SSD-based RAID0 boil down to its high read speed, which does not degrade over time as the SSDs get filled with data.

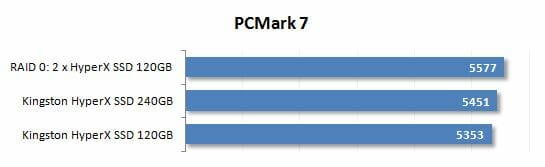

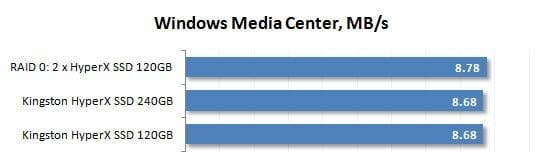

Futuremark PCMark 7

PCMark 7 incorporates an individual disk subsystem benchmark which is based on real-life applications. It reproduces typical disk usage scenarios and measures how fast they are performed. Moreover, the disk access commands are not reproduced one by one, but with pauses necessary to process the data, just like in real life.

The benchmark reports an overall disk subsystem performance rating as well as the data-transfer rate in particular scenarios. Take note that the speed in these scenarios is rather low due to the pauses between input and output operations. In other words, PCMark 7 shows you the speed of the disk subsystem from the application’s point of view. Rather than the pure performance of an SSD, this benchmark will show us how good it is in practical tasks.

We ran PCMark 7 on used SSDs, i.e. in the state they are going to have most of the time in real-life computers. Their performance is thus affected not only by their controller or flash memory type but also by the internal algorithms that fight performance degradation.

The overall PCMark 7 score can serve as an intuitive benchmark for users who want to know the relative standings of SSDs in terms of performance but do not care about learning the technical details. Judging by the results, the RAID0 is overall faster than the single SSD of the same capacity. Considering that a computer’s disk subsystem is mostly used for reading in typical scenarios, these results seem to be true to life.

You may want to take a look at the individual subtests. The gaps between the contestants are quite impressive in some of them.

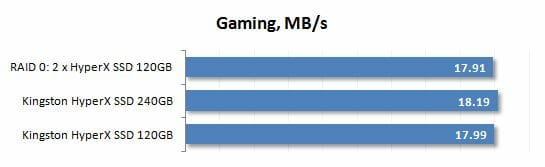

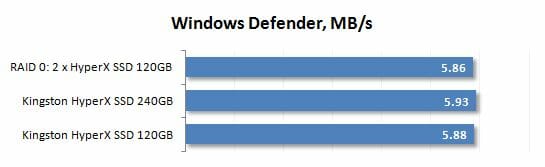

There are scenarios that are very difficult for RAID0s based on SSDs with second-generation SandForce controllers. These are the scenarios in which the disk subsystem has to process a lot of small data blocks: Gaming and Windows Defender.

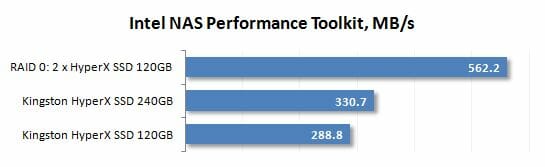

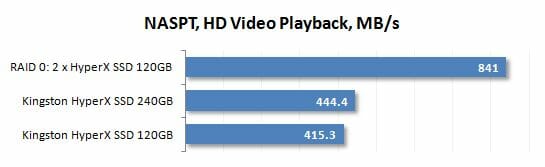

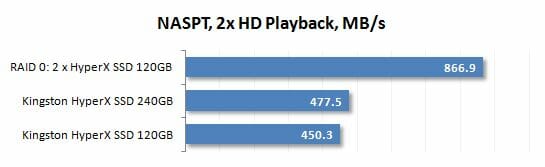

Intel NAS Performance Toolkit

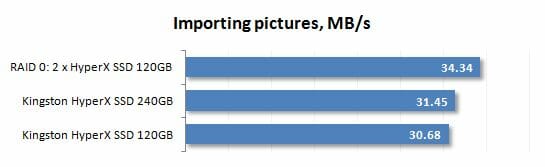

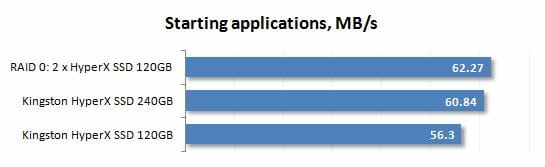

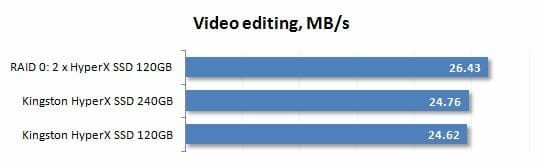

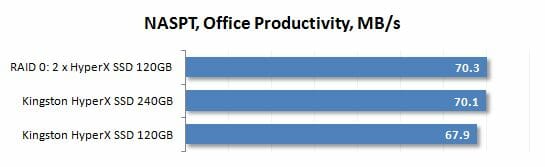

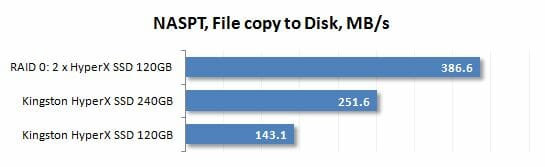

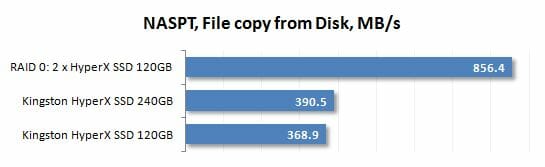

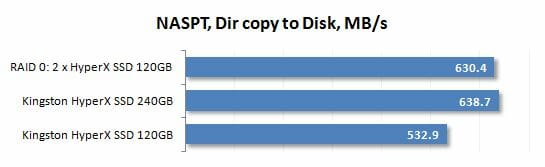

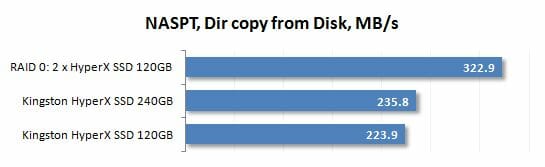

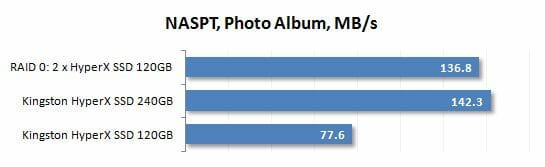

Yet another disk subsystem benchmark that uses real-life usage scenarios, Intel NASPT reproduces predefined disk activity traces and measures how much time they take to be performed, just like in PCMark 7. Together with the latter, this benchmark helps get a general notion of a disk subsystem’s performance in real-life applications. The SSDs are tested in their used state again.

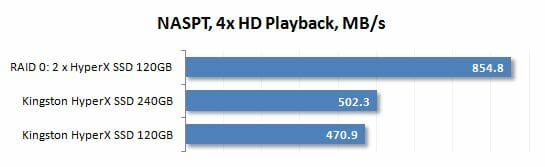

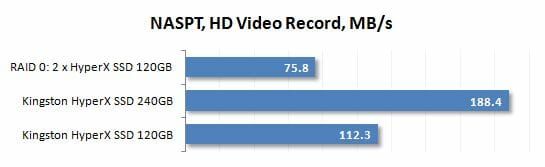

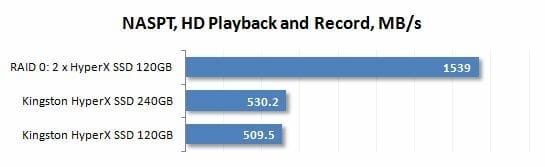

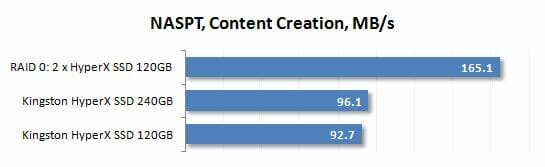

Intel NASPT prefers the RAID0 consisting of two 120GB SSDs to the single 240GB SSD. The RAID0 is almost twice as fast as its opponent, but this success of RAID technology can be somewhat marred by the results of the individual subtests.

All goes well until the SSDs switch from reading to writing. The single 240GB SSD is a clear winner at writing.

Conclusion

Unfortunately, today’s tests do not provide a clear answer to the question of whether building a RAID0 out of modern SSDs makes sense. This solution has its highs and lows, and the best we can do is list them all and let you be the decision maker.

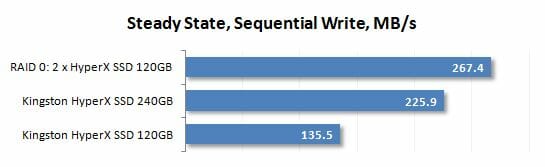

RAID0 is a traditional method of boosting your disk subsystem performance. The trick works with SSDs, too. Combining two SSDs into a RAID0 helps increase linear read/write speeds as well as the speed of processing small data blocks at a long request queue. We did notch very impressive sequential read and write speeds in our tests, getting much higher than the SATA 6 Gbit/s bandwidth.
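For reference, the ceiling of a single SATA 6 Gbit/s port is easy to estimate. The sketch below assumes the 8b/10b line encoding used by SATA (10 bits on the wire per byte of payload) and ignores protocol overhead, so real-life throughput is somewhat lower; the function name is ours.

```python
LINE_RATE_BITS_PER_S = 6_000_000_000  # SATA 6 Gbit/s

def sata_payload_mb_per_s(line_rate=LINE_RATE_BITS_PER_S, ports=1):
    """Upper bound on payload throughput in MB/s for `ports` SATA links.

    8b/10b encoding: only 8 of every 10 transmitted bits carry data.
    """
    payload_bits = line_rate * 8 // 10
    return ports * payload_bits // 8 // 1_000_000

print(sata_payload_mb_per_s())         # one port
print(sata_payload_mb_per_s(ports=2))  # two ports, as in our RAID0
```

A two-disk RAID0 uses two ports at once, so its sequential speed is not bound by the limit of a single link.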

However, we should keep in mind that modern SSDs have a tendency to get faster as their capacity grows even within the same product series, so a two-disk RAID0 may turn out to be slower than a single large-capacity SSD. More importantly, SATA RAID controllers, including those in modern chipsets, do not support the TRIM command. As a result, the array’s writing performance degrades over time whereas single SSDs are less susceptible to this problem.

Thus, a RAID0 will only be superior to a single SSD at linear operations whereas random-address operations will expose its weakness. That’s why we can’t prefer the RAID0 solution to a single SSD without reservations. On the other hand, most of our lifelike benchmarks do show the RAID0 to be overall faster. In other words, the RAID0 is better on average, especially as it doesn’t involve any investment: the cost per gigabyte is the same for a RAID0 and an SSD of the same capacity.

There is some inconvenience about running an SSD RAID0 that should also be mentioned. You cannot monitor the health of your SSDs in a RAID0 or update their firmware. A RAID0 will also have lower reliability since a failure of any SSD causes the loss of all data stored on all the SSDs in the array.
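The reliability penalty can be estimated with a simple formula: if each SSD fails independently with probability p over a given period, an n-disk RAID0 loses its data with probability 1 - (1 - p)^n. Here is a minimal Python sketch; the 2% figure is a purely hypothetical number chosen for illustration, not a measured failure rate of any drive, and the independence of failures is an assumption.

```python
def array_failure_probability(p_single, drives=2):
    """Probability that at least one drive of a RAID0 fails.

    Assumes drive failures are independent; any single failure loses the array.
    """
    return 1 - (1 - p_single) ** drives

# Hypothetical example: a 2% chance of failure per drive over some period.
p = 0.02
print(f"single SSD:      {array_failure_probability(p, drives=1):.4f}")
print(f"two-disk RAID0:  {array_failure_probability(p, drives=2):.4f}")
```

For small p the risk is nearly proportional to the number of drives, so a two-disk RAID0 is roughly twice as likely to lose data as a single SSD.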